International Journal of Scientific & Engineering Research, Volume 2, Issue 12, December-2011 1

ISSN 2229-5518

Machine Transliteration for Indian Languages: A Literature Survey

Antony P J and Dr. Soman K P

Abstract— This paper address the various developments in Indian language machine transliteration system, which is considered as a very important task needed for many natural language processing (NLP) applications. Machine transliteration is an important NLP tool required mainly for translating named entities from one language to another. Even though a number of different transliteration mechanisms are available for worlds top level languages like English, European languages, Asian languages like Chinese, Japanese, Korean and Arabic, still it is an initial stage for Indian languages. Literature shows that, recently some recognizable attempts have done for few Indian languages like Hindi, Bengali, Telugu, Kannada and Tamil languages. This paper is intended to give a brief survey on transliteration for Indianlanguages.

Index Terms— Named Entity, Agglutinative, Natural Language Processing, Transliteration, Dravidian Languages

—————————— ——————————

.

Machine transliteration is the practice of transcribing a charac- ter or word written in one alphabetical system into another alphabetical system. Machine transliteration can play an im- portant role in natural language application such as informa- tion retrieval and machine translation, especially for handling proper nouns and technical terms, cross-language applica- tions, data mining and information retrieval system. The transliteration model must be design in such a way that the

2000

1. Oh and Choi

2. Kang, B. J. and K. S. Choi

3. SungYoung Jung et.al.

2003

1. Lee et.al.

2004

Wei Gao Kam- Fai Wong Wai

2008

Dan Goldwasser and Dan Roth

phonetic structure of words should be preserve as closely as

possible.

The topic of machine transliteration has been studied exten-

sively for several different language pairs. Various methodol-

ogies have been developed for machine transliteration based

on the nature of the languages considered. Most of the current transliteration systems use a generative model based on

alignment for transliteration and consider the task of generat- ing an appropriate transliteration for a given word. Such model requires considerable knowledge of the languages. Transliteration usually depends on context. For example, the

English (source) grapheme „a‟ can be transliterated into Kan- nada (target) language graphemes on the basis of its context, like „a‟, ‟aa‟, „ei‟ etc. Similarly „i‟ can be transliterated either „i‟ or „ai‟ on the basis of its context. This is because vowels in English may correspond to long vowels or short vowels or some time combination of vowels [1] in Kannada during trans- literation. Also on the basis of its context, consonants like „c‟,

‟d‟, ‟l‟, or „n‟, has multiple transliterations in Kannada lan-

1994

Arababi

1998

Knight and Graehl

2. Paola and Sanjeev

3. Jaleel and Larkey

2002

1. Al-Onaizan and

Knight

2. Zhang Min LI Haizhou

2001

Fujii and

Ishikawa

Lam

2006

1. Oh and Choi

2. Dmitry and

Chinatsu Aone

3 Alexandre and

Dan Roth

guage. For transliterating names, we have to exploit the pho- netic correspondence of alphabets and sub-strings in English to Kannada. For example, “ph” and “f” both map to the same sound of (f). Likewise, “sha” in Kannada (as in Roshan) and “tio” in English (as in ration) sound similar. The transliteration model should be design while considering all these complexi- ties.

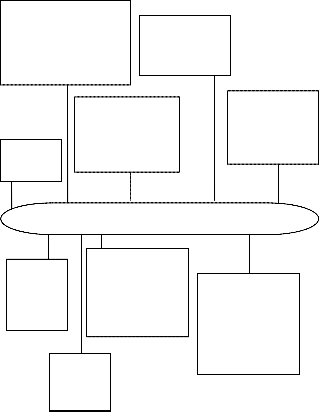

The figure 1 shows the researchers who proposed different approaches to develop various machine transliteration sys- tems.

Fig.1. Contributors to Machine Transliteration

The very first attempt in transliteration was done by Arababi through a combination of neural network and expert systems for transliterating from Arabic-English in 1994 [2]. The pro- posed neural network and knowledge-based hybrid system generate multiple English spellings for Arabic person names.

The next development in transliteration was based on a statis-

tical based approach proposed by Knight and Graehl in 1998

for back transliteration from English to Japanese Katakana.

This approach was adapted by Stalls and Knight for back transliteration from Arabic to English.

IJSER © 2011

International Journal of Scientific & Engineering Research, Volume 2, Issue 12, December-2011 2

ISSN 2229-5518

There are three different machine transliteration develop- ments in the year 2000, from three separate research team. Oh and Choi develped a phoneme based model using rule based approach incorporating phonetics as an intermediate repre- sentation. This English-Korean (E-K) transliteration model is built using pronunciation and contextual rules. Kang, B. J. and K. S. Choi, in their work presented an automatic character alignment method between English word and Korean transli- teration. Aligned data is trained using supervised learning decision tree method to automatically induce transliteration and back-transliteration rules. This methodology is fully bi- directional, i.e. the same methodology is used for both transli- teration and back transliteration. SungYoung Jung proposed a statistical English-to-Korean transliteration model that exploits various information sources. This model is a generalized mod- el from a conventional statistical tagging model by extending Markov window with some mathemaical approximation tech- niques. An alignment and syllabification method is developed for accurate and fast operation.

In the year 2001, Fujii and Ishikawa describe a transliteration system for English-Japanese Cross Language Information Re- trieval (CLIR) task that requires linguistic knowledge.

In the year 2002, Al-Onaizan and Knight developed a hybrid

model based on phonetic and spelling mappings using Finite

state machines. The model was designed for transliterating

Arabic names into English. In the same year, Zhang Min LI

Haizhou SU Jian proposed a direct orthographic mapping

framework to model phonetic equivalent association by fully exploring the orthographical contextual information and the

orthographical mapping. Under the DOM framework, a joint source-channel transliteration model (n-gram TM) captures the source-target word orthographical mapping relation and the contextual information.

An English-Arabic transliteration scheme was developed by

Jaleel and Larkey based on HMM using GIZA++ approach in

2003. Mean while they also attempted to develop a translitera-

tion system for Indian language. Lee et.al. [2003] developed

the noisy channel model for English Chinese language pair, in

the same set of statistics and algorithms, transformation know- ledge is acquired automatically by machine learning from ex- isting origin-transliteration name pairs, irrespective of specific dialectal features implied. The method starts off with direct estimation for transliteration model, which is then combined with target language model for postprocessing of generated transliterations. Expectation-maximization (EM) algorithm is applied to find the best alignment (Viterbi alignment) for each training pair and generate symbol-mapping probabilities. A weighted finite state transducer is built (WFST) based on sym- bol-mapping probabilities, for the transcription of an input English phoneme sequence into its possible pinyin symbol sequences.

Dmitry Zelenko and Chinatsu Aone proposed two discrimina- tive methods for name transliteration in 2006. The methods correspond to local and global modeling approaches in model- ing structured output spaces. Both methods do not require alignment of names in different languages but their features are computed directly from the names themselves. The me- thods are applied to name transliteration from three languages Arabic, Korean, and Russian into English. In the same year Alexandre Klementiev and Dan Roth developed a discrimina- tive approach for translieration. A linear model is trained to decide whether a word T is a transliteration of an Named Entity S.

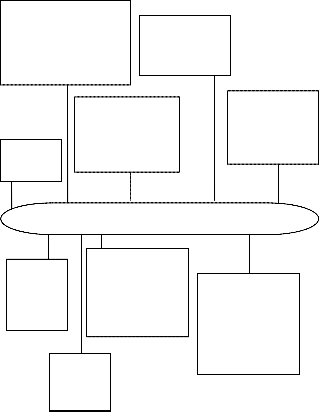

Transliteration is generally classified in to three types namely, Grapheme based, Phoneme based, hybrid models and corres- pondence-based transliteration model [1][2].

Machine Transliteration

which the back transliteration problem is solved by finding the most probable word E, given transliteration C. Letting P(E) be the probability of a word E, then for a given transliteration C, the back-transliteration probability of a word E can be writ- ten as P(E|C). This method requires no conversion of source words into phonetic symbols. The model is trained automati-

Gra-

pheme

based

Pho-

neme

based

WEST

EMW

Hybr-

id

Corres

respon

pon-

dence

cally on a bilingual proper name list via unsupervised learn- ing. Model parameters are estimated using EM. Then, the channel decoder with Viterbi decoding algorithm is used to find the word Ê that is the most likely to the word E that gives rise to the transliteration C. The model is tested for English Chinese language pair. In the same year Paola Virga and San- jeev Khudanpur demonstrated the application of statistical machine translation techniques to “translate” the phonemic representation of an English name, obtained by using an au- tomatic text-to-speech system, to a sequence of initials and finals, commonly used subword units of pronunciation for Chinese.

Wei Gao Kam-Fai Wong Wai Lam proposed an efficient algo-

Source Channel Model

Maximum Entropy Model

model

Decision Tree Model

CRF Model

model

rithm for phoneme alignment in 2004. In this a data driven technique is proposed for transliterating English names to their Chinese counterparts, i.e. forward transliteration. With

Fig. 2. General Classification of Machine Transliteration

IJSER © 2011

International Journal of Scientific & Engineering Research, Volume 2, Issue 12, December-2011 3

ISSN 2229-5518

These models are classified in terms of the units to be transli- terated. The grapheme based approach (Lee & Choi, 1998; Jeong, Myaeng, Lee, & Choi, 1999; Kim, Lee, & Choi, 1999; Lee,

1999; Kang & Choi, 2000; Kang & Kim, 2000; Kang, 2001; Goto, Kato, Uratani, & Ehara, 2003; Li, Zhang, & Su, 2004) treat transliteration as an orthographic process and tries to map the source graphemes directly to the target graphemes. Gra- pheme based model is further divided in to (i) source channel model (iii) Maximum Entropy Model (iii) Conditional Ran- dom Field models and (iv) Decision Trees model. The gra- pheme-based transliteration model is sometimes referred to as the direct method because it directly transforms source lan- guage graphemes into target language graphemes without any phonetic knowledge of the source language words.

On the other hand, phoneme based models (Knight & Graehl,

1997; Lee, 1999; Jung, Hong, & Paek, 2000; Meng, Lo, Chen, & Tang, 2001) treat transliteration as a phonetic process rather than an orthographic process. Weighted Finite State Trans- ducers (WFST) and extended Markov window (EMW) are the

approaches belong to the phoneme based models. The pho- neme-based transliteration model is sometimes referred to as the pivot method because it uses source language phonemes as a pivot when it produces target language graphemes from source language graphemes. This model therefore usually needs two steps: 1) produce source language phonemes from source language graphemes and 2) produce target language graphemes from source phonemes.

As the name indicates, a hybrid model (Lee, 1999; Al-Onaizan

& Knight, 2002; Bilac & Tanaka, 2004) either use a combination

of a grapheme based model and a phoneme based model or

capture the correspondence between source graphemes and

source phonemes to produce target language graphemes. Cor-

respondence-based transliteration model was proposed by Oh

& Choi, in the year 2002. The hybrid transliteration model and correspondence-based transliteration model make use of both

source language graphemes and source language phonemes when producing target language transliterations. Figure 2 shows the general classification of machine transliteration sys- tem.

According to Internet & Mobile Association of India (IAMAI), as on September 2008 India had 45.3 million active Internet users. The government of India has taken number of initiatives to enable rural Indians to access the Internet. This signifies need for providing information in regional languages to the user. Many technical terms and proper names, such as person- al, location and organization names, are translated from one language into another language with approximate phonetic equivalents. The chapter is organized as follow: the first sec- tion gives a brief description of various approaches towards machine transliteration, followed by various transliteration attempts for Indian languages. The following sub sections de- scribe the various machine transliteration developments in Indian languages.

Literature shows that majority of work in machine translitera- tion for Indian languages were done in Hindi and Dravidian languages. The following are the noticeable developments in English to Hindi or other Indian languages to Hindi machine transliteration.

IJSER © 2011

International Journal of Scientific & Engineering Research, Volume 2, Issue 12, December-2011 4

ISSN 2229-5518

a memory-based classification framework that enables ef-

ficient estimation of these features while avoiding data sparseness problems. The experiments were both at cha- racter and transliteration unit (TU) level and reported that position - dependent source context features produce sig- nificant improvements in terms of all evaluation metrics. In this way the problem of machine transliteration was successfully implemented by adding source context mod- eling into state-of-the-art log-linear phrase-based statistic- al machine translation (PB-SMT). In their experiment, they also showed that by taking source context into ac- count, improve the system performance substantially.

v) Abbas Malik, Laurent Besacier Christian Boitet and Push- pak Bhattacharyya proposed an Urdu to Hindi Translite- ration using hybrid approach in 2009 [2]. This hybrid ap- proach combines finite-state machine (FSM) based tech- niques with statistical word language model based ap- proach and achieved better performance. The main effort of this system was to removal of diacritical marks from the input Urdu text. They report that the approach im- proved the system accuracy by 28.3% in comparison with their previous finite-state transliteration model.

vi) A Punjabi to Hindi transliteration system was developed by Gurpreet Singh Josan and Jagroop Kaur based on sta- tistical approach in 2011 [1]. The system used letter to let- ter mapping as baseline and try to find out the improve- ments by statistical methods. They used a Punjabi – Hindi parallel corpus for training and publically available SMT tools for building the system.

Afraz and Sobha developed a statistical transliteration system

using statistical approach in the year 2008. The third translit- eration system was based on Compressed Word Format (CWF) algorithm and a modified version of Levenshtein’s Edit Distance algorithm. Vijaya MS, Ajith VP, Shivapratap G, and Soman KP of Amrita University, Coimbatore proposed the remaining three English to Tamil Transliteration using differ- ent approaches.

‘t’, the most probable source language string s that gave raise to ‘t’ is decoded. The method is applied for forward transliteration from English to Hindi, Tamil, Arabic, Japa-

nese and backward transliteration from Hindi, Tamil,

Arabic, Japanese to English.

30,000 person names and 30,000 place names to train the transliteration model. They evaluated the performance of the system based on top 5 accuracy and reported 84.16% exact English to Tamil transliteration.

v) In their second attempt, the transliteration problem was modeled as classification problem and trained using C4.5 decision tree classifier, in WEKA Environment [5]. The same parallel corpus was used to extract features and these features are used to train the WEKA algorithm. The resultant rules generated by the WEKA were used to de- velop the transliteration system. They reported exact Ta- mil transliterations for 84.82% of English names.

vi) The third English to Tamil Transliteration was developed using One Class Support Vector Machine algorithm in

2010 [6]. This is a statistical based transliteration system,

where training, testing and evaluations were performed with publically available SVM tool. The experiment result

IJSER © 2011

International Journal of Scientific & Engineering Research, Volume 2, Issue 12, December-2011 5

ISSN 2229-5518

shows that, the SVM based transliteration was outper- formed over other previous methods..

Antony P J, Ajith VP, and Soman KP of Amrita University, Coimbatore proposed three different approaches for English to Kannada Transliteration. The proposed systems based on a well aligned bilingual parallel corpus of 40,000 English- Kan- nada place names.

ti-class classification approach in which j48 decision tree classifier of WEKA was used for classification [7]. The pa- rallel corpus consisting of 40,000 Indian place names was aligned properly and the extracted feature patterns were used to train the transliteration model. The accuracy of the model was tested with 1000 English names that were out of corpus. The model was evaluated by considering top 5 transliterations. The model was tested with a data set of 1000 English names and produced exact Kannada transliteration for English words with an accuracy of

81.25% when we considered only the top 1 result. We ob-

tained an accuracy of 85.88% when we considered only the top 2 results. The overall accuracy is increased to

91.32%, when we considered top 5 results.

developed using a publically available translation tool called Statistical Machine Translation (SMT) [9].The model is trained on 40,000 words containing Indian place names. During the training phase the model is trained for every class in order to distinguish between examples of this class and all the rest. The SVM binary classifier predicts all possible class labels for a given sequence of source lan- guage alphabets and selects only the most probable class labels. Also SVM generate a dictionary which consists of all possible class labels for each alphabet in the source language name. This dictionary avoids the excessive nega- tive examples while training the model and training be-

come faster. This transliteration technique was demon- strated for English to Kannada Transliteration and achieved exact Kannada transliterations for 89.27% of English names.

In the year 2009, Sumaja Sasidharan, Loganathan R, and So- man K P developed English to Malayalam Transliteration us- ing Sequence labeling approach [10]. They have used a parallel corps consisting of 20000 aligned English-Malayalam person names for training the system. The approach is very similar to earlier English to Tamil transliteration. The model produced the Malayalam transliteration of English words with an accu- racy of 90% when tested with 1000 names.

An application of transliteration was proposed by V.B. Sow- mya and Vasudeva Varmain 2009 [11]. They proposed a transliteration based text input method for Telugu, in which the user’s type Telugu using Roman script using simple edit- distance based approach. They have tested the approach with three datasets – general data, countries and places and per- son names and reported the performance of the system.

well for English to Indian languages. There are also Keyboard

layouts like Inscript and Keylekh transliteration that have been available for Indian languages. The following are the generic approach for machine transliteration for English to Indian languages.

IJSER © 2011

International Journal of Scientific & Engineering Research, Volume 2, Issue 12, December-2011 6

ISSN 2229-5518

ration while one standard and one non-standard run were

submitted for Kannada and Tamil. The reported results were as follow: For the standard run, the system demon- strated means F-Score values of 0.818 for Bengali, 0.714 for Hindi, 0.663 for Kannada and 0.563 for Tamil. The re- ported mean F-Score values of non-standard runs are

0.845 and 0.875 for Bengali non-standard run-1 and 2,

0.752 and 0.739 for Hindi non-standard run-1 and 2, 0.662 for Kannada non-standard run-1 and 0.760 for Tamil non- standard run-1. Non-Standard Run-2 for Bengali has achieved the highest score among all the submitted runs. Hindi Non-Standard Run-1 and Run-2 runs are ranked as the 5th and 6th among all submitted Runs.

posed a cross-lingual information retrieval system en- hanced with transliteration generation and mining in 2010 [14]. They proposed Hindi-English and Tamil-English cross-lingual evaluation tasks, in addition to the English- English monolingual task. They used a language model- ing based approach using query likelihood based docu- ment ranking and a probabilistic translation lexicon learned from English-Hindi and English-Tamil parallel corpora. To deal with out-of-vocabulary terms in the cross-lingual runs, they proposed two specific techniques. The first technique is to generate transliterations directly or transitively, and second technique is to mining possible transliteration equivalents from the documents retrieved in the first-pass. In their experiment they showed that both of these techniques significantly improved the over- all retrieval performance of our cross-lingual IR system. The systems achieved a peak performance of a MAP of

0.4977 in Hindi-English and 0.4145 in the Tamil-English.

2009 [2]. Using the expectation maximization algorithm, statistical alignment models maximizes the probability of the observed (source, target) word pairs and then the cha-

racter level alignments are set to maximum posterior pre-

dictions of the model. The advantage of the system is that no language-specific heuristics used in any of the mod- ules and hence it is extensible to any language-pair with least effort.

ix) Using word-origin detection and lexicon lookup method, an improvement in transliteration was proposed by Mi- tesh M. Khapra and Pushpak Bhattacharyya in 2009 [2]. The proposed improved model uses the following frame- work: (i) a word-origin detection engine (pre-processing)

IJSER © 2011

International Journal of Scientific & Engineering Research, Volume 2, Issue 12, December-2011 7

ISSN 2229-5518

(ii) a CRF based transliteration engine and (iii) a re-

ranking model based on lexiconlookup (post-processing). They applied their idea on English-Hindi and English- Kannada transliteration and reported 7.1% improvement in top-1 accuracy. The performance of the system was tested against the NEWS 2009 dataset. They submitted one standard run and one non-standard run for the Eng- lish-Hindi task and one standard run for the English- Kannada task.

‘local contexts’ and ‘phonemic information’ acquired from an English pronunciation dictionary. They evaluated the performance of the system separately for Hindi, Kannada and Tamil languages using a CRF trained on the training and development data, with the feature set U+B+T+P.

xi) Balakrishnan Vardarajan and Delip Rao proposed an ε- extension Hidden Markov Models and Weighted Trans- ducers for Machine Transliteration from English to five different languages, including Tamil, Hindi, Russian, Chinese, and Kannada in 2009 [2]. The developed method involves deriving substring alignments from the training data and learning a weighted finite state transducer from these alignments. They have defined a ǫ-extension Hid- den Markov Model to derive alignments between training pairs and a heuristic to extract the substring alignments. The performance of the transliteration system was eva- luated based on the standard track data provided by the NEWS 2009. The main advantage of the proposed ap- proach is that the system is language agnostic and can be trained for any language pair within a few minutes on a single core desktop computer.

NETEs from large comparable corpora: exhaustiveness (in

mining sparse NETEs), computational efficiency (in scal- ing on corpora size), language independence (in being applicable to many language pairs) and linguistic frugali- ty (in requiring minimal external linguistic resources). In their experiment they showed that the performance of the proposed method was significantly better than a state-of- the-art baseline and scaled to large comparable corpora.

transliteration system among Indian languages using WX Notation in 2010 [16]. They have proposed a new transli- teration algorithm which is based on Unicode transforma- tion format of an Indian language. They tested the per- formance of the proposed system on a large corpus hav- ing approximately 240k words in Hindi to other Indian languages. The accuracy of the system is based on the phonetic pronunciations of the words in target and source language and this was obtained from Linguistics having knowledge of both the languages. From the experiment, they found that the time efficiency of the system is better and it takes less than 0.100 seconds for transliterating 100

Devanagari (Hindi) words into Malayalam when run on an Intel Core 2 Duo, 1.8 GHz machine in Fedora.

In this paper work, we have presented a survey on develop- ments of different machine transliteration systems for Indian languages. Additionally we tried to give a brief idea about the existing approaches that have been used to develop machine transliteration tools. From the survey I found out that almost all existing Indian language machine transliteration systems are based on statistical and hybrid approach. The main effort and challenge behind each and every development is to design the system by considering the agglutinative and morphologi- cal rich features of language.

We acknowledge our sincere gratitude to Mr. Benjamin Peter (Assistant Professor, MBA Dept, St.Joseph Engineering College, Mangalore, India) and Mr. Rakesh Naik (Assistant Professor, MBA Dept, St.Joseph Engineering College, Mangalore, India) for their valuable support regarding proof reading and correction of this survey paper.

IJSER © 2011

International Journal of Scientific & Engineering Research, Volume 2, Issue 12, December-2011 8

ISSN 2229-5518

[1] Gurpreet Singh Josan & Jagroop Kaur (2011)’ Punjabi to Hindi Statistical Machine Translaiteration’, International Journal of Information Technology and Knowledge Management July-December 2011, Volume 4, No. 2, pp.

459-463.

[2] Amitava Das, Asif Ekbal, Tapabrata Mandal and Sivaji Bandyopadhyay (2009), ‘English to Hindi Machine Transliteration System at NEWS’, Pro- ceedings of the 2009 Named Entities Workshop, ACL-IJCNLP 2009, page

80-83, Suntec, Singapore.

[3] Taraka Rama, Karthik Gali (2009), ‘Modeling Machine Transliteration as a Phrase Based Statistical Machine Translation Problem’, Language Technol- ogies Research Centre, IIIT, Hyderabad, India.

[4] Vijaya MS, Ajith VP, Shivapratap G, and Soman KP (2008), ‘Sequence labeling approach for English to Tamil Transliteration using Memory based learning’, In Proceedings of Sixth International Conference on Natural Lan- guage processing.

[5] Vijaya MS, Ajith VP, Shivapratap G, and Soman KP (2009), ‘English to Tamil Transliteration using WEK’,International Journal of Recent Trends in Engineering, Vol. 1, No. 1.

[6] Vijaya M. S., Shivapratap G., Soman K. P (2010), ‘English to Tamil Trans- literation using One Class Support Vector Machine’, International Journal of Applied Engineering Research, Volume 5, Number 4, 641-652.

[7] Antony P J, Ajith V P and Soman K P (2010), ‘Feature Extraction Based English to Kannada Transliteration’, Third International Conference on Se- mantic E-business and Enterprise Computing, SEEC-2010.

[8] Antony P J, Ajith V P and Soman K P (2010), ‘Kernel Method for English to Kannada Transliteration’, International Conference on-Recent Trends in Information, Telecommunication and Computing (ITC 2010), Paper is arc- hived in the IEEE Xplore and IEEE CS Digital Library.

[9] Antony P J, Ajith V P and Soman K P (2010), ‘Statistical Method for Eng- lish to Kannada Transliteration’, Lecturer Notes in Computer Science- Communications in Computer and Information Science (LNCS-CCIS), Vo- lume 70, 356-362, DOI: 10.1007/978-3-642-12214-9_57.

[10] Sumaja Sasidharan, Loganathan R, and Soman K P (2009), ‘English to Malayalam Transliteration using Sequence labeling approach’,. International Journal of Recent Trends in Engineering, Vol. 1, No. 2.

[11] V.B. Sowmya, Vasudeva Varma in (2009), ‘Transliteration Based Text Input Methods for Telugu’, 22and International Conference on Computer Processing for Oriental Languages" (ICCPOL-2009), Hongkong.

[12] Harshit Surana and Anil Kumar Singh, ‘A More Discerning and Adaptable Multilingual Transliteration Mechanism for Indian Languages’, Language Tech. Research Centre IIIT, Hyderabad, India.

[13] Amitava Das, Asif Ekbal, Tapabrata Mandal and Sivaji Bandyopadhyay (2010), ‘A transliteration technique based on orthographic rules and pho- neme based approach’, NEWS 2010.

[14] K Saravaran Raghavendra Udupa, A Kumaran (2010), ‘Crosslingual Infor- mation Retrieval System Enhanced with Transliteration Generation and Mining’, Microsoft Research India, Bangalore , India.

[15] Prashanth Balajapally, Phanindra Bandaru, Madhavi Ganapathiraju N. Bala- krishnan and Raj Reddy , ‘Multilingual Book Reader: Transliteration, Word- to-Word Translation and Full-text Translation’.

[16] Rohit Gupta, Pulkit Goyal and Sapan Diwakar (2010), ‘Transliteration among Indian Languages using WX Notation by. Semantic Approaches in Natural Language Processing’, Proceedings of the Conference on Natural Language Processing 2010. Pages 147-151, Universaar, Universitätsverlag des Saarlandes Saarland University Press, Presses universitaires de la Sarre.

IJSER © 2011