International Journal of Scientific & Engineering Research, Volume 4, Issue 6, June-2013 85

ISSN 2229-5518

DIGITAL IMAGE PROCESSING

Nilesh Ingale1, Ashish Borkar2

1,3rd year EXTC Engg., DES’s COE & T, Dhamangaon Rly.

S.G.B.A.U Amravati, Maharashtra, India.

2,3rd year EXTC Engg., DES’s COE & T, Dhamangaon Rly.

S.G.B.A.U Amravati, Maharashtra, India.

1. ABSTRACT

Image enhancement techniques are used to emphasize and sharpen image features for display and analysis. Image enhancement is the process of applying these techniques to facilitate the development of a solution to a computer imaging problem. Consequently, enhancement methods are application specific and are often developed empirically. The techniques include point operations, where each pixel is modified according to an equation that does not depend on other pixel values; mask operations, where each pixel is modified according to the values of its neighbors; and global operations, where all the pixel values in the image are taken into consideration. Spatial domain processing methods include all three types, but frequency domain operations, by the nature of the frequency transforms, are global operations. Frequency domain operations can, however, become "mask operations," based only on a local neighborhood, by performing the transform on small image blocks instead of the entire image.

Enhancement is used as a preprocessing step in some computer vision applications to ease the vision task, for example, to enhance the edges of an object to facilitate guidance of a robotic gripper. Enhancement is also used as a preprocessing step in applications where human viewing of an image is required before further processing. Image enhancement is also used for postprocessing to generate a visually desirable image. Overall, image enhancement methods are used to make images look better. So “Image Enhancement Is As Much An Art As It Is A Science.”

2. INTRODUCTION

Pictures are the most common and convenient means of conveying or transmitting information. A picture is worth a thousand words. Pictures concisely convey information about positions, sizes and inter-relationships between objects. They portray spatial information that we can recognize as objects. Human beings are good at deriving information from such images because of our innate visual and mental abilities. About 75% of the information received by humans is in pictorial form. In the present context, we consider the analysis of pictures that employ an overhead perspective, including radiation not visible to the human eye. Our discussion therefore focuses on the analysis of remotely sensed images. These images are represented in digital form. When represented as numbers, brightness can be added, subtracted, multiplied, divided and, in general, subjected to statistical manipulations that are not possible if an image is presented only as a photograph. Although digital analysis of remotely sensed data dates from the early days of remote sensing, the launch of the first Landsat earth observation satellite in 1972 began an era of increasing interest in machine processing (Campbell, 1996 and Jensen, 1996). Previously, digital remote sensing data could be analyzed only at specialized remote sensing laboratories. Specialized equipment and trained personnel necessary to conduct routine machine analysis of data were not widely available, in part because of the limited availability of digital remote sensing data and a lack of appreciation of their qualities.

3. What Is an Image?

“A digital image is a representation of a two-dimensional image as an array of a finite set of digital values called picture elements or pixels.”

Pixel values typically represent gray levels, colors, heights, opacities etc. Each pixel is a number, represented as a DN (Digital Number), that depicts the average radiance of a relatively small area within a scene. DN values normally range from 0 to 255. The size of this area affects the reproduction of details within the scene. As the pixel size is reduced, more scene detail is preserved in the digital representation.
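The effect of pixel size on scene detail can be illustrated with a minimal sketch. It assumes the scene is available as a fine grid of radiance values and forms each DN as the average over a square block of that grid; the function name `block_average` and the sample scene are hypothetical and serve only this illustration.

```python
def block_average(scene, block):
    """Average non-overlapping block x block areas of `scene` into one DN each."""
    rows, cols = len(scene), len(scene[0])
    out = []
    for r in range(0, rows - rows % block, block):
        row = []
        for c in range(0, cols - cols % block, block):
            total = sum(scene[r + i][c + j]
                        for i in range(block) for j in range(block))
            # Round the mean radiance and clamp it to the usual 0..255 DN range.
            row.append(max(0, min(255, round(total / (block * block)))))
        out.append(row)
    return out


# Hypothetical 4x4 scene containing a bright stripe one cell wide.
scene = [
    [0, 200, 0, 0],
    [0, 200, 0, 0],
    [0, 200, 0, 0],
    [0, 200, 0, 0],
]

fine = block_average(scene, 1)    # small pixels: the stripe keeps its full contrast
coarse = block_average(scene, 2)  # large pixels: the stripe is averaged with background
```

With the smaller pixel size the stripe is reproduced exactly, while with the larger pixel size it is blended into its surroundings and appears at half its original brightness.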

4. What Is Image Processing?

Image Processing and Analysis can be defined as the "act of examining images for the purpose of identifying objects and judging their significance." The image analyst studies the remotely sensed data and attempts, through a logical process, to detect, identify, classify, measure and evaluate the significance of physical and cultural objects, their patterns and spatial relationships.

IJSER © 2013 http://www.ijser.org

Digital Image Processing is a collection of techniques for the manipulation of digital images by computers. The raw data received from the imaging sensors on satellite platforms contain flaws and deficiencies. To overcome these flaws and deficiencies and recover the original character of the data, the data must undergo several steps of processing. These steps vary from image to image depending on the image format, the initial condition of the image, the information of interest and the composition of the image scene.

5. Steps In Image Processing

Digital Image Processing undergoes three general steps:

• Image Acquisition

• Preprocessing

• Segmentation

Digital Image Acquisition

The general goal of image acquisition and processing is to bring pictures into the domain of the computer, where they can be displayed and then manipulated and altered for enhancement.

Four processes are involved in image acquisition:

• Input

• Display

• Manipulation

• Output

‘The transformation of an optical image into an array of numerical data which may be manipulated by a computer, so that the overall aim of machine vision may be achieved.’

In order to achieve this aim, three major issues must be tackled:

• Representation

• Sensing

• Digitizing
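The transformation of an optical image into an array of numbers can be sketched as sampling followed by quantization. The example below is only an illustration under stated assumptions: the scene is modelled as a hypothetical function `ramp` that returns brightness in the range 0 to 1 for normalized coordinates, and `digitize` is a name invented here.

```python
def digitize(radiance, width, height, levels=256):
    """Sample a continuous radiance function on a grid and quantize it to integers."""
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            # Sensing: evaluate the scene at the centre of each grid cell,
            # using coordinates normalized to the range [0, 1).
            value = radiance((x + 0.5) / width, (y + 0.5) / height)
            # Digitizing: clamp to [0, 1] and map to an integer in 0..levels-1.
            value = min(max(value, 0.0), 1.0)
            row.append(min(levels - 1, int(value * levels)))
        image.append(row)
    return image


def ramp(u, v):
    """Hypothetical scene: brightness increases linearly from left to right."""
    return u


image = digitize(ramp, width=4, height=2)
```

The resulting array is the representation on which all later processing steps operate; a larger `width` and `height` correspond to finer sampling, and a larger `levels` to finer quantization.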

Image preprocessing

Image preprocessing seeks to modify and prepare the pixel values of a digitized image to produce a form that is more suitable for subsequent operations within the generic model. There are two major branches of image preprocessing, namely

• Image Enhancement

• Image Restoration

Image enhancement attempts to improve the quality of an image or to emphasize particular aspects within the image. Such an objective usually implies a degree of subjective judgment about the resulting quality and will depend on the operation and the application in question.

The result may be an image that is quite different from the original, and some aspects may have to be deliberately sacrificed in order to improve others.

The aim of image restoration is to recover the original image after it has been degraded by ‘known’ effects such as geometric distortion within a camera system, or blur caused by poor optics or movement. In all cases a mathematical or statistical model of the degradation is required so that restorative action can be taken. Both types of operation take the acquired image array as input and produce a modified image array as output, and they are thus representative of pure ‘image processing’. Many of the common image processing operations are essentially concerned with the application of linear filtering to the original image ‘signal’.
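Linear filtering of this kind can be sketched as a weighted sum over a 3x3 neighborhood. The example below is a minimal illustration, not a method taken from this paper: the names `filter3x3` and `MEAN_KERNEL` are hypothetical, border pixels are simply copied, and the kernel is applied without flipping (which makes no difference for the symmetric mean kernel used here).

```python
def filter3x3(image, kernel):
    """Apply a 3x3 linear filter; border pixels are copied unchanged."""
    rows, cols = len(image), len(image[0])
    out = [row[:] for row in image]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            acc = 0.0
            for i in range(3):
                for j in range(3):
                    acc += kernel[i][j] * image[r + i - 1][c + j - 1]
            # Round the weighted sum and clamp it to the 0..255 range.
            out[r][c] = max(0, min(255, round(acc)))
    return out


# Mean (box) kernel: every neighbour contributes equally.
MEAN_KERNEL = [[1 / 9] * 3 for _ in range(3)]

# Hypothetical image with one noisy bright pixel.
noisy = [
    [10, 10, 10, 10],
    [10, 100, 10, 10],
    [10, 10, 10, 10],
]
smoothed = filter3x3(noisy, MEAN_KERNEL)
```

Input and output are both image arrays, as the text describes; the isolated bright pixel is spread over its neighborhood and its peak value is strongly reduced.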

• Image Enhancement

The term enhancement is used to mean the alteration of the appearance of an image in such a way that the information contained in that image is more readily interpreted visually in terms of a particular need.

Enhancement – Improving Quality of Image

Image Enhancement Techniques

Image enhancement techniques are used to make satellite imagery more informative and to help achieve the goal of image interpretation. Because an image enhancement technique often drastically alters the original numeric data, it is normally used only for visual (manual) interpretation and not for further numeric analysis. Common enhancements include image reduction, image rectification, image magnification, transect extraction, contrast adjustment, band ratioing, spatial filtering, Fourier transformation, principal component analysis and texture transformation.

The enhancement techniques depend mainly upon two factors:

• The digital data

• The objective of interpretation

The image enhancement techniques are applied either to single-band images or separately to the individual bands of a multiband image set.
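As one illustration of band-by-band enhancement, the sketch below applies a linear contrast stretch to each band independently. The choice of a min-max stretch, the function names `stretch_band` and `stretch_image`, and the sample data are assumptions made for this example only.

```python
def stretch_band(band, out_min=0, out_max=255):
    """Linearly map the band's own min..max range onto out_min..out_max."""
    flat = [v for row in band for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A constant band has no contrast to stretch; return a copy.
        return [row[:] for row in band]
    scale = (out_max - out_min) / (hi - lo)
    return [[round(out_min + (v - lo) * scale) for v in row] for row in band]


def stretch_image(bands):
    """Enhance each band of a multiband image separately."""
    return [stretch_band(band) for band in bands]


# Hypothetical two-band image: a low-contrast band and a constant band.
bands = [
    [[50, 60], [70, 100]],
    [[10, 10], [10, 10]],
]
stretched = stretch_image(bands)
```

Because each band is stretched using its own minimum and maximum, the relative brightness between bands is not preserved, which is one reason such results are better suited to visual interpretation than to further numeric analysis.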

Image Segmentation Methods

Histogram-based methods

Edge detection

Clustering methods

Split-and-merge methods


Histogram :-

A histogram is one of the simplest means of analyzing an image. An image histogram maintains a count of how often each color level occurs. When graphed, a histogram gives a good representation of the color spread of the image. Histograms can also be used to equalize the image, and they provide a large number of statistics about it. The following methods manipulate histograms in a consistent and meaningful manner.

1. Histogram Equalization

2. Histogram Specification

3. Local Enhancement

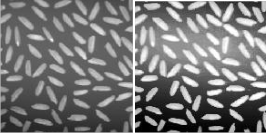
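Counting how often each level occurs takes only a few lines. The sketch below assumes a gray-level image stored as a list of rows of integers; the function name `histogram` and the four-level sample image are hypothetical.

```python
def histogram(image, levels=256):
    """Count how many pixels take each gray level 0..levels-1."""
    counts = [0] * levels
    for row in image:
        for value in row:
            counts[value] += 1
    return counts


# Hypothetical image with only four possible gray levels.
image = [[0, 0, 1], [2, 2, 2]]
counts = histogram(image, levels=4)

# Simple statistics can be derived directly from the counts.
total = sum(counts)
mean_level = sum(level * n for level, n in enumerate(counts)) / total
```

Here `counts[k]` is the number of pixels with gray level `k`, and statistics such as the mean level follow from the counts without revisiting the image.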

Histogram Equalization :-

The underlying principle of histogram equalization is simple: it is assumed that each level in the displayed image should contain an approximately equal number of pixels, so that the histogram of the displayed values is almost uniform. The objective of histogram equalization is to spread the range of pixel values present in the input image over the full range of the display device. The results of histogram equalization are similar to those of contrast stretching but offer the advantage of full automation.

Original image and image after histogram equalization:

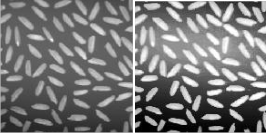
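A common way to realize histogram equalization is to map each gray level through the cumulative histogram. The sketch below uses the mapping s = round((L - 1) · cdf(k) / N), where L is the number of levels and N the number of pixels; this particular formula, the function name `equalize` and the sample image are assumptions for illustration rather than details given in this paper.

```python
def equalize(image, levels=256):
    """Map gray levels through the cumulative histogram to spread them out."""
    counts = [0] * levels
    for row in image:
        for value in row:
            counts[value] += 1
    total = sum(counts)

    # Cumulative count of pixels with gray level <= k.
    cdf, running = [], 0
    for n in counts:
        running += n
        cdf.append(running)

    # Look-up table: scale the cumulative fraction to the full output range.
    lut = [round((levels - 1) * c / total) for c in cdf]
    return [[lut[value] for value in row] for row in image]


# Hypothetical low-contrast image whose values occupy only levels 100..103.
image = [[100, 101, 101, 102, 103]]
equalized = equalize(image)
```

The four closely spaced input levels are spread across the 0 to 255 range without any user-chosen parameters, which reflects the "full automation" mentioned above.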

Histogram Specification :-

Histogram equalization does not allow interactive image enhancement and generates only one result: an approximation to a uniform histogram. Sometimes, though, we need to be able to specify particular histogram shapes capable of highlighting certain gray-level ranges.

[Figure: original image and image after histogram specification]
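Histogram specification can be sketched by matching cumulative histograms: each input level is mapped to the smallest output level whose cumulative fraction in the desired histogram reaches the cumulative fraction of the input level. The function names `cumulative` and `specify` and the four-level sample data are hypothetical, and this matching rule is one common choice, not necessarily the one the authors had in mind.

```python
def cumulative(counts):
    """Running sum of a histogram."""
    out, running = [], 0
    for n in counts:
        running += n
        out.append(running)
    return out


def specify(image, target_hist):
    """Remap gray levels so the image histogram approximates target_hist."""
    levels = len(target_hist)
    counts = [0] * levels
    for row in image:
        for value in row:
            counts[value] += 1
    src = cumulative(counts)
    tgt = cumulative(target_hist)
    n_src, n_tgt = src[-1], tgt[-1]

    lut, z = [], 0
    for k in range(levels):
        # Advance to the smallest target level whose cumulative fraction
        # reaches that of input level k. Cross-multiplying keeps the
        # comparison in exact integer arithmetic.
        while z < levels - 1 and tgt[z] * n_src < src[k] * n_tgt:
            z += 1
        lut.append(z)
    return [[lut[value] for value in row] for row in image]


# Hypothetical dark image (levels 0 and 1) and a desired histogram that
# places all pixels in the bright levels 2 and 3.
image = [[0, 0, 1, 1]]
target_hist = [0, 0, 1, 1]
matched = specify(image, target_hist)
```

Changing `target_hist` changes the result, which is what makes this approach interactive in a way that plain equalization is not.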

Local Enhancement :-

Local enhancement is used when it is necessary to enhance details over small areas. The idea is to devise transformation functions based on the gray-level distribution in the neighborhood of every pixel. The procedure is: define a square (or rectangular) neighborhood and move the center of this area from pixel to pixel. At each location, the histogram of the points in the neighborhood is computed and either a histogram equalization or a histogram specification transformation function is obtained. This function is finally used to map the gray level of the pixel centered in the neighborhood. The center is then moved to an adjacent pixel location and the procedure is repeated.
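The sliding-neighborhood procedure can be sketched as follows, using histogram equalization as the local transformation. This is a straightforward, unoptimized illustration under stated assumptions: the name `local_equalize` is hypothetical, the neighborhood is a square of side 2·radius + 1, and it is clipped at the image border.

```python
def local_equalize(image, radius=1, levels=256):
    """Equalize each pixel using only the histogram of its neighborhood."""
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Collect the neighborhood, clipped at the image border.
            window = [
                image[i][j]
                for i in range(max(0, r - radius), min(rows, r + radius + 1))
                for j in range(max(0, c - radius), min(cols, c + radius + 1))
            ]
            # Local cumulative histogram evaluated at the centre pixel's level:
            # the number of neighbors that are not brighter than the centre.
            rank = sum(1 for v in window if v <= image[r][c])
            # Only the centre pixel is mapped; the window then moves on.
            out[r][c] = round((levels - 1) * rank / len(window))
    return out


# Hypothetical image with a single slightly brighter pixel in the middle.
image = [
    [10, 10, 10],
    [10, 20, 10],
    [10, 10, 10],
]
local = local_equalize(image, radius=1)
```

Recomputing the neighborhood at every pixel is costly for large windows; practical implementations usually update the local histogram incrementally as the window moves.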

Edge Detection

Edges characterize the boundaries of objects in an image, and detecting them is a fundamental problem in image processing.

Edges are areas with strong intensity contrasts, that is, a jump in intensity from one pixel to the next.

Edge detected image

• Reduces significantly the amount of data,

• Filters out useless information,

• Preserves the important structural properties

To determine whether a point is an edge point:

• Check that the transition in grey level associated with the point is significantly stronger than the background at that point.

• Use a threshold to determine whether a value is “significant” or not.

Note that the point’s two-dimensional first-order derivative must be greater than the specified threshold.

Even fairly little noise can have a significant impact on the two key derivatives (first and second) used for edge detection in images.

Image smoothing should therefore be a serious consideration prior to the use of derivatives in applications where noise is likely to be present.
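The thresholded first-derivative test can be sketched with the Sobel operator, which is one common approximation of the two-dimensional gradient; the paper does not name a specific operator, so this choice, the function names and the threshold value are assumptions. Smoothing is omitted to keep the example short, although on noisy data it would normally be applied first.

```python
import math

# Sobel kernels approximating the horizontal and vertical derivatives.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def apply3x3(image, kernel, r, c):
    """Weighted sum of the 3x3 neighborhood centred on (r, c)."""
    return sum(kernel[i][j] * image[r + i - 1][c + j - 1]
               for i in range(3) for j in range(3))


def sobel_edges(image, threshold):
    """Mark interior pixels whose gradient magnitude exceeds the threshold."""
    rows, cols = len(image), len(image[0])
    edges = [[0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = apply3x3(image, SOBEL_X, r, c)
            gy = apply3x3(image, SOBEL_Y, r, c)
            if math.hypot(gx, gy) > threshold:
                edges[r][c] = 1
    return edges


# Hypothetical image with a vertical step from dark (0) to bright (100).
step = [[0, 0, 100, 100] for _ in range(4)]
edges = sobel_edges(step, threshold=200)
```

The output is a binary map that keeps only the pixels next to the intensity jump, which illustrates how an edge-detected image discards most of the data while preserving structure. Border pixels are left unmarked because their 3x3 neighborhood is incomplete.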

6. Need for Edge Detection

1. Digital artists use it to create image outlines.

2. The output of an edge detector can be added back to an original image to enhance the edges.

3. Edge detection is often the first step in image segmentation.

4. Edge detection is also used in image registration, the alignment of two images that may have been acquired at separate times or from different sensors.
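Adding edge-detector output back to the original image, as mentioned in the list above, can be sketched in a few lines. The function name `enhance_edges`, the `weight` parameter and the sample values are hypothetical; the edge-strength array is assumed to come from some edge detector and to have the same size as the image.

```python
def enhance_edges(image, edge_strength, weight=1.0):
    """Add weighted edge strength to the image and clamp to 0..255."""
    return [
        [max(0, min(255, round(p + weight * e))) for p, e in zip(prow, erow)]
        for prow, erow in zip(image, edge_strength)
    ]


# Hypothetical image and edge-strength map of the same size.
image = [[100, 100], [100, 250]]
edge_strength = [[0, 20], [20, 30]]
sharpened = enhance_edges(image, edge_strength)
```

Pixels with no edge response are unchanged, while pixels on edges are brightened, with values clamped so that they stay within the displayable range.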

7. APPLICATIONS

1. Crime prevention

2. The military

3. Medical diagnosis

4. Graphical Information & Remote sensing systems

5. Education & Training

6. Intellectual Property


8. ADVANTAGES

1. Robustness to high quality lossy image compression.

2. Automatic discrimination between malicious and innocuous manipulations.

3. Controllable visual deterioration of the VS sequence by varying the watermark embedding power.

4. Watermark embedding and detection can be performed in real time for digital data.

9. CONCLUSION

Image processing plays a vital role in the 21st century, which is emerging with various research programs & advances for a more sophisticated & enjoyable life.

Image enhancement is important in entertainment, where it involves the enhancement of photographs and special effects in movies. Image processing is thus used in a wide range of computer applications. It provides automated detection of tumors in the body and automatic target detection in military applications, which yields more accurate results. This is a rapidly growing field & has a very wide scope.
