International Journal of Scientific & Engineering Research, Volume 3, Issue 2, February 2012

ISSN 2229-5518

Off-line Signature Verification Using Neural Network

Ashwini Pansare, Shalini Bhatia

ognition, fingerprint recognition, iris scanning and retina scanning. Voice recognition and signature verification are the most widely known among the non-vision based ones. As signatures continue to play an important role in financial, commercial and legal transactions, truly secure authentication becomes more and more crucial. A signature by an authorized person is considered to be the “seal of approval” and remains the most preferred means of authentication. The method presented in this paper consists of image preprocessing, geometric feature extraction, neural network training with the extracted features, and verification. The verification stage applies the extracted features of a test signature to a trained neural network, which classifies it as genuine or forged.


As the signature is the primary mechanism for both authentication and authorization in legal transactions, the need for efficient automated solutions for signature verification has increased [6]. Unlike a password, PIN, PKI or key cards (identification data that can be forgotten, lost, stolen or shared), the captured values of the handwritten signature are unique to an individual and virtually impossible to duplicate. Signature verification is natural and intuitive. The technology is easy to explain and trust. The primary advantage that signature verification systems have over other types of technologies is that signatures are already accepted as the common method of identity verification [1].

A signature verification system and the techniques used to solve this problem can be divided into two classes: on-line and off-line [5]. The on-line approach uses an electronic tablet and a stylus connected to a computer to extract information about a signature, and takes dynamic information such as pressure, velocity and speed of writing for verification. Off-line signature verification involves less electronic control and uses signature images captured by a scanner or camera. An off-line signature verification system uses features extracted from the scanned signature image. The features used for off-line signature verification are much simpler: only the pixel image needs to be evaluated. However, off-line systems are difficult to design, as many desirable characteristics such as the order of strokes, the velocity and other dynamic information are not available in the off-line case [3,4]. The verification process has to rely wholly on the features that can be extracted from the trace of the static signature image.

Contribution:

In this paper we present a model in which a neural network classifier is used for verification. Signatures from the database are preprocessed prior to feature extraction. The geometric features are extracted from the preprocessed signature image. These extracted features are then used to train a neural network. In the verification stage, preprocessing and feature extraction are performed on the test signatures. The extracted features are then applied as input to the trained neural network, which classifies each test signature as genuine or forged.

Organization of the paper: The rest of the paper is organized as follows. In section 2, the signature verification model is described. In section 3, the algorithm is presented. Results generated by the system are presented in section 4, and the paper is concluded in section 5.

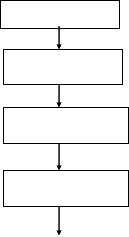

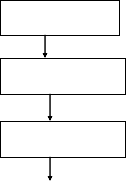

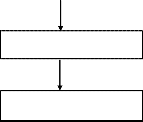

In this section, the block diagram of the system is discussed. Fig. 1 gives the block diagram of the proposed signature verification system, which verifies the authenticity of a given signature of a person.

The design of the system is divided into two stages: 1) Training stage 2) Testing stage

The training stage consists of four major steps: 1) Retrieval of a signature image from a database 2) Image preprocessing 3) Feature extraction 4) Neural network training

The testing stage consists of five major steps: 1) Retrieval of a signature to be tested from a database 2) Image preprocessing 3) Feature extraction 4) Application of extracted features to a trained neural network 5) Checking the output generated by the neural network.

IJSER © 2012


Fig. 1 Block diagram of offline signature verification using NN (blocks: sample database, input signature, preprocessing, feature extraction, verification, verification result; shown for the training stage and the testing stage)

Fig. 2 shows a signature image taken from a database.

Fig. 2 Signature image from a database

The preprocessing step is applied in both the training and testing phases. Signatures are scanned in gray scale. The purpose of this phase is to make the signature standard and ready for feature extraction. The preprocessing stage improves the quality of the image and makes it suitable for feature extraction [2]. The preprocessing stage includes:

2.1.1 Converting image to binary

A gray scale signature image is converted to binary to make feature extraction simpler.

2.1.2 Image resizing

The [...] are in different sizes, so to [...] is performed, which will [...] 256*256 as shown in Fig. 3.

[...]e 256*256

2.1.3 Thinning

Thin[...]ures invariant to imag[...] and paper. Thinning mean[...] to strokes that are singl[...]ature image.

Fig. 4 Signature image after thinning

2.1.4 Bounding box of the signature:

In the signature image, a rectangle encompassing the signature is constructed. This reduces the area of the signature image used for further processing and saves time. Fig. 5 shows a signature enclosed in a bounding box.

Fig. 5 Signature image with a bounding box
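The binarization, resizing and bounding-box steps can be illustrated with a minimal pure-Python sketch. The paper does not state how these steps were implemented, so the fixed threshold of 128, the nearest-neighbour resizing and all function names below are assumptions made for illustration only; thinning is omitted.

```python
def binarize(gray, threshold=128):
    """Map a grayscale image (rows of 0-255 ints) to 1 = ink, 0 = background.
    The threshold value is an assumption, not taken from the paper."""
    return [[1 if px < threshold else 0 for px in row] for row in gray]


def resize_nearest(img, height, width):
    """Nearest-neighbour resize of a 2-D list to height x width (assumed method)."""
    src_h, src_w = len(img), len(img[0])
    return [[img[r * src_h // height][c * src_w // width] for c in range(width)]
            for r in range(height)]


def bounding_box(binary):
    """Return (top, bottom, left, right), inclusive, of all ink pixels."""
    rows = [r for r, row in enumerate(binary) if any(row)]
    cols = [c for c in range(len(binary[0])) if any(row[c] for row in binary)]
    if not rows:
        raise ValueError("image contains no ink pixels")
    return rows[0], rows[-1], cols[0], cols[-1]


def crop(binary, box):
    """Keep only the pixels inside the bounding box."""
    top, bottom, left, right = box
    return [row[left:right + 1] for row in binary[top:bottom + 1]]


# Tiny 4x4 "scan": 255 is paper, 0 is ink.
gray = [[255, 255, 255, 255],
        [255,   0,   0, 255],
        [255, 255,   0, 255],
        [255, 255, 255, 255]]
binary = binarize(gray)
resized = resize_nearest(binary, 8, 8)   # the paper resizes to 256*256
box = bounding_box(resized)
cropped = crop(resized, box)
```

In this toy case each source pixel becomes a 2x2 block, so the bounding box covers rows 2 to 5 and columns 2 to 5 of the resized image.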


The choice of a powerful set of features is crucial in signature verification systems. The features extracted in this phase are used to create a feature vector. We use a feature vector of dimension 60 to uniquely characterize a candidate signature. These are geometrical features, which means they are based on the shape and dimensions of the signature image. The features are extracted as follows:

2.2.1 Geometric centre:

Traverse the signature image to find the point (row and column number in the image) at which the number of black pixels is half of the total number of black pixels in the image [7].

Fig. 6 Geometric centre in the signature image, represented by a red marker (*).

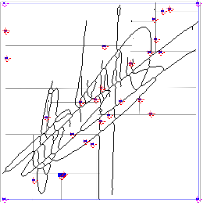
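One way to read the definition of the geometric centre is as the row and the column at which the running count of black pixels first reaches half of the total. The sketch below follows that reading; it is an interpretation of the one-sentence description, and the function name and the 1 = black pixel convention are assumptions.

```python
def geometric_centre(binary):
    """Return (row, col) at which the running count of black pixels
    first reaches half of the total (1 = black pixel, 0 = background)."""
    total = sum(map(sum, binary))
    if total == 0:
        raise ValueError("image contains no black pixels")

    def first_index_reaching_half(counts):
        running = 0
        for index, count in enumerate(counts):
            running += count
            if 2 * running >= total:
                return index

    row_counts = [sum(row) for row in binary]          # black pixels per row
    col_counts = [sum(col) for col in zip(*binary)]    # black pixels per column
    return (first_index_reaching_half(row_counts),
            first_index_reaching_half(col_counts))


image = [[1, 1, 0, 0],
         [0, 0, 0, 0],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]
centre = geometric_centre(image)   # (2, 2): half of the 6 pixels is reached at row 2 and column 2
```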

2.2.2 Feature extracted using vertical splitting:

Split the signature image with a vertical line passing through its geometric centre to get the left and right parts of the signature. Find the geometric centre of the left and right parts. Divide the left part with a horizontal line passing through its geometric centre to get its top and bottom parts, and find the geometric centres of these two parts. Similarly, split the right part with a horizontal line at its geometric centre to get its top and bottom parts, and find their geometric centres. Each part of the image is split again using the same method to obtain thirty vertical feature points, as shown in Figure 7.


2.2.3 Feature extracted using horizontal splitting

Split the signature image with a horizontal line passing through its geometric centre to get the top and bottom parts of the signature. Find the geometric centre of the top and bottom parts. Divide the top part with a vertical line passing through its geometric centre to get its left and right parts, and find the geometric centres of these two parts. Similarly, split the bottom part with a vertical line at its geometric centre to get its left and right parts, and find their geometric centres. Each part of the image is split again using the same method to obtain thirty horizontal feature points, as shown in Figure 8.

Fig. 8 Signature image after horizontal splitting
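The two splitting procedures differ only in the direction of the first cut, so a single recursive routine can sketch both. The paper does not say how many levels of splitting give thirty points; the sketch assumes four levels with alternating cut directions, which yields 2 + 4 + 8 + 16 = 30 centres. The function names, the convention that the centre row or column goes to the second part, and the midpoint fallback for parts without black pixels are all assumptions.

```python
def centre(binary, top, bottom, left, right):
    """Geometric centre of the window rows [top, bottom), cols [left, right).
    Falls back to the window midpoint if the window has no black pixels."""
    row_counts = [sum(binary[r][left:right]) for r in range(top, bottom)]
    col_counts = [sum(binary[r][c] for r in range(top, bottom))
                  for c in range(left, right)]
    total = sum(row_counts)
    if total == 0:
        return (top + bottom) // 2, (left + right) // 2

    def half_index(counts):
        running = 0
        for index, count in enumerate(counts):
            running += count
            if 2 * running >= total:
                return index

    return top + half_index(row_counts), left + half_index(col_counts)


def split_features(binary, first_cut="vertical", depth=4):
    """Collect the centres of all parts produced by `depth` levels of
    alternating vertical/horizontal cuts through each part's centre."""
    points = []

    def recurse(top, bottom, left, right, cut, level):
        if level == depth:
            return
        row, col = centre(binary, top, bottom, left, right)
        if cut == "vertical":
            parts = [(top, bottom, left, col), (top, bottom, col, right)]
            next_cut = "horizontal"
        else:
            parts = [(top, row, left, right), (row, bottom, left, right)]
            next_cut = "vertical"
        for part in parts:
            points.append(centre(binary, *part))   # one feature point per part
            recurse(*part, next_cut, level + 1)

    recurse(0, len(binary), 0, len(binary[0]), first_cut, 0)
    return points


# Toy 16x16 image with ink on both diagonals.
image = [[1 if c == r or c == 15 - r else 0 for c in range(16)] for r in range(16)]
vertical_points = split_features(image, "vertical")       # 30 (row, col) points
horizontal_points = split_features(image, "horizontal")   # 30 (row, col) points
```

Coordinates are kept in the frame of the full image, so the points from different parts can be combined directly into one feature vector.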

2.2.4 Creation of feature vector:

A feature vector of size 60 is formed from the 30 features extracted by vertical splitting and the 30 features extracted by horizontal splitting.

The 60 extracted feature points are normalized to bring them into the range 0 to 1. These normalized features are applied as input to the neural network.
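The normalization method is not specified. A minimal sketch, assuming each feature point is a (row, column) pair that is scaled by the largest valid index of the image, could look as follows; since each point contributes two numbers, the flattened vector has 120 entries, which matches the 120 input neurons described for the network. The function name and the scaling choice are assumptions.

```python
def feature_vector(vertical_points, horizontal_points, height=256, width=256):
    """Concatenate both point sets and scale row/column numbers to [0, 1].
    Dividing by (size - 1) is an assumed normalization, not the paper's."""
    vector = []
    for row, col in list(vertical_points) + list(horizontal_points):
        vector.append(row / (height - 1))
        vector.append(col / (width - 1))
    return vector


# Placeholder points only, to show the shape of the result.
vertical = [(0, 255)] * 30
horizontal = [(128, 64)] * 30
vector = feature_vector(vertical, horizontal)   # 60 points -> 120 values in [0, 1]
```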

In the verification stage, the signature to be tested is preprocessed, and feature extraction is performed on the preprocessed test signature image as explained in 2.2 to obtain a feature vector of size 60. After normalization, the feature vector is fed to the trained neural network, which classifies the signature as genuine or forged.

Table 1 gives the algorithm for the offline signature verification system in which a neural network is used for verifying the authenticity of signatures.

Fig. 7 Signature image after vertical splitting


TABLE 1

Algorithm for Offline Signature Verification using Neural Network

Input: signature from a database

Output: verified signature classified as genuine or forged.

1. Retrieval of signature image from database.

2. Preprocessing the signatures.

2.1 Converting image to binary.

2.2 Image resizing.

2.3 Thinning.

2.4 Finding bounding box of the signature.

3. Feature extraction

3.1 Extracting features using vertical splitting.

3.2 Extracting features using horizontal splitting.

4. Creation of feature vector by combining extracted features obtained from horizontal and vertical splitting.

5. Normalizing a feature vector.

6. Training a neural network with normalized feature vector.

7. Steps 1 to 5 are repeated for testing signatures.

8. Applying normalized feature vector of test signature to trained neural network.

9. Using the result generated by the output neuron of the neural network, declaring the signature as genuine or forged.

[Performance plot: Performance is 0.00999993, Goal is 0.01; x-axis: 51311 Epochs]

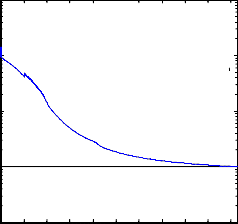

Fig. 8 Performance graph of training a NN using EBPTA.

False Acceptance Rate (FAR), False Rejection Rate (FRR) and Correct Classification Rate (CCR) are the three parameters used for measuring the performance of the system. FAR is calculated by (1), FRR by (2) and CCR by (3).

$$\mathrm{FAR} = \frac{\text{Number of forgeries accepted}}{\text{Number of forgeries tested}} \times 100 \tag{1}$$

$$\mathrm{FRR} = \frac{\text{Number of originals rejected}}{\text{Number of originals tested}} \times 100 \tag{2}$$

$$\mathrm{CCR} = \frac{\text{Number of samples correctly recognized}}{\text{Number of samples tested}} \times 100 \tag{3}$$

Many signatures are used for training and testing the system. The results given in this paper are obtained using the “Grupo de Procesado Digital de Senales” (GPDS) signature database [8]. A total of 1440 signatures were used: 30 sets (i.e. from 30 different people), with 24 samples of genuine signatures and 24 samples of forgeries for each person. Figure 6 shows some of the signatures in the GPDS database. To train the system, a subset of this database was taken, comprising 19 genuine samples from each of the 30 individuals and 19 forgeries made by different persons for one signature. The features extracted from the 19 genuine signatures and 19 forged signatures for each person were used to train a neural network. The architecture of the neural network has an input layer, a hidden layer and an output layer [9]. The input layer has 120 neurons for the 60 feature points, as each feature point has a row and a column number; the hidden layer has 120 neurons; and the output layer has one neuron. After the feature vector of a test signature is applied, the test signature is declared genuine if the output neuron generates a value close to +1, and forged if it generates a value close to -1.
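The forward pass and decision rule of such a 120-120-1 network can be sketched as below. The paper does not name the activation function or the cut-off between "close to +1" and "close to -1"; the sketch assumes tanh units (whose output lies between -1 and +1) and a threshold of 0. The weights here are random and untrained, and all names are illustrative.

```python
import math
import random


def init_layer(n_in, n_out, rng):
    """One weight list per neuron: n_in weights followed by a bias."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(n_in + 1)] for _ in range(n_out)]


def layer_forward(weights, inputs):
    """tanh(w . x + b) for every neuron (tanh is an assumed bipolar activation)."""
    return [math.tanh(sum(w * x for w, x in zip(neuron[:-1], inputs)) + neuron[-1])
            for neuron in weights]


def classify(hidden, output, features, threshold=0.0):
    """Return (label, raw output); the threshold of 0 is an assumption."""
    y = layer_forward(output, layer_forward(hidden, features))[0]
    return ("genuine" if y >= threshold else "forged"), y


rng = random.Random(0)
hidden_layer = init_layer(120, 120, rng)   # 120 inputs -> 120 hidden neurons
output_layer = init_layer(120, 1, rng)     # 120 hidden -> 1 output neuron
label, value = classify(hidden_layer, output_layer, [0.5] * 120)
```

In the paper the weights are obtained by error back-propagation training; the sketch only shows how a trained network would be queried.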

Fig. 8 shows the performance graph of training a two-layer feed-forward neural network using the Error Back Propagation Training Algorithm (EBPTA).

The genuine and forged signature samples used for training the neural network are applied again in the testing phase to check whether the neural network classifies them correctly as genuine or forged. This is called Recall. The result of recall is shown in Table 2.

When the neural network was presented with 570 genuine signatures from 30 different persons, it classified all 570 genuine signatures as genuine, and when 570 forged signatures from 30 different persons were applied, it recognized all 570 signatures as forgeries. Thus the FAR and FRR of the system are 0%. Hence, the Correct Classification Rate (CCR) is 100% for Recall.


TABLE 2

Result of Testing Neural Network with Trained Signature Samples

| Samples presented | Genuine | Forged | CCR in Recall |
|---|---|---|---|
| 570 genuine | 570 | 0 | 100% |
| 570 forged | 0 | 570 | 100% |

When signature samples that were not used for training the neural network are applied as test signatures to the trained neural network, it is called Generalization. The result of generalization is shown in Table 3.

TABLE 3

Result of Testing Neural Network with New Signature Samples from Database

| Samples presented | Genuine | Forged | FAR | FRR | CCR in Generalization |
|---|---|---|---|---|---|
| 150 genuine | 125 | 25 | - | 16.7% | 85.7% |
| 150 forged | 18 | 132 | 12% | - | 85.7% |

When presented with 150 genuine signatures from 30 different persons, the neural network classified 125 signatures as genuine and 25 signatures as forgeries. Thus the FRR of the system is 16.7%. When 150 forged signatures were given as input to the neural network, it classified 18 signatures as genuine and 132 as forgeries. Thus the FAR of the system is 12%. Hence, the Correct Classification Rate is 85.7% for generalization.
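The reported generalization rates can be checked directly from the counts, using the definitions of FAR, FRR and CCR as percentages of the samples tested. The short sketch below does only that arithmetic; the variable names are illustrative.

```python
def rate(count, total):
    """Percentage of `count` out of `total`."""
    return 100.0 * count / total


# Counts reported for generalization.
genuine_tested, genuine_rejected = 150, 25
forged_tested, forged_accepted = 150, 18

far = rate(forged_accepted, forged_tested)       # forgeries accepted as genuine
frr = rate(genuine_rejected, genuine_tested)     # genuine signatures rejected
correct = (genuine_tested - genuine_rejected) + (forged_tested - forged_accepted)
ccr = rate(correct, genuine_tested + forged_tested)

print(f"FAR = {far:.1f}%, FRR = {frr:.1f}%, CCR = {ccr:.1f}%")
# prints: FAR = 12.0%, FRR = 16.7%, CCR = 85.7%
```

The 257 correctly classified samples (125 genuine plus 132 forged) out of 300 give the 85.7% figure.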

This paper presents a method of offline signature verification using a neural network approach. The method uses geometric features extracted from preprocessed signature images. The extracted features are used to train a neural network using the error back propagation training algorithm. As shown in Table 2, CCR in recall is 100%: the network could classify all genuine and forged signatures correctly. When the network was presented with signature samples from the database different from the ones used in the training phase, it recognized 257 out of 300 such signatures (150 genuine and 150 forged) correctly. Hence, the correct classification rate of the system is 85.7% in generalization, as shown in Table 3.

[1] Bradley Schafer, Serestina Viriry, “An Offline Signature Verification System”, IEEE International Conference on Signals and Image Processing Application, 2009.

[2] Ramachandra A. C, Jyoti Shrinivas Rao, “Robust Offline Signature Verification Based on Global Features”, IEEE International Advance Computing Conference, 2009.

[3] J. Edson, R. Justino, F. Bortolozzi and R. Sabourin, “An off-line signature verification using HMM for Random, Simple and Skilled Forgeries”, Sixth International Conference on Document Analysis and Recognition, pp. 1031-1034, Sept. 2001.

[4] J. Edson, R. Justino, A. El Yacoubi, F. Bortolozzi and R. Sabourin, “An off-line Signature Verification System Using HMM and Graphometric features”, DAS 2000, pp. 211-222, Dec. 2000.

[5] R. Plamondon and S.N. Srihari, “Online and Offline Handwriting Recognition: A Comprehensive Survey”, IEEE Tran. on Pattern Analysis and Machine Intelligence, vol. 22, no. 1, pp. 63-84, Jan. 2000.

[6] Prasad A.G., Amaresh V.M., “An offline signature verification system”.

[7] B. Fang, C.H. Leung, Y.Y. Tang, K.W. Tse, P.C.K. Kwok and Y.K. Wong, “Off-line signature verification by the tracking of feature and stroke positions”, Pattern Recognition 36, 2003, pp. 91-101.

[8] Martinez, L.E., Travieso, C.M., Alonso, J.B., and Ferrer, M., “Parameterization of a forgery Handwritten Signature Verification using SVM”, IEEE 38th Annual 2004 International Carnahan Conference on Security Technology, 2004, pp. 193-196.

[9] Jacek M. Zurada, “An Introduction to Artificial Neural Systems”, West Publishing Company, 1992.
