
International Journal of Scientific & Engineering Research, Volume 5, Issue 7, July-2014 320

ISSN 2229-5518

Novel Multi-modal Image Fusion Techniques

Y.G.Srilatha (MTech Student, Jain University, Bangalore)

Thermal images are used to deduce temperature at the surface of viewed objects. Visual images are used to provide information about absorptivity and relative orientation of the viewed surface which is needed for correct estimation of surface heat fluxes.

The task of interpreting images, either visual images alone or thermal images alone, is an underconstrained problem. The thermal image can at best yield estimates of surface temperature which, in general, is not specific in distinguishing between object classes. The features extracted from visual intensity images also lack the specificity required for uniquely determining the identity of the imaged object. The interpretation of each type of image thus leads to ambiguous inferences about the nature of the objects in the scene. The use of thermal data gathered by an infrared camera, along with the visual image, is seen as a way of resolving some of these ambiguities.[4]

In this work, Google Earth images have been considered as visual images. Since the Google Earth image and the IR image come from two different modalities, the two images are not perfectly aligned to begin with. As shown in Fig 2, the visual image and the IR image are not registered perfectly. The two images were therefore first mutually registered, as shown in Fig 2 (c). The registered image was then used as input to the fusion algorithms.

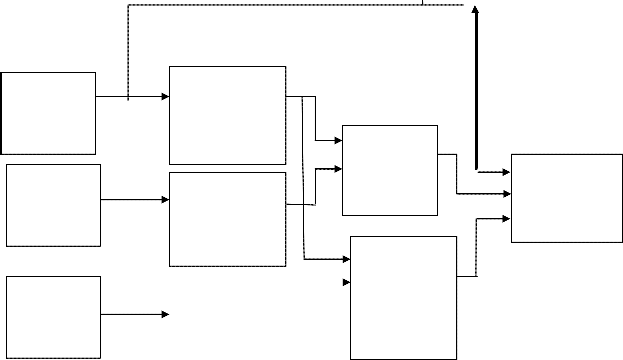

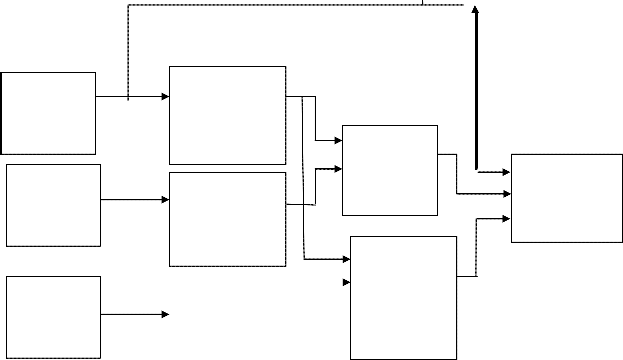

In this paper, two methods of image fusion are discussed. The first method is multi-resolution, Wavelet Transform based image fusion. The second method uses image segmentation and hot spot detection through color transforms.

————————————————

Y.G.Srilatha is currently pursuing an MTech at Jain University, PH- 8088489816, E-mail: srilathamusic@gmail.com

First, the input visual image is transformed from RGB color space to HSV color space, because HSV is well suited for describing colors in terms closely related to human interpretation. It also decouples the intensity component from the color-carrying information in a color image. The V channel of the visual image, which represents the intensity of the image, is used in the fusion. The other two channels, H and S, carry the color information. Besides HSV, LAB color space is also used. A color is defined in LAB color space by the brightness L, the red-green chrominance A, and the yellow-blue chrominance B.[2][4]
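As a minimal sketch of the intensity extraction described above, the V channel of HSV can be computed per pixel as the maximum of the R, G and B components. The function name `rgb_to_value_channel` and the sample pixel values are illustrative assumptions, not taken from the paper.

```python
import numpy as np

def rgb_to_value_channel(rgb):
    """Return the HSV value (V) channel of an RGB image: V = max(R, G, B) per pixel."""
    rgb = np.asarray(rgb)
    return rgb.max(axis=-1)

# Hypothetical 2x2 RGB image (8-bit), used only to illustrate the computation.
rgb = np.array([[[255, 0, 0], [10, 200, 30]],
                [[0, 0, 0], [90, 90, 120]]], dtype=np.uint8)
v = rgb_to_value_channel(rgb)
```

The H and S channels are not needed for the fusion step itself, so the sketch leaves them out.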

In this methodology, the reverse polarity IR image is used along with the visual image and the IR image. The motivation is that the hot spots are sometimes more evident in the reverse polarity IR image.

The V channel of the visual image is fused not only with the IR image but also with the reverse polarity IR image. Two wavelet transform methods have been applied to both fusion operations: the traditional Mallat wavelet algorithm and the simple Haar wavelet.[4][5]

First, each source image is decomposed into a multi-scale representation using the DWT. When fusing a visual and an IR image, it is difficult to obtain perfect registration because of the different characteristics of the two images, so registration has been implemented to obtain properly aligned images. The fusion rule used is: “choose the average value of the coefficients of the source images for the low frequency band and the maximum value of the coefficients of the source images for the high frequency bands”. Finally, the fused image is produced by applying the inverse DWT.[5]
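The fusion rule above can be sketched with a single-level 2-D Haar transform written directly in NumPy. This is an illustration under stated assumptions, not the paper's implementation: it uses one decomposition level instead of a full multi-scale pyramid, it requires even image dimensions, and it reads "maximum value" for the high frequency bands as the coefficient with the larger absolute value (a literal reading would use `np.maximum` instead). All function names are hypothetical.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)

def haar_dwt2(img):
    """Single-level 2-D Haar DWT; both image dimensions must be even."""
    x = np.asarray(img, dtype=np.float64)
    # Filter along rows: pairwise sums (low-pass) and differences (high-pass).
    lo = (x[:, 0::2] + x[:, 1::2]) / SQRT2
    hi = (x[:, 0::2] - x[:, 1::2]) / SQRT2
    # Filter along columns to obtain the four sub-bands.
    ll = (lo[0::2] + lo[1::2]) / SQRT2
    lh = (lo[0::2] - lo[1::2]) / SQRT2
    hl = (hi[0::2] + hi[1::2]) / SQRT2
    hh = (hi[0::2] - hi[1::2]) / SQRT2
    return ll, (lh, hl, hh)

def haar_idwt2(ll, details):
    """Inverse of haar_dwt2."""
    lh, hl, hh = details
    rows, cols = ll.shape
    lo = np.empty((2 * rows, cols))
    hi = np.empty((2 * rows, cols))
    lo[0::2], lo[1::2] = (ll + lh) / SQRT2, (ll - lh) / SQRT2
    hi[0::2], hi[1::2] = (hl + hh) / SQRT2, (hl - hh) / SQRT2
    x = np.empty((2 * rows, 2 * cols))
    x[:, 0::2], x[:, 1::2] = (lo + hi) / SQRT2, (lo - hi) / SQRT2
    return x

def fuse_haar(a, b):
    """Average the low frequency band; keep the larger-magnitude detail coefficient."""
    ll_a, det_a = haar_dwt2(a)
    ll_b, det_b = haar_dwt2(b)
    ll = (ll_a + ll_b) / 2.0
    det = tuple(np.where(np.abs(da) >= np.abs(db), da, db)
                for da, db in zip(det_a, det_b))
    return haar_idwt2(ll, det)

# Random stand-ins for a registered V channel and IR image.
rng = np.random.default_rng(0)
visual_v = rng.random((4, 4))
ir = rng.random((4, 4))
fused = fuse_haar(visual_v, ir)
```

Fusing an image with itself returns the image unchanged, which is a quick sanity check that the forward and inverse transforms match.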

IJSER © 2014 http://www.ijser.org

After obtaining the two gray-level fused images, a color RGB image is obtained by assigning the V channel of the visual image in HSV color space to the green channel, the fused image of visual and IR to the red channel, and the fused image of visual and reverse polarity IR to the blue channel. The resulting color image is called a pseudo color image. The color mapping employed is motivated by the opponent color processing work of Aguilar and Waxman.[2]
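The channel assignment described above can be sketched as a simple stacking of three gray-level images. The function name `compose_pseudo_color` and the constant test images are illustrative assumptions; the inputs are assumed to be registered, equally sized, and already scaled to the 0-255 range.

```python
import numpy as np

def compose_pseudo_color(v_channel, fused_ir, fused_rev_ir):
    """Build an RGB image: R = visual+IR fusion, G = V channel, B = visual+reverse-polarity-IR fusion."""
    channels = [fused_ir, v_channel, fused_rev_ir]  # order is R, G, B
    rgb = np.stack([np.asarray(c, dtype=np.float64) for c in channels], axis=-1)
    # Round and clip so the result is a valid 8-bit image.
    return np.clip(np.rint(rgb), 0, 255).astype(np.uint8)

# Hypothetical constant images standing in for the real inputs.
v = np.full((2, 2), 100.0)
f_ir = np.full((2, 2), 180.0)
f_rev = np.full((2, 2), 60.0)
pseudo = compose_pseudo_color(v, f_ir, f_rev)
```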

[Block diagram of the wavelet-based fusion method. Blocks: visual image; infrared image; reverse polarity IR image; wavelet transform of visual image; wavelet transform of IR image; visual and IR fused image; visual and reverse polarity IR fused image; pseudo image.]

Image segmentation methods can be broadly classified into region growing and region splitting and merging. In this fusion methodology, the region growing method of image segmentation is used. The goal of region growing is to use image characteristics to map individual pixels in an input image to sets of pixels called regions. As the name implies, region growing is a procedure that groups pixels or sub-regions into larger regions based on pre-defined criteria for growth. The basic approach is to start with a set of “seed” points and from these grow regions by appending to each seed those neighboring pixels that have pre-defined properties similar to the seed.[1]

A region-growing algorithm based on 8-connectivity is stated as follows:

Let f(x,y) denote an input image; S(x,y) denote a seed array containing 1s at the locations of seed points and 0s elsewhere; and Q denote a predicate to be applied at each location (x,y).

1. Find all connected components in S(x,y) and erode each connected component to one pixel; label all such pixels found as 1. All other pixels in S are labeled 0.

2. Form an image fQ such that, at a pair of coordinates (x,y), fQ(x,y) = 1 if the input image satisfies the given predicate, Q, at those coordinates; otherwise, fQ(x,y) = 0.

3. Let g be an image formed by appending to each seed point in S all the 1-valued points in fQ that are 8-connected to that seed point.

4. Label each connected component in g with a different region label. This is the segmented image obtained by region growing.[1]
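The algorithm above can be sketched as a breadth-first search from each seed over the predicate image. This is a simplified illustration, not the paper's code: it assumes the seed array has already been reduced to isolated single pixels (the erosion step), and the function name `region_grow`, the sample image, and the predicate `f >= 7` are hypothetical.

```python
from collections import deque
import numpy as np

def region_grow(f, seeds, predicate):
    """Grow 8-connected regions from seed pixels; returns a label image (0 = background)."""
    f = np.asarray(f)
    f_q = predicate(f)  # binary predicate image fQ
    labels = np.zeros(f.shape, dtype=np.int32)
    rows, cols = f.shape
    neighbours = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)
                  if (dr, dc) != (0, 0)]
    next_label = 0
    for r0, c0 in zip(*np.nonzero(seeds)):
        if labels[r0, c0]:
            continue  # this seed was already absorbed into an earlier region
        next_label += 1
        labels[r0, c0] = next_label
        queue = deque([(r0, c0)])
        while queue:
            r, c = queue.popleft()
            for dr, dc in neighbours:
                rr, cc = r + dr, c + dc
                inside = 0 <= rr < rows and 0 <= cc < cols
                if inside and f_q[rr, cc] and not labels[rr, cc]:
                    labels[rr, cc] = next_label
                    queue.append((rr, cc))
    return labels

# Hypothetical image with two bright blobs and one seed in each.
f = np.array([[9, 8, 0, 0, 0],
              [0, 7, 0, 0, 0],
              [0, 0, 0, 0, 0],
              [0, 0, 0, 9, 9],
              [0, 0, 0, 0, 8]])
seeds = np.zeros_like(f, dtype=bool)
seeds[0, 0] = True
seeds[3, 3] = True
labels = region_grow(f, seeds, lambda img: img >= 7)
```

Two seeds that fall in the same connected part of fQ end up with the same label, because the second seed is skipped once the first region has reached it.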

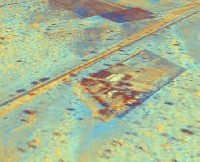

Initially, thresholding was applied to the IR image, i.e. only the regions in which hot spots could be visible were considered. After obtaining the seed pixels, region growing was applied to the image.
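A minimal sketch of the thresholding step, assuming an 8-bit IR image in which brighter pixels are hotter. The paper does not state the threshold it used, so `HOT_THRESHOLD = 200` and the function name `seed_mask` are purely hypothetical.

```python
import numpy as np

HOT_THRESHOLD = 200  # hypothetical cut-off; the paper does not give a value

def seed_mask(ir, threshold=HOT_THRESHOLD):
    """Return a boolean array that is True where the IR intensity reaches the threshold."""
    return np.asarray(ir) >= threshold

# Hypothetical 3x3 IR patch with two hot pixels.
ir = np.array([[12, 40, 210],
               [35, 220, 90],
               [10, 15, 20]], dtype=np.uint8)
seeds = seed_mask(ir)
```

The resulting mask marks candidate seed pixels from which regions can then be grown.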

Further, the IR image was pseudo colored so that the variations in the temperatures could be easily detected.

There are various techniques for pseudo coloring an image.


Fig 1. (a) Visual image, (b) IR image, (c) Fused image (weighted average)

The above diagram depicts the color variations of the basic RGB color space, wherein each color component takes a value in the range 0-255.[2] Using this color variation scheme, the IR image has been pseudo colored. The pseudo color IR image and the daylight visual image are fused by weighted averaging. Since the IR image reveals the hot spots, it is given more weight than the visual image.
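The pseudo coloring and weighted averaging can be sketched as follows. The exact color scheme and weights used in the paper are not given in the text, so the linear blue-to-red ramp in `pseudo_color` and the IR weight of 0.75 are assumptions chosen only to illustrate the idea that hot pixels appear red and that IR is weighted more heavily than the visual image.

```python
import numpy as np

def pseudo_color(ir):
    """Map 8-bit IR intensity to RGB on a linear blue (cold) to red (hot) ramp."""
    t = np.asarray(ir, dtype=np.float64) / 255.0
    return np.stack([t, np.zeros_like(t), 1.0 - t], axis=-1) * 255.0

def weighted_fusion(ir_rgb, visual_rgb, w_ir=0.75):
    """Weighted average of two RGB images; w_ir > 0.5 favors the IR image."""
    fused = w_ir * np.asarray(ir_rgb, dtype=np.float64) \
        + (1.0 - w_ir) * np.asarray(visual_rgb, dtype=np.float64)
    return np.clip(np.rint(fused), 0, 255).astype(np.uint8)

# Hypothetical inputs: one cold and one hot IR pixel over a uniform gray visual image.
ir = np.array([[0, 255]], dtype=np.uint8)
visual = np.full((1, 2, 3), 100, dtype=np.uint8)
fused = weighted_fusion(pseudo_color(ir), visual)
```

With these inputs the cold pixel comes out predominantly blue and the hot pixel predominantly red, while the visual image still contributes a quarter of each channel.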

Initially, the tests were done on some normal images obtained through a fuzor, which provided pre-registered visual and IR images; later, the same method was tested on some satellite and aerial images. Fig 1 shows the segmentation-based fusion method. As can be seen, the IR image has been pseudo colored and the fusion has been done by a weighted average of the IR and visual images. The hot spots are shown in red. The cooler regions are colored along the decreasing temperature gradient.[2]

Fig 2. (a) Visual (EO) image, (b) IR image, (c) Registered EO image

In Fig 2, the visual and IR images are registered. Fusion has then been performed with both fusion methods, and the fused outputs are as shown below.


Fig 3. (a) Fusion output obtained by the Wavelet Transform method, (b) Fusion output obtained by the segmentation and weighted average method.

In this paper, two color image fusion methods have been developed, which will be useful for surveillance and for weapon and target detection. The fused image takes the complementary information from the visual and the IR image and provides a detailed description of the objects in the scene, including any hidden objects.[4] The utility of the methods is demonstrated in the experimental tests. The hot spots present in the IR image were clearly visible in the fused image. The Google Earth image is usually a larger map, in which a set of points corresponds to the IR image. After fusion, the fused image was projected onto the larger Google Earth image.

[1] R. C. Gonzalez, R. E. Woods, Digital Image Processing, Second Edition, Prentice Hall, New Jersey, 2002.

[2] A. M. Waxman, M. Aguilar, R. A. Baxter, D. A. Fay, D. B. Ireland, J. P. Racamato, W. D. Ross, Opponent-color fusion of multi-sensor imagery: visible, IR and SAR, Proceedings of IRIS Passive Sensors, vol. 1, pp. 43-61, 1998.

[3] Z. Zhang, R. S. Blum, A categorization of multi-scale decomposition-based image fusion schemes with a performance study for a digital camera application, Proceedings of IEEE, vol. 87, no. 8, pp. 1315-1326, 1999.

[4] Zhiyun Xue, Rick S. Blum, Concealed Weapon Detection Using Color Image Fusion, Electrical and Computer Engineering Department, Lehigh University, Bethlehem, PA, USA.

[5] Jorge Nunez, Xavier Otazu, Octavi Fors, Albert Prades, Vicenc Pala and Roman Arbiol, Multiresolution-Based Image Fusion with Additive Wavelet Decomposition, IEEE Transactions on Geoscience and Remote Sensing, vol. 37, no. 3, May 1999.
