International Journal of Scientific & Engineering Research, Volume 2, Issue 12, December-2011 1

ISSN 2229-5518

Natural Image Segmentation and Object Recognition using ACA and Steerable Filter based on Color-Texture Features

Mrs. Nilima Kulkarni*, Dr. Mrs. A.M. Rajurkar

Abstract - An image segmentation algorithm based on low-level color and texture features is presented. It is aimed at the segmentation of natural scenes, in which the color and texture of each segment do not typically exhibit uniform statistical characteristics. The proposed approach combines knowledge of human perception with an understanding of signal characteristics in order to segment natural scenes into perceptually/semantically uniform regions. It is based on two types of spatially adaptive low-level features. The first describes the local color composition in terms of spatially adaptive dominant colors, and the second describes the spatial characteristics of the grayscale component of the texture. Together, they provide a simple and effective characterization of texture that the proposed algorithm uses to obtain robust and, at the same time, accurate and precise segmentations. We also attempt to recognize objects in the images, using pixel color values. The images are assumed to be of relatively low resolution and may be degraded or compressed.

Index Terms—Adaptive clustering algorithm (ACA), content based image retrieval (CBIR), color values, human visual system (HVS), local median energy, natural images, optimal color composition distance (OCCD), steerable filter decomposition.


1 INTRODUCTION

This paper considers the problem of segmenting natural images based on color and texture. Although significant progress has been made in texture segmentation (e.g., [3], [4], [5], [6], and [7]) and color segmentation (e.g., [8], [9], [10], and [11]) separately, the area of combined color and texture segmentation remains open and active. Some of the recent work includes JSEG [12], stochastic model-based approaches [13], [14], and [15], watershed techniques [16], edge flow techniques [17], and normalized cuts [18].

Another challenging aspect of image segmentation is the extraction of perceptually relevant information. Since humans are the ultimate users of most CBIR systems, it is important to obtain segmentations that can be used to organize image contents semantically, according to categories that are meaningful to humans. This requires the extraction of low-level image features that can be correlated with high-level image semantics. However, rather than trying to obtain a complete and detailed description of every object in the scene, it may be sufficient to isolate certain regions of perceptual significance (such as “sky,” “water,” “mountains,” etc.) that can be used to correctly classify an image into a given category, such as “natural,” “man-made,” “outdoor,” etc. [19]. An important first step toward accomplishing this goal is to develop low-level image features and segmentation techniques that are based on perceptual models and principles about the processing of color and texture information. We focus on natural images. The images are assumed to be of relatively low resolution (e.g., 200 × 200 pixels or lower) and occasionally degraded or compressed, and the segmentation should handle them just as humans do. This is especially important since low-resolution images are the ones most frequently used within WWW documents.

* Nilima Kulkarni holds a master's degree in engineering (computer science and engineering) and is working as an Assistant Professor in the Information Science & Engineering Department, New Horizon College of Engineering, Bangalore, India. E-mail: kulkarninilima@gmail.com

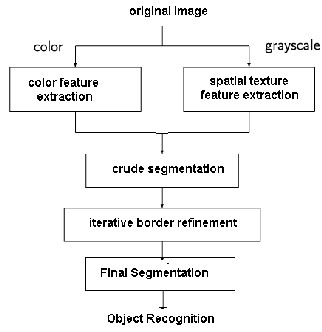

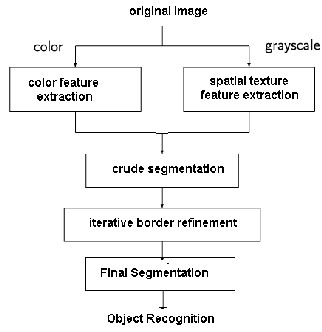

As illustrated in Fig. 1, we develop two types of features: one describes the local color composition, and the other the spatial characteristics of the grayscale component of the texture. These features are first developed independently and then combined to obtain an overall segmentation. The initial motivation for the proposed approach came from the adaptive clustering algorithm (ACA) proposed by Pappas [8]. ACA has been quite successful for segmenting images with regions of slowly varying intensity but oversegments images with texture. Thus, a new algorithm is necessary that can extract color textures as uniform regions and provide an overall strategy for segmenting natural images that contain both textured and smooth areas.

The proposed approach uses ACA as a building block. It separates the image into smooth and textured areas, and combines the color composition and spatial texture features to consolidate textured areas into regions. The color composition features consist of the dominant colors and associated percentages in the vicinity of each pixel. They are based on the estimation of spatially adaptive dominant colors.

This is an important new idea, which, on the one hand, reflects the fact that the human visual system (HVS) cannot simultaneously perceive a large number of colors and, on the other, the fact that region colors are spatially varying.

Spatially adaptive dominant colors can be obtained using the ACA [8]. Finally, we use a modified optimal color composition distance (OCCD) metric to determine the perceptual similarity of two color composition feature vectors [23], [27]. The spatial texture features describe the spatial characteristics of the grayscale component of the texture and are based on a multiscale frequency decomposition that offers an efficient and flexible approximation of early processing in the HVS. We use the local energy of the subband coefficients as a simple but effective characterization of spatial texture.

IJSER © 2011

http://www.ijser.org

A median filter operation is used to distinguish the energy due to region boundaries from the energy of the textures themselves.

The segmentation algorithm combines the color composition and spatial texture features to obtain segments of uniform texture [27]. This is done in two steps. The first relies on a multigrid region growing algorithm to obtain a crude segmentation. The segmentation is crude because the estimation of the spatial and color composition texture features requires a finite window. The second step uses an elaborate border refinement procedure to obtain accurate and precise border localization by appropriately combining the texture features with the underlying ACA segmentation. The step after segmentation is object recognition, which is a complex task in computer vision. We have tried to recognize three different types of objects in an image.

Figure 1. Schematic of the proposed segmentation algorithm.

The paper is organized as follows. Section 2 presents the color composition feature extraction. The extraction of the spatial texture features is presented in Section 3. Section 4 discusses the algorithm that combines the spatial texture and color composition features to obtain an overall segmentation, describes object recognition, and presents the segmentation results. The conclusions are summarized in Section 5.

2 COLOR COMPOSITION FEATURE EXTRACTION

Color has been used extensively as a low-level feature for image retrieval [1], [24], [25], and [26]. In this section, we discuss new color composition texture features that take into account both image characteristics and human color perception. In order to account for the spatially varying image characteristics and the adaptive nature of the HVS, we use the idea of spatially adaptive dominant colors. The proposed color composition feature representation consists of a limited number of locally adapted dominant colors and the corresponding percentage of occurrence of each color within a certain neighborhood:

$$f_c(x, y, N_{x,y}) = \{(c_i, p_i),\ i = 1, \ldots, M,\ p_i \in [0, 1]\} \tag{1}$$

where each dominant color $c_i$ is a 3-D vector in Lab space and $p_i$ is the corresponding percentage. $N_{x,y}$ denotes the neighborhood around the pixel at location $(x, y)$, and $M$ is the total number of colors in the neighborhood. A typical value is $M = 4$.
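To make Eq. (1) concrete, here is a minimal Python sketch that computes $f_c$ at a single pixel. It assumes a label map (the class of each pixel, for example from the ACA) and a per-pixel dominant color in Lab; the function name `color_composition` and the square window of half-width `r` are illustrative choices, not taken from the paper. Each class present in the window contributes its average adaptive color and its share of the window's pixels.

```python
from collections import defaultdict


def color_composition(labels, colors, x, y, r):
    """Color composition feature f_c(x, y, N_xy) over a (2r+1) x (2r+1) window.

    labels[y][x] -- class index of each pixel (e.g., from an ACA segmentation)
    colors[y][x] -- that pixel's dominant color as an (L, a, b) tuple
    Returns a list of (mean_color, percentage) pairs, one per class present.
    """
    h, w = len(labels), len(labels[0])
    sums = defaultdict(lambda: [0.0, 0.0, 0.0])
    counts = defaultdict(int)
    total = 0
    # The window is clipped at the image border.
    for yy in range(max(0, y - r), min(h, y + r + 1)):
        for xx in range(max(0, x - r), min(w, x + r + 1)):
            k = labels[yy][xx]
            for ch in range(3):
                sums[k][ch] += colors[yy][xx][ch]
            counts[k] += 1
            total += 1
    # Average the adaptive colors of each class; convert counts to percentages.
    return [(tuple(s / counts[k] for s in sums[k]), counts[k] / total)
            for k in sorted(counts)]
```

Because the percentages are fractions of the same window, they always sum to 1, and the number of pairs is at most the number of classes (M).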

One approach for obtaining spatially adaptive dominant colors is the ACA proposed in [8] and extended to color in [9]. The ACA is an iterative algorithm that can be regarded as a generalization of the K-means clustering algorithm in two respects: it is adaptive and includes spatial constraints. It segments the image into K classes. Each class is characterized by a spatially varying characteristic function that replaces the spatially fixed cluster center of the K-means algorithm. Given these characteristic functions, the ACA finds the segmentation that maximizes the a posteriori probability density function for the distribution of regions given the observed image. The algorithm alternates between estimating the characteristic functions and updating the segmentation. The initial estimate is obtained by the K-means algorithm, which estimates the cluster centers (i.e., the dominant colors) by averaging the colors of the pixels in each class over the whole image. The key to adapting to the local image characteristics is that the ACA estimates the characteristic functions by averaging over a sliding window whose size progressively decreases. Thus, the algorithm starts with global estimates and slowly adapts to the local characteristics of each region. It is these characteristic functions that are used as the spatially adaptive dominant colors.
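The alternation described above can be sketched as follows. This is a deliberately simplified, grayscale version written for illustration: it keeps the K-means initialization, the sliding-window estimate of each class's local mean (the characteristic function), and the shrinking window sequence, but it omits the Gibbs random-field spatial constraint of [8], so it is not a faithful reimplementation. The function names, window sizes, and sweep count are hypothetical.

```python
def kmeans_1d(values, k, iters=20):
    """Plain K-means on scalar intensities; returns k cluster centers."""
    lo, hi = min(values), max(values)
    centers = [lo + (hi - lo) * (i + 0.5) / k for i in range(k)]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for v in values:
            groups[min(range(k), key=lambda c: abs(v - centers[c]))].append(v)
        centers = [sum(g) / len(g) if g else centers[i] for i, g in enumerate(groups)]
    return centers


def adaptive_clustering(img, k, windows=(64, 32, 16, 8), sweeps=2):
    """Simplified ACA-style clustering of a grayscale image (list of rows).

    Starts from a global K-means labeling, then alternates between
    (a) estimating each class's local mean in a sliding window and
    (b) relabeling each pixel with the class whose local mean is closest,
    while the window size shrinks. The Gibbs random-field term of [8] is omitted.
    """
    h, w = len(img), len(img[0])
    centers = kmeans_1d([v for row in img for v in row], k)
    labels = [[min(range(k), key=lambda c: abs(img[y][x] - centers[c]))
               for x in range(w)] for y in range(h)]
    for win in windows:
        r = win // 2
        for _ in range(sweeps):
            new = [[0] * w for _ in range(h)]
            for y in range(h):
                for x in range(w):
                    sums, cnts = [0.0] * k, [0] * k
                    for yy in range(max(0, y - r), min(h, y + r + 1)):
                        for xx in range(max(0, x - r), min(w, x + r + 1)):
                            sums[labels[yy][xx]] += img[yy][xx]
                            cnts[labels[yy][xx]] += 1
                    # Local class means play the role of the characteristic functions.
                    present = [c for c in range(k) if cnts[c]]
                    new[y][x] = min(present,
                                    key=lambda c: abs(img[y][x] - sums[c] / cnts[c]))
            labels = new
    return labels
```

Relabeling against local rather than global means is what lets a class follow slowly varying intensity; the omitted Gibbs term is what, in the full ACA, additionally discourages isolated labels.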


2.1 Color Composition Similarity Metric

We now define a metric that measures the perceptual similarity between two color composition feature vectors. Based on human perception, the color compositions of two images (or image segments) will be similar if the colors are similar and the total areas that each color occupies are similar. The definition of a metric that takes into account both the color and area differences depends on the mapping between the dominant colors of the two images. Mojsilovic et al. [23] proposed the OCCD, which finds the optimal mapping between the dominant colors of two images and, thus, provides a better similarity measure. The OCCD overcomes the significant problems of metrics that do not establish such an optimal mapping but, in general, requires more computation. However, since we are primarily interested in comparing image segments that contain only a few colors (at most four), the additional overhead for the OCCD is reasonable. Moreover, we use an efficient implementation of the OCCD for the problem at hand that produces a close approximation of the optimal solution [27].

Given two color composition feature vectors $f_{c1}$ and $f_{c2}$, create a stack of tokens (colors and corresponding percentages) for each feature vector, and an empty destination stack for each vector. Then:

(1) Select a pair of tokens $(c_a, p_a)$ and $(c_b, p_b)$ with nonzero percentages, one from each feature vector, whose colors are closest.

(2) Move the token with the lower percentage (e.g., $(c_a, p_a)$) to its destination stack. Split the other token into $(c_b, p_a)$ and $(c_b, p_b - p_a)$, and move the first part to the corresponding destination stack.

(3) Repeat the above steps with the remaining colors until the initial stacks are empty.

Even though this implementation is not guaranteed to result in the optimal mapping, in practice, given the small number of classes, it produces excellent results. On the other hand, it avoids the quantization error introduced by the original OCCD and, thus, can be even more accurate than the original implementation. Once the color correspondences are established, the OCCD distance is calculated [27].
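Steps (1) to (3) translate directly into a short greedy loop. The sketch below is an illustration under stated assumptions: the function name `occd_greedy` is hypothetical, colors are compared with the Euclidean distance in Lab, and the final distance is assumed to be the percentage-weighted sum of the distances between matched colors; see [23], [27] for the exact OCCD definition.

```python
import math


def occd_greedy(f1, f2, eps=1e-12):
    """Greedy approximation of the OCCD between two color composition features.

    f1, f2 -- lists of (color, percentage); colors are (L, a, b) tuples and
    the percentages of each feature sum to 1.
    Returns (matches, distance); matches holds (color_a, color_b, percentage).
    """
    a = [[c, p] for c, p in f1 if p > 0]   # token stacks, nonzero percentages only
    b = [[c, p] for c, p in f2 if p > 0]
    matches = []                            # plays the role of the destination stacks
    while a and b:
        # Step 1: the pair of tokens (one from each feature) with the closest colors.
        i, j = min(((i, j) for i in range(len(a)) for j in range(len(b))),
                   key=lambda ij: math.dist(a[ij[0]][0], b[ij[1]][0]))
        # Step 2: move the smaller percentage p; the larger token keeps the remainder.
        p = min(a[i][1], b[j][1])
        matches.append((a[i][0], b[j][0], p))
        a[i][1] -= p
        b[j][1] -= p
        if a[i][1] <= eps:
            a.pop(i)
        if b[j][1] <= eps:
            b.pop(j)
    # Assumed distance: percentage-weighted Euclidean Lab distance of matched pairs.
    distance = sum(p * math.dist(ca, cb) for ca, cb, p in matches)
    return matches, distance
```

For example, matching a 60/40 split of two colors against a 50/50 split of the same two colors leaves only 10% of the first color paired with the second, so the distance is 0.1 times their Lab distance.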

3 SPATIAL TEXTURE FEATURE EXTRACTION

In this section, we isolate the texture feature extraction from that of color. We use only the grayscale component of the image to derive the spatial texture features, which are then combined with the color composition features to obtain an intermediate crude segmentation. Like many of the existing algorithms for texture analysis and synthesis (e.g., [5], [6], and [20]), this approach is based on a multiscale frequency decomposition. The proposed methodology, however, can make use of any of the decompositions used in those works.

This approach is based on the local median energy of the subband coefficients, where the energy is defined as the square of the coefficients. As we saw in the introduction, the advantage of the median filter is that it suppresses textures associated with transitions between regions, while it responds to texture within uniform regions.
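A small Python sketch of the local median energy shows why the median is used. It assumes a single subband stored as a list of rows and a square window of half-width `r`; the function name and `r` are illustrative, not from the paper.

```python
import statistics


def local_median_energy(coeffs, r):
    """Median of the squared subband coefficients over a (2r+1) x (2r+1) window.

    coeffs -- one subband as a list of rows; the window is clipped at the border.
    """
    h, w = len(coeffs), len(coeffs[0])
    energy = [[c * c for c in row] for row in coeffs]   # energy = coefficient squared
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [energy[yy][xx]
                      for yy in range(max(0, y - r), min(h, y + r + 1))
                      for xx in range(max(0, x - r), min(w, x + r + 1))]
            out[y][x] = statistics.median(window)
    return out
```

For example, with `r = 1`, a 7 × 7 subband whose only nonzero coefficients form a single column (as at a region boundary) has zero median energy everywhere, because the column never fills more than a third of any window; a mean filter would instead spread that energy into a band around the edge. A checkerboard texture of ±2, by contrast, keeps an energy of 4 at every pixel.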

We use a steerable filter decomposition with four orientation subbands (horizontal, vertical, +45°, and -45°). Most researchers have used four to six orientation bands to approximate the orientation selectivity of the HVS. Our goal is to identify regions with a dominant orientation (horizontal, vertical, +45°, or -45°); all other regions are classified as smooth (not enough energy in any orientation) or complex (no dominant orientation). The spatial texture feature extraction consists of two steps. First, we classify pixels into smooth and nonsmooth categories. Then we further classify the nonsmooth pixels into the remaining categories.

Let s0(x, y), s1(x, y), s2(x, y), and s3(x, y) represent the steerable subband coefficients at location (x, y) that correspond to the horizontal (0°), diagonal with positive slope (+45°), vertical (90°), and diagonal with negative slope (-45°) directions, respectively. We use smax(x, y) to denote the maximum (in absolute value) of the four coefficients at location (x, y), and si(x, y) to denote the index of the subband that attains that maximum. A pixel is classified as smooth if there is no substantial energy in any of the four orientation bands. As discussed above, a median operation is necessary to boost the response to texture within uniform regions and to suppress the response due to textures associated with transitions between regions. Thus, a pixel (x, y) is classified as smooth if the median of smax(x′, y′) over a neighborhood of (x, y) is below a threshold. This threshold is determined using a two-level K-means algorithm that segments the image into smooth and nonsmooth regions. A cluster validation step is necessary at this point: if the two clusters are too close, the image may contain only smooth or only nonsmooth regions, depending on the actual value of the cluster center.
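The smooth/nonsmooth decision can be sketched as follows. Assumptions: the four subbands are given as lists of rows indexed 0 to 3 in the order s0 to s3; the median is taken over the magnitude |smax|; the two-level K-means runs on the scalar median values with the threshold placed midway between the two centers; and the cluster-validation parameters `min_gap` and `smooth_level` are hypothetical, since the paper does not give values.

```python
import statistics


def max_orientation(s):
    """s[b][y][x] holds subband b = 0..3 (0, +45, 90, -45 degrees).

    Returns (smax, si): the coefficient of largest magnitude at each pixel
    and the index of the subband it came from."""
    h, w = len(s[0]), len(s[0][0])
    smax = [[0.0] * w for _ in range(h)]
    si = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            b = max(range(4), key=lambda k: abs(s[k][y][x]))
            smax[y][x], si[y][x] = s[b][y][x], b
    return smax, si


def two_means(values, iters=50):
    """Two-level K-means on scalars; returns the (low, high) cluster centers."""
    c0, c1 = min(values), max(values)
    for _ in range(iters):
        t = (c0 + c1) / 2
        low = [v for v in values if v <= t]
        high = [v for v in values if v > t]
        if not low or not high:
            break
        c0, c1 = sum(low) / len(low), sum(high) / len(high)
    return c0, c1


def smooth_mask(s, r=1, min_gap=1.0, smooth_level=1.0):
    """True where a pixel is classified as smooth.

    min_gap and smooth_level are hypothetical cluster-validation parameters."""
    smax, _ = max_orientation(s)
    h, w = len(smax), len(smax[0])
    med = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            med[y][x] = statistics.median(
                [abs(smax[yy][xx])
                 for yy in range(max(0, y - r), min(h, y + r + 1))
                 for xx in range(max(0, x - r), min(w, x + r + 1))])
    c0, c1 = two_means([v for row in med for v in row])
    if c1 - c0 < min_gap:
        # Cluster validation: centers too close, so the whole image is one class,
        # decided by the level of the (common) cluster center.
        uniform = (c0 + c1) / 2 < smooth_level
        return [[uniform] * w for _ in range(h)]
    t = (c0 + c1) / 2
    return [[v <= t for v in row] for row in med]
```

Placing the threshold midway between the two centers is simply the decision boundary of a two-cluster K-means on the median values; any other rule would need its own justification.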

The next step is to classify the pixels in the nonsmooth regions. As mentioned above, it is the index si(x, y) of the maximum of the four subband coefficients that determines the orientation of the texture at each image point. The texture classification is based on the local histogram of these indices. Again, a median type of operation is necessary to boost the response to texture within uniform regions and to suppress the response due to textures associated with transitions between regions. This is done as follows. We compute the percentage of each value (orientation) of the index si(x, y) in the neighborhood of (x, y); only the nonsmooth pixels within the neighborhood are considered. If the maximum of the percentages is higher than a threshold T1 (e.g., 36%) and the difference between the first and second maxima is greater than a threshold T2 (e.g., 15%), then there is a dominant orientation in the window and the pixel is classified accordingly. Otherwise, there is no dominant orientation, and the pixel is classified as complex. The first threshold ensures the existence of a dominant orientation and the second ensures its uniqueness.
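The orientation rule with thresholds T1 and T2 can be sketched as below, using the example values 36% and 15% from the text. The window half-width `r`, the label strings, and the function name are illustrative assumptions; `si` and `smooth` are assumed to come from the previous step.

```python
from collections import Counter

ORIENTATION = {0: "horizontal", 1: "+45", 2: "vertical", 3: "-45"}


def orientation_class(si, smooth, x, y, r=2, t1=0.36, t2=0.15):
    """Texture class of pixel (x, y) from the local histogram of subband indices.

    si[y][x]     -- index (0..3) of the subband with the largest coefficient
    smooth[y][x] -- True for pixels already classified as smooth
    """
    if smooth[y][x]:
        return "smooth"
    h, w = len(si), len(si[0])
    counts = Counter(si[yy][xx]
                     for yy in range(max(0, y - r), min(h, y + r + 1))
                     for xx in range(max(0, x - r), min(w, x + r + 1))
                     if not smooth[yy][xx])          # nonsmooth pixels only
    total = sum(counts.values())
    ranked = counts.most_common()
    first = ranked[0][1] / total
    second = ranked[1][1] / total if len(ranked) > 1 else 0.0
    # T1 ensures a dominant orientation exists; T2 ensures it is unique.
    if first > t1 and first - second > t2:
        return ORIENTATION[ranked[0][0]]
    return "complex"
```

With `r = 2`, a 5 × 5 window split 15/10 between vertical and horizontal indices passes both tests (60% > 36% and 60% - 40% > 15%) and is labeled vertical, whereas a window in which no index exceeds 36% is labeled complex.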

4 SEGMENTATION ALGORITHM

In this section, we present an algorithm that combines the color composition and spatial texture features to obtain the overall image segmentation.

The smooth and nonsmooth regions are considered separately. As discussed in Section 1, the ACA was developed for images with smooth regions. Thus, in those regions, we can rely on the ACA for the final segmentation. However, some region merging may be necessary. Thus, in the smooth regions, we consider all pairs of connected neighboring segments and merge them if the average color difference across their common border is below a given threshold. The color difference at each point along the border is based on the spatially adaptive dominant colors provided by the ACA, which thus provides a natural and robust region merging criterion. Finally, any remaining small color segments that are connected to nonsmooth texture regions are considered together with the nonsmooth regions and are assumed to have the same label as the nonsmooth region they are connected to. Figure 2 shows the different stages of the algorithm: (a) shows an original color image, (b) the ACA segmentation (dominant colors), and (c) the texture classes.
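The region-merging rule for smooth areas can be sketched with a union-find structure. In this illustration, the per-pixel colors stand in for the ACA's spatially adaptive dominant colors, the color difference is the Euclidean distance in Lab, borders are taken between 4-connected pixels, and the border averages are computed once from the initial ACA labels rather than re-evaluated after each merge; all of these are simplifying assumptions, and `threshold` is a free parameter.

```python
import math


def merge_smooth_segments(labels, colors, threshold):
    """Merge 4-connected neighboring segments whose average color difference
    across their common border is below `threshold`.

    labels[y][x] -- segment id; colors[y][x] -- the pixel's (L, a, b) color.
    Returns a new label map in which merged segments share the smallest id.
    """
    h, w = len(labels), len(labels[0])
    diff_sum, diff_cnt = {}, {}
    # Accumulate color differences over every border pixel pair.
    for y in range(h):
        for x in range(w):
            for yy, xx in ((y, x + 1), (y + 1, x)):
                if yy < h and xx < w and labels[yy][xx] != labels[y][x]:
                    key = tuple(sorted((labels[y][x], labels[yy][xx])))
                    d = math.dist(colors[y][x], colors[yy][xx])
                    diff_sum[key] = diff_sum.get(key, 0.0) + d
                    diff_cnt[key] = diff_cnt.get(key, 0) + 1

    parent = {}

    def find(a):
        parent.setdefault(a, a)
        while parent[a] != a:
            parent[a] = parent[parent[a]]   # path halving
            a = parent[a]
        return a

    for key in sorted(diff_sum):
        if diff_sum[key] / diff_cnt[key] < threshold:
            ra, rb = find(key[0]), find(key[1])
            if ra != rb:
                parent[max(ra, rb)] = min(ra, rb)
    return [[find(v) for v in row] for row in labels]
```

Averaging along the whole border, rather than comparing segment means, keeps the criterion local, which matters when the adaptive colors drift across a large segment.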

We now consider the nonsmooth regions, which have been further classified into horizontal, vertical, +45°, -45°, and complex categories. These categories must be combined with the color composition features to obtain segments of uniform texture. We obtain the final segmentation in two steps. The first combines the color composition and spatial texture features to obtain a crude segmentation, and the second uses an elaborate border refinement procedure, which relies on the color information to obtain accurate and precise border localization.

4.1 Crude Segmentation

The crude segmentation is obtained with a multigrid region growing algorithm. We start with pixels located on a coarse grid in nonsmooth regions and compute the color composition features using a window size equal to twice the grid spacing, i.e., with 50% overlap with adjacent horizontal or vertical windows. Only pixels in nonsmooth regions, and smooth pixels that neighbor nonsmooth pixels, are considered. Note that the color composition features are computed at full resolution; only the merging is carried out on different grids. The merging criterion combines the color composition and spatial texture information: ideally, a pair of pixels belongs to the same region if their color composition features are similar and they belong to the same spatial texture category.
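One grid level of this region growing can be sketched as follows. The feature extractor `feature_at` and the distance `distance` are passed in as hypothetical callables (in the paper, the features are color composition vectors compared with the OCCD, and no threshold value is given). Only nonsmooth grid points are used here, which ignores the smooth pixels bordering nonsmooth ones; a full multigrid implementation would repeat the merging on successively finer grids.

```python
def grid_region_growing(category, feature_at, distance, spacing, threshold):
    """Merge neighboring grid points that share a texture category and have
    similar features; returns {(y, x): region_id} for the grid points.

    category[y][x]          -- texture class, e.g. "smooth", "vertical", "complex"
    feature_at(y, x, half)  -- feature over the window [y-half, y+half) x [x-half, x+half)
    distance(f, g)          -- feature distance (the paper uses the OCCD)
    """
    h, w = len(category), len(category[0])
    pts = [(y, x) for y in range(0, h, spacing) for x in range(0, w, spacing)
           if category[y][x] != "smooth"]
    # Window side = 2 * spacing, so neighboring windows overlap by 50%.
    feats = {p: feature_at(p[0], p[1], spacing) for p in pts}
    parent = {p: p for p in pts}

    def find(p):
        while parent[p] != p:
            parent[p] = parent[parent[p]]
            p = parent[p]
        return p

    for y, x in pts:
        for q in ((y, x + spacing), (y + spacing, x)):   # right and lower neighbors
            if (q in parent
                    and category[y][x] == category[q[0]][q[1]]
                    and distance(feats[(y, x)], feats[q]) < threshold):
                ra, rb = find((y, x)), find(q)
                if ra != rb:
                    parent[rb] = ra
    ids = {}
    return {p: ids.setdefault(find(p), len(ids)) for p in pts}
```

Requiring the same texture category before comparing colors mirrors the criterion stated above: color similarity alone is not enough for two grid points to join a region.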

4.2 Border Refinement Using Adaptive Clustering

Once the crude segmentation is obtained, we refine it by adaptively adjusting the borders using the color composition texture features. The approach is similar to that of the ACA [8]. For each pixel in the image, we use a small window to estimate the pixel texture characteristics and a larger window to obtain a localized estimate of the region characteristics. For each texture segment that the larger window overlaps, we obtain a separate color composition feature vector; that is, we find the average color and percentage for each of the dominant colors. We then use the OCCD criterion to determine which segment has a feature vector closest to the feature vector of the small window, and classify the pixel accordingly. Figure 2(d) shows an example of the resulting crude segmentation, and Fig. 2(e) shows the final segmentation result on the original image.
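The refinement of a single pixel can be sketched as follows. The sketch assumes that each pixel carries its ACA class label and adaptive Lab color, that a segment's feature vector within the large window is formed by averaging the colors of each ACA class present in that segment, and that the OCCD is approximated greedily with a percentage-weighted Euclidean distance. The window half-widths `small_r` and `large_r` and all function names are hypothetical.

```python
import math


def composition(pixels):
    """pixels: list of (class_label, (L, a, b)) -> [(mean_color, percentage)]."""
    groups = {}
    for k, c in pixels:
        groups.setdefault(k, []).append(c)
    n = len(pixels)
    return [(tuple(sum(ch) / len(cs) for ch in zip(*cs)), len(cs) / n)
            for k, cs in sorted(groups.items())]


def occd(f1, f2, eps=1e-12):
    """Greedy OCCD approximation; the distance is assumed to be the
    percentage-weighted Euclidean distance of matched colors."""
    a = [[c, p] for c, p in f1]
    b = [[c, p] for c, p in f2]
    total = 0.0
    while a and b:
        i, j = min(((i, j) for i in range(len(a)) for j in range(len(b))),
                   key=lambda ij: math.dist(a[ij[0]][0], b[ij[1]][0]))
        p = min(a[i][1], b[j][1])
        total += p * math.dist(a[i][0], b[j][0])
        a[i][1] -= p
        b[j][1] -= p
        if a[i][1] <= eps:
            a.pop(i)
        if b[j][1] <= eps:
            b.pop(j)
    return total


def refine_pixel(y, x, seg, aca, colors, small_r=1, large_r=4):
    """Return the crude segment whose color composition inside the large window
    is closest (OCCD) to the composition of the small window around (x, y)."""
    h, w = len(seg), len(seg[0])

    def window(r, keep):
        return [(aca[yy][xx], colors[yy][xx])
                for yy in range(max(0, y - r), min(h, y + r + 1))
                for xx in range(max(0, x - r), min(w, x + r + 1))
                if keep(yy, xx)]

    local = composition(window(small_r, lambda yy, xx: True))
    overlapped = sorted({seg[yy][xx]
                         for yy in range(max(0, y - large_r), min(h, y + large_r + 1))
                         for xx in range(max(0, x - large_r), min(w, x + large_r + 1))})
    return min(overlapped, key=lambda s: occd(
        local, composition(window(large_r, lambda yy, xx: seg[yy][xx] == s))))
```

Applying `refine_pixel` to every pixel (reading from the crude map and writing to a new one) gives one refinement pass; like the ACA, the pass could be repeated with shrinking windows.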

4.3 Object Recognition

The step after segmentation is object recognition, which is a difficult and challenging task. Since we are using natural images, we have tried to recognize three different types of objects in an image: forest (greenery), sea, and sky. For object recognition, we compute the color value of each pixel and check it against a predefined range. If the color value lies within that range, the pixel is displayed in the corresponding color; otherwise, it is displayed in grayscale. For example, if a pixel value lies within the green range, the pixel is displayed in green. In Fig. 2(f), some segmented parts of the image are displayed in green and the remaining segments in grayscale, so those particular segments (objects) can be labeled as greenery. The final object recognition results are shown in Fig. 2(f), (g), and (h).
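The color-range test can be sketched as below. The paper does not specify its color ranges or color space, so the RGB bounds and display colors in `OBJECTS` are hypothetical placeholders, and the grayscale conversion uses the common ITU-R BT.601 luma weights as an assumption.

```python
def to_gray(rgb):
    """Gray value using ITU-R BT.601 luma weights."""
    r, g, b = rgb
    v = round(0.299 * r + 0.587 * g + 0.114 * b)
    return (v, v, v)


def in_range(rgb, bounds):
    """bounds: ((rmin, rmax), (gmin, gmax), (bmin, bmax)), inclusive."""
    return all(lo <= v <= hi for v, (lo, hi) in zip(rgb, bounds))


# Hypothetical ranges and display colors; the paper does not list its values.
OBJECTS = {
    "greenery": (((0, 120), (100, 255), (0, 120)), (0, 255, 0)),
    "sky":      (((100, 200), (150, 230), (200, 255)), (135, 206, 235)),
    "sea":      (((0, 80), (40, 140), (100, 200)), (0, 0, 160)),
}


def highlight(image, name):
    """Paint pixels inside the named object's color range; gray out the rest."""
    bounds, display = OBJECTS[name]
    return [[display if in_range(p, bounds) else to_gray(p) for p in row]
            for row in image]
```

For example, with these placeholder bounds, highlighting "greenery" turns a pixel (30, 140, 40) pure green and shows a pixel (200, 200, 200) in gray. Fixed per-pixel ranges are sensitive to illumination, which is one reason the segments from the earlier steps are useful for interpreting the highlighted pixels.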

5 CONCLUSION

We presented an approach for image segmentation and object recognition that is based on low-level features for color and texture. It is aimed at the segmentation of natural scenes, in which the color and texture of each segment do not typically exhibit uniform statistical characteristics. We also attempted to recognize objects in images. The proposed approach combines knowledge of human perception with an understanding of signal characteristics in order to segment natural scenes into perceptually/semantically uniform regions. It is based on two types of spatially adaptive low-level features. The first describes the local color composition in terms of spatially adaptive dominant colors, and the second describes the spatial characteristics of the grayscale component of the texture. Together, they provide a simple and effective characterization of texture that can be used to obtain robust and, at the same time, accurate and precise segmentations. We used pixel color values for object recognition. The performance of the proposed algorithms has been demonstrated in the domain of photographic images, including low-resolution, degraded, and compressed images.

The image segmentation results can be combined with other segment information, such as location, boundary shape, and size, in order to extract semantic information. Such semantic information may be adequate to classify an image correctly.

Figure 2. (a) Original color image. (b) Color segmentation (ACA). (c) Texture classes. (d) Crude segmentation. (e) Final segmentation (on original image). (f), (g), (h) Recognized forest (greenery), sky, and sea objects.

REFERENCES

[1] Y. Rui, T. S. Huang, and S.-F. Chang, “Image retrieval: Current techniques, promising directions and open issues,” J. Vis. Commun. Image Represent., vol. 10, pp. 39–62, Mar. 1999.

[2] A. W. M. Smeulders, M. Worring, S. Santini, A. Gupta, and R. Jain, “Content-based image retrieval at the end of the early years,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 22, no. 12, pp. 1349–1379, Dec. 2000.

[3] A. Kundu and J.-L. Chen, “Texture classification using QMF bank-based subband decomposition,” Comput. Vis., Graphics, Image Process.: Graph. Models Image Process., vol. 54, pp. 369–384, Sep. 1992.

[4] T. Chang and C.-C. J. Kuo, “Texture analysis and classification with tree-structured wavelet transform,” IEEE Trans. Image Process., vol. 2, no. 10, pp. 429–441, Oct. 1993.

[5] M. Unser, “Texture classification and segmentation using wavelet frames,” IEEE Trans. Image Process., vol. 4, no. 11, pp. 1549–1560, Nov. 1995.

[6] T. Randen and J. H. Husoy, “Texture segmentation using filters with optimized energy separation,” IEEE Trans. Image Process., vol. 8, no. 4, pp. 571–582, Apr. 1999.

[7] G. V. de Wouwer, P. Scheunders, and D. Van Dyck, “Statistical texture characterization from discrete wavelet representations,” IEEE Trans. Image Process., vol. 8, no. 4, pp. 592–598, Apr. 1999.

[8] T. N. Pappas, “An adaptive clustering algorithm for image segmentation,” IEEE Trans. Signal Process., vol. SP-40, no. 4, pp. 901–914, Apr. 1992.

[9] M. M. Chang, M. I. Sezan, and A. M. Tekalp, “Adaptive Bayesian segmentation of color images,” J. Electron. Imag., vol. 3, pp. 404–414, Oct. 1994.

[10] D. Comaniciu and P. Meer, “Robust analysis of feature spaces: Color image segmentation,” in Proc. IEEE Conf. Computer Vision and Pattern Recognition, San Juan, PR, Jun. 1997, pp. 750–755.

[11] J. Luo, R. T. Gray, and H.-C. Lee, “Incorporation of derivative priors in adaptive Bayesian color image segmentation,” in Proc. Int. Conf. Image Processing, vol. III, Chicago, IL, Oct. 1998, pp. 780–784.

[12] Y. Deng and B. S. Manjunath, “Unsupervised segmentation of color-texture regions in images and video,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 23, no. 8, pp. 800–810, Aug. 2001.

[13] S. Belongie, C. Carson, H. Greenspan, and J. Malik, “Color- and texture-based image segmentation using EM and its application to content based image retrieval,” in Proc. ICCV, 1998, pp. 675–682.

[14] D. K. Panjwani and G. Healey, “Markov random-field models for unsupervised segmentation of textured color images,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 17, no. 10, pp. 939–954, Oct. 1995.

[15] J. Wang, “Stochastic relaxation on partitions with connected components and its application to image segmentation,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 20, no. 6, pp. 619–636, Jun. 1998.

[16] L. Shafarenko, M. Petrou, and J. Kittler, “Automatic watershed segmentation of randomly textured color images,” IEEE Trans. Image Process., vol. 6, no. 11, pp. 1530–1544, Nov. 1997.

[17] W. Ma and B. S. Manjunath, “Edge flow: A technique for boundary detection and image segmentation,” IEEE Trans. Image Process., vol. 9, no. 8, pp. 1375–1388, Aug. 2000.

[18] J. Shi and J. Malik, “Normalized cuts and image segmentation,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 22, no. 8, pp. 888–905, Aug. 2000.

[19] A. Mojsilović and B. Rogowitz, “Capturing image semantics with low-level descriptors,” in Proc. Int. Conf. Image Processing, Thessaloniki, Greece, Oct. 2001, pp. 18–21.

[20] J. Portilla and E. P. Simoncelli, “A parametric texture model based on joint statistics of complex wavelet coefficients,” Int. J. Comput. Vis., vol. 40, pp. 49–71, Oct. 2000.

[21] A. Mojsilović, J. Kovačević, J. Hu, R. J. Safranek, and S. K. Ganapathy, “Matching and retrieval based on the vocabulary and grammar of color patterns,” IEEE Trans. Image Process., vol. 9, no. 1, pp. 38–54, Jan. 2000.

[22] W. Y. Ma, Y. Deng, and B. S. Manjunath, “Tools for texture/color based search of images,” in Proc. SPIE Human Vision and Electronic Imaging II, vol. 3016, B. E. Rogowitz and T. N. Pappas, Eds., San Jose, CA, 1997, pp. 496–507.

[23] A. Mojsilović, J. Hu, and E. Soljanin, “Extraction of perceptually important colors and similarity measurement for image matching, retrieval, and analysis,” IEEE Trans. Image Process., vol. 11, no. 11, pp. 1238–1248, Nov. 2002.

[24] M. Swain and D. Ballard, “Color indexing,” Int. J. Comput. Vis., vol. 7, no. 1, pp. 11–32, 1991.

[25] W. Niblack, R. Barber, W. Equitz, M. Flickner, E. Glasman, D. Petkovic, and P. Yanker, “The QBIC project: Querying images by content using color, texture, and shape,” in Proc. SPIE Storage and Retrieval for Image and Video Databases, vol. 1908, San Jose, CA, 1993, pp. 173–187.

[26] B. S. Manjunath, J.-R. Ohm, V. V. Vasudevan, and A. Yamada, “Color and texture descriptors,” IEEE Trans. Circuits Syst. Video Technol., vol. 11, no. 6, pp. 703–715, Jun. 2001.

[27] J. Chen, T. N. Pappas, A. Mojsilović, and B. E. Rogowitz, “Adaptive perceptual color-texture image segmentation,” IEEE Trans. Image Process., vol. 14, no. 10, Oct. 2005.
