International Journal of Scientific & Engineering Research, Volume 4, Issue 12, December-2013 962

ISSN 2229-5518

Multivariate Polynomial Regression in Data Mining: Methodology, Problems and Solutions

Priyanka Sinha


With the increasing use of computers in our day-to-day life, there is a continuous growth in data. These data contain a large amount of known and unknown knowledge which could be utilized for various applications such as knowledge extraction, pattern analysis, data archaeology and data dredging [1], [2]. This extraction of unknown useful information is achieved by the process of Data Mining.

Data mining is considered an instrumental development in the analysis of data across various sectors such as production, business and market analysis. There are two forms of data mining, namely Predictive Data Mining and Descriptive Data Mining.

Descriptive data mining is the process of extracting the features from the given set of values. Predictive Data Mining is the process of estimating or predicting future values from an available set of values. Predictive data mining uses the concept of regression for the estimation of future values.

Regression is the method of estimating a relationship from the given data to depict the nature of the data set. This relationship can then be used for various computations, such as forecasting future values or determining whether a relationship exists among the various variables [3].

Regression analysis is basically composed of four different stages:

1. Identification of the dependent and independent variables.
2. Identification of the form of relationship among the variables, such as linear, parabolic or exponential, by means of a scatter diagram between the dependent and independent variables.
3. Computation of the regression equation for analysis.
4. Error analysis to understand how well the estimated model fits the actual data set.
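Stage 4 can be made concrete with a few standard summary numbers. The following is a minimal Python sketch, assuming the fitted values have already been produced in stage 3; the function name `error_analysis` and the use of the root-mean-square error (RMSE) and the coefficient of determination R² are illustrative choices, not measures defined in this paper.

```python
import math


def error_analysis(observed, predicted):
    """Summarize how well predicted values match observed ones (stage 4 sketch)."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(observed)
    residuals = [y - y_hat for y, y_hat in zip(observed, predicted)]
    sse = sum(e * e for e in residuals)                 # sum of squared errors
    mean_y = sum(observed) / n
    sst = sum((y - mean_y) ** 2 for y in observed)      # total variation about the mean
    rmse = math.sqrt(sse / n)                           # typical size of an error
    # Coefficient of determination; undefined when all observations are equal.
    r_squared = 1.0 - sse / sst if sst > 0 else float("nan")
    return {"sse": sse, "rmse": rmse, "r_squared": r_squared}


# Hypothetical observations and the predictions of some already-fitted model.
report = error_analysis([2.0, 4.0, 6.0, 8.0], [2.5, 3.5, 6.5, 7.5])
print(report)
```

An R² close to 1 means the model explains most of the variation of the observations about their mean.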

• Priyanka Sinha is currently pursuing a Master's degree program in Software Technology at Vellore Institute of Technology, India. PH-91-9629785836. E-mail: er.priyakasinha@gmail.com

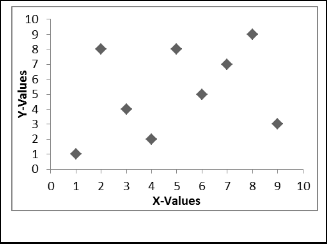

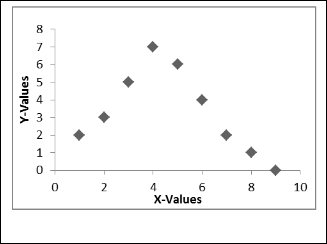

Fig. 1. Scatter plot of x and y values

There can be any number of ways of joining the given points in a scatter graph. The idea of plotting the regression curve is that the deviation of the various points from the curve should be minimal. Writing $y_i$ for the observed value and $\hat{y}_i$ for the value on the curve at $x_i$, if we simply require the total deviation $\sum_{i=1}^{n} (y_i - \hat{y}_i)$ to be minimal (or zero), we can again have many possible curves, because the negative and positive deviations cancel out. So just computing the minimal deviation is not appropriate.
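The cancellation problem can be checked numerically. A minimal Python sketch, assuming a straight-line curve $\hat{y} = a + bx$ and a small hypothetical data set: since $\sum_i (y_i - a - b x_i) = n(\bar{y} - a - b\bar{x})$, every line passing through the point of means $(\bar{x}, \bar{y})$ has a zero sum of signed deviations whatever its slope, so this criterion cannot choose between them. Exact `Fraction` arithmetic is used so that the zeros are exact rather than rounding artefacts.

```python
from fractions import Fraction


def signed_deviation_sum(xs, ys, intercept, slope):
    """Sum of signed deviations y_i - (intercept + slope * x_i) for a straight line."""
    return sum(y - (intercept + slope * x) for x, y in zip(xs, ys))


# Hypothetical data set, stored as exact fractions.
xs = [Fraction(v) for v in (1, 2, 3, 4, 5)]
ys = [Fraction(v) for v in (2, 3, 5, 4, 6)]
x_bar = sum(xs) / len(xs)   # 3
y_bar = sum(ys) / len(ys)   # 4

# Very different lines, all forced through (x_bar, y_bar).
for slope in (Fraction(0), Fraction(1), Fraction(-7), Fraction(25)):
    intercept = y_bar - slope * x_bar
    total = signed_deviation_sum(xs, ys, intercept, slope)
    print(f"slope={slope}: sum of deviations = {total}")  # 0 every time
```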

There are mainly two methods for finding the best-fit curve, namely the Method of Least Squares and the Method of Least Absolute Value. In the Method of Least Square Value (LSV), the sum of the squares of the deviations is taken to be minimum. This sum is referred to as the Sum of Squares of Error:

$$\sum_{i=1}^{n} (y_i - \hat{y}_i)^2 = \text{minimum}. \quad (1)$$

Another method of finding an approximate regression curve is the method of Least Absolute Value (LAV). Here, it is assumed that $\sum_{i=1}^{n} |y_i - \hat{y}_i|$ is minimum. This method has the drawback that finding a line that satisfies this condition is difficult: the absolute value is not differentiable at zero, so there is no closed-form solution analogous to that of least squares. Furthermore, there may be no unique LAV regression curve.
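The difference between the two criteria already shows up in the simplest possible curve, a horizontal line $\hat{y} = c$. In this hypothetical two-point Python sketch, the sum of squares (1) has a single minimizer, the mean, whereas the sum of absolute deviations is flat over a whole interval, illustrating why the LAV curve need not be unique.

```python
def sse(ys, c):
    """Sum of squared errors, criterion (1), for the horizontal line y_hat = c."""
    return sum((y - c) ** 2 for y in ys)


def sae(ys, c):
    """Sum of absolute errors (the LAV criterion) for the same line."""
    return sum(abs(y - c) for y in ys)


ys = [1.0, 3.0]  # two hypothetical observations
for c in (1.0, 1.5, 2.0, 2.5, 3.0):
    print(f"c={c}: SSE={sse(ys, c)}, SAE={sae(ys, c)}")
# SSE is smallest only at c = 2.0 (the mean), whereas SAE equals 2.0 for
# every c between 1 and 3, so the LAV line is not unique for these data.
```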

There are various forms of regression analysis, such as Simple Linear Regression, Multivariate Linear Regression, Polynomial Regression and Multivariate Polynomial Regression.

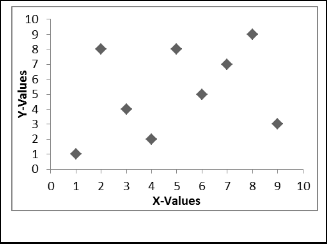

IJSER © 2013 http://www.ijser.org

In Linear Regression, a linear relationship exists between the variables. The linear relationship can be between one response variable and one regressor variable, called simple linear regression, or between one response variable and multiple regressor variables, called multivariate linear regression. The linear regression equation is of the form:

$$y = \beta_0 + \beta_1 x + \varepsilon \quad \text{for simple linear regression} \quad (2)$$

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \cdots + \beta_k x_k + \varepsilon \quad \text{for multivariate linear regression} \quad (3)$$
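For simple linear regression (2), minimizing the sum of squares (1) has a well-known closed-form solution: $\hat{\beta}_1 = \sum_i (x_i - \bar{x})(y_i - \bar{y}) / \sum_i (x_i - \bar{x})^2$ and $\hat{\beta}_0 = \bar{y} - \hat{\beta}_1 \bar{x}$. A minimal Python sketch follows; the function name and the toy data (which lie exactly on $y = 2 + 3x$) are illustrative only.

```python
def fit_simple_linear(xs, ys):
    """Least-squares estimates (b0, b1) for y = b0 + b1 * x + error, as in (2)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    if s_xx == 0:
        raise ValueError("all x values are equal; the slope is undefined")
    b1 = s_xy / s_xx            # slope
    b0 = y_bar - b1 * x_bar     # intercept: the line passes through (x_bar, y_bar)
    return b0, b1


b0, b1 = fit_simple_linear([0, 1, 2, 3, 4], [2, 5, 8, 11, 14])
print(b0, b1)  # 2.0 3.0
```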

The linear regression curve is of the form:

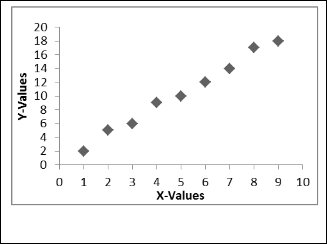

Polynomial Regression is a model used when the response variable is non-linear, i.e., the scatter plot gives a non-linear or curvilinear structure.[3]![]()

General equation for polynomial regression is of form:

(6)

To solve the problem of polynomial regression, it can be

converted to equation of Multivariate Linear Regression with

k Regressor variables of the form:

(8)![]()

Where,

(7)

ε is the error component which follows normal distribution εi ~N(0,σ2)![]()

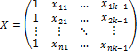

The equation can be expressed in matrix form as :

(9) Where, X, Y, β, ε are the vector matrix form representations

which can be expanded as:

Fig. 2. ScatterPlot in Linear Regressionon x and y values

is the vector of observations (10)

Quadratic Regression is the regression in which there is a quadratic relationship between the response variable and the Regressor variable. Quadratic equation is a special case of pol-![]()

ynomial linear regression where the nature of the curve can be predicted. The equation is of the form:

is the vector of parameters (11)

and regression curve is a parabolic curve.

(4)

is the vector of errors (12)

is the vector array or variables

(13)

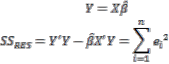

Estimation of parameters is done by Least Square Method. Assuming the fitted regression equation as:

Fig. 2. ScatterPlot in Polynomial Regressionon x and y values

![]()

(14)

Then, by Least Square Method, minimum error is repre- sented as :![]()

In Polynomial Regression, the relationship between the response and the Regressor variable is modelled as the nth or- der polynomial equation. The nature of regression graph pre- diction is not possible in this case. The form is:

(5)

as:![]()

(15) The matrix representation for the above equation is given![]()

(16)
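The conversion in (7) and the error criterion in (15)/(16) can be sketched in a few lines of Python. This is a minimal illustration using plain lists; the helper names and the noise-free data (exactly $y = 1 + 2x + 3x^2$) are hypothetical. In practice a library would normally do the matrix work; for example, NumPy's `numpy.vander` with `increasing=True` builds the same matrix as (13).

```python
def polynomial_design_matrix(xs, k):
    """Rows [1, x_i, x_i**2, ..., x_i**k]: the X of (13) with x_ij = x_i**j as in (7)."""
    if k < 1:
        raise ValueError("polynomial order k must be at least 1")
    return [[x ** j for j in range(k + 1)] for x in xs]


def residual_sum_of_squares(X, ys, beta):
    """SS_Res = (Y - X beta)^T (Y - X beta), equation (16), written with plain lists."""
    total = 0.0
    for row, y in zip(X, ys):
        y_hat = sum(b * x_ij for b, x_ij in zip(beta, row))  # fitted value, as in (14)
        total += (y - y_hat) ** 2
    return total


xs = [1, 2, 3]
X = polynomial_design_matrix(xs, 2)
print(X)  # [[1, 1, 1], [1, 2, 4], [1, 3, 9]]
ys = [6, 17, 34]  # exactly 1 + 2x + 3x^2 at x = 1, 2, 3
print(residual_sum_of_squares(X, ys, [1, 2, 3]))  # 0.0
print(residual_sum_of_squares(X, ys, [1, 2, 2]))  # 98.0
```

Once the polynomial terms are stored as columns of X, nothing in the least-squares machinery depends on them being powers of one variable, which is why (5) can be treated as the multivariate linear model (8).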

By partial differentiation of (16) with respect to the regression model parameters and setting the derivatives to zero, we obtain k + 1 normal equations, $X^{T}X\hat{\beta} = X^{T}Y$, one per parameter. Provided $X^{T}X$ is invertible, they can be solved for the parameters as:

$$\hat{\beta} = (X^{T}X)^{-1}X^{T}Y \quad (17)$$

Substitution of $\hat{\beta}$ gives the error as:

$$SS_{Res} = (Y - X\hat{\beta})^{T}(Y - X\hat{\beta}) = Y^{T}Y - \hat{\beta}^{T}X^{T}Y \quad (18)$$
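The normal equations behind (17) can be solved without forming the inverse explicitly. The sketch below is a minimal, self-contained Python illustration under these assumptions: Gaussian elimination with partial pivoting is swapped in for the matrix inverse (a common numerical choice, not the paper's prescription), the data are hypothetical, and the `anova` helper simply evaluates the quantities laid out in the ANOVA table (Table 1), with its `num_params` argument playing the role of the table's k. For real work, a least-squares routine such as NumPy's `numpy.linalg.lstsq` is more robust than the normal equations.

```python
def solve_linear_system(A, b):
    """Solve A z = b for square A by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(A, b)]  # augmented
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular or nearly singular")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        z[r] = (M[r][n] - sum(M[r][c] * z[c] for c in range(r + 1, n))) / M[r][r]
    return z


def fit_polynomial(xs, ys, k):
    """Order-k least-squares fit from the normal equations X^T X beta = X^T Y, cf. (17)."""
    X = [[x ** j for j in range(k + 1)] for x in xs]  # design matrix as in (13)
    p = k + 1                                         # number of parameters
    XtX = [[sum(row[a] * row[b] for row in X) for b in range(p)] for a in range(p)]
    XtY = [sum(row[a] * y for row, y in zip(X, ys)) for a in range(p)]
    beta = solve_linear_system(XtX, XtY)
    fitted = [sum(b * v for b, v in zip(beta, row)) for row in X]
    return beta, fitted


def anova(ys, fitted, num_params):
    """Entries of the ANOVA table; num_params is Table 1's k (including beta_0)."""
    n = len(ys)
    y_bar = sum(ys) / n
    ss_tot = sum((y - y_bar) ** 2 for y in ys)
    ss_res = sum((y - f) ** 2 for y, f in zip(ys, fitted))
    ss_reg = ss_tot - ss_res  # valid for a least-squares fit that includes beta_0
    ms_reg = ss_reg / (num_params - 1)
    ms_res = ss_res / (n - num_params)
    return {"SSReg": ss_reg, "SSRes": ss_res, "SSTot": ss_tot,
            "MSReg": ms_reg, "MSRes": ms_res, "F": ms_reg / ms_res}


# Hypothetical, slightly noisy data scattered around y = 1 + 2x^2.
xs = [0, 1, 2, 3, 4, 5]
ys = [1.1, 2.9, 9.2, 18.8, 33.1, 51.0]
beta, fitted = fit_polynomial(xs, ys, 2)
table = anova(ys, fitted, num_params=3)
print([round(b, 3) for b in beta])
print({name: round(value, 3) for name, value in table.items()})
```

A large F value, compared against the F distribution with (k - 1, n - k) degrees of freedom, indicates that the regression explains significantly more variation than the residual noise.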

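Here, the residual sum of squares has (n - k) degrees of freedom, where k denotes the number of estimated parameters in the model, including β0. The significance of the regression equation can be estimated by means of an Analysis of Variance table, called the ANOVA table, shown in Table 1.

TABLE 1

ANOVA TABLE FOR REGRESSION PARAMETERS

| Source of Variation (Src) | Degrees of Freedom (DF) | Sum of Squares (SS) | Mean Square (MS) | F-Statistic (F) |
|---|---|---|---|---|
| Regression (Reg) | k - 1 | SSReg | MSReg = SSReg/(k - 1) | F = MSReg/MSRes |
| Residual (Res) | n - k | SSRes | MSRes = SSRes/(n - k) | |
| Total (Tot) | n - 1 | SSTot | MSTot = SSTot/(n - 1) | |

This Multiple Linear Regression model can be used to compute the Polynomial Regression Equation.

Polynomial Regression can be applied on a single regressor variable, called Simple Polynomial Regression, or it can be computed on multiple regressor variables as Multiple Polynomial Regression [3], [4]. A Second Order Multiple Polynomial Regression can be expressed as:

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_{11} x_1^2 + \beta_{22} x_2^2 + \beta_{12} x_1 x_2 + \varepsilon \quad (19)$$

Here, $\beta_1, \beta_2$ are called the linear effect parameters, $\beta_{11}, \beta_{22}$ are called the quadratic effect parameters, and $\beta_{12}$ is called the interaction effect parameter.

The regression function for this is given as:

$$E(y) = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_{11} x_1^2 + \beta_{22} x_2^2 + \beta_{12} x_1 x_2 \quad (20)$$

This is also called the Response Surface. It can again be represented in matrix form as:

$$Y = X\beta + \varepsilon, \quad \text{where row } i \text{ of } X \text{ is } [1,\ x_{i1},\ x_{i2},\ x_{i1}^2,\ x_{i2}^2,\ x_{i1}x_{i2}] \text{ and } \beta = [\beta_0,\ \beta_1,\ \beta_2,\ \beta_{11},\ \beta_{22},\ \beta_{12}]^{T} \quad (21)$$

The parameters for the given equation can be computed as:

$$\hat{\beta} = (X^{T}X)^{-1}X^{T}Y \quad (22)$$

and the computed regression equation is represented as:

$$\hat{y} = \hat{\beta}_0 + \hat{\beta}_1 x_1 + \hat{\beta}_2 x_2 + \hat{\beta}_{11} x_1^2 + \hat{\beta}_{22} x_2^2 + \hat{\beta}_{12} x_1 x_2 \quad (23)$$

The major issue with Multivariate Polynomial Regression is the problem of multicollinearity. When there are multiple regressor variables, there is a high chance that the variables are interdependent; in polynomial regression this arises naturally, because terms such as x and x² are strongly correlated over a range of positive x values. In such cases, due to this relationship among the variables, the parameter estimates become unstable, and the computed regression equation does not properly represent the original data.

Another problem with Multivariate Polynomial Regression is that the higher-degree terms in the equation often do not contribute significantly to the regression equation, so they can be ignored. But if the degree is re-estimated each time and a decision is made on whether each term is required, then all the parameters and equations need to be recomputed each time.

Multicollinearity is a big issue with Multivariate Polynomial Regression, as it prevents proper estimation of the regression curve. To solve this issue, the polynomial equation can be mapped to a higher-order space of independent variables, called the feature space. There are various methods for this, such as Sammon's Mapping, Curvilinear Distance Analysis, Curvilinear Component Analysis and Kernel Principal Component Analysis. These methods transform the related regression variables into independent variables, which results in better estimation of the regression curve.

The problem of recomputing the parameters each time the order is increased can be solved by using an Orthogonal Polynomial Representation:

$$y_i = \alpha_0 P_0(x_i) + \alpha_1 P_1(x_i) + \cdots + \alpha_k P_k(x_i) + \varepsilon_i \quad (24)$$

where $P_j$ is a polynomial of order j and $\sum_{i=1}^{n} P_j(x_i) P_l(x_i) = 0$ for $j \neq l$. Because these columns are orthogonal, $X^{T}X$ is diagonal and each estimate $\hat{\alpha}_j = \sum_{i} P_j(x_i) y_i / \sum_{i} P_j(x_i)^2$ is computed independently, so adding a higher-order term does not change the estimates already obtained.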
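Both the multicollinearity problem and the orthogonal-polynomial remedy (24) can be illustrated on a single regressor. In this minimal Python sketch (hypothetical data; constructing the $P_j$ by Gram-Schmidt over the observed $x_i$ is one common choice, assumed here), the raw terms $x$ and $x^2$ are highly correlated while the orthogonal terms are uncorrelated, and moving from order 2 to order 3 leaves the first three coefficients unchanged. The feature-space methods mentioned above, such as Kernel Principal Component Analysis, are not shown.

```python
import math


def correlation(u, v):
    """Pearson correlation between two equal-length sequences."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    cov = sum((a - mu) * (b - mv) for a, b in zip(u, v))
    su = math.sqrt(sum((a - mu) ** 2 for a in u))
    sv = math.sqrt(sum((b - mv) ** 2 for b in v))
    return cov / (su * sv)


def orthogonal_polynomials(xs, k):
    """Values of P_0..P_k at the data points via Gram-Schmidt on 1, x, ..., x^k.

    Orthogonality is with respect to the sum over the observed x_i (assumed form of (24)).
    """
    basis = []
    for j in range(k + 1):
        v = [float(x) ** j for x in xs]
        for p in basis:  # remove the components along the lower-order polynomials
            coef = sum(a * b for a, b in zip(v, p)) / sum(b * b for b in p)
            v = [a - coef * b for a, b in zip(v, p)]
        basis.append(v)
    return basis


def orthogonal_coefficients(basis, ys):
    """alpha_j = sum P_j(x_i) y_i / sum P_j(x_i)^2, each computed on its own."""
    return [sum(p_i * y for p_i, y in zip(p, ys)) / sum(p_i * p_i for p_i in p)
            for p in basis]


xs = list(range(1, 11))
ys = [3.2, 4.1, 7.9, 12.2, 17.8, 26.1, 34.0, 44.2, 55.9, 68.1]  # hypothetical data

# Raw polynomial terms are strongly correlated: a source of multicollinearity.
print(round(correlation(xs, [x * x for x in xs]), 3))

basis = orthogonal_polynomials(xs, 3)
print(round(correlation(basis[1], basis[2]), 9))  # essentially zero

# Raising the order from 2 to 3 leaves the first three coefficients unchanged.
alpha_2 = orthogonal_coefficients(basis[:3], ys)
alpha_3 = orthogonal_coefficients(basis, ys)
print(alpha_2 == alpha_3[:3])  # True

# The order-2 residuals are orthogonal to 1, x and x^2, so this is the
# ordinary least-squares quadratic, just expressed in a different basis.
fitted_2 = [sum(a * p[i] for a, p in zip(alpha_2, basis[:3])) for i in range(len(xs))]
residuals = [y - f for y, f in zip(ys, fitted_2)]
print([round(sum(r * x ** j for r, x in zip(residuals, xs)), 6) for j in range(3)])
```

With the raw basis 1, x, x², x³, by contrast, adding the cubic term generally changes every earlier coefficient, which is the recomputation cost described above.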

Data mining in real-world problems involves a variety of data sets with different properties. The prediction of values in such problems can be done by various forms of regression. Multivariate Polynomial Regression is used for value prediction when there are multiple variables that contribute to the estimation of values. These variables may be related to each other, and they can be converted into an independent variable set using feature reduction techniques, which can then be used for better regression estimation.


[1] Han, Jiawei and Kamber, Micheline. (2001). Data Mining: Concepts and Techniques. Elsevier.

[2] Fayyad, Usama, Piatetsky-Shapiro, Gregory, and Smyth, Padhraic. (1996). Knowledge Discovery and Data Mining: Towards a Unifying Framework. KDD Proceedings, AAAI.

[3] Gatignon, Hubert. (2010). Statistical Analysis of Management Data, Second Edition. New York: Springer.

[4] Kleinbaum, David G., Kupper, Lawrence L., and Muller, Keith E. Applied Regression Analysis and Multivariable Methods, 4th Edition. California: Thomson.
