International Journal of Scientific & Engineering Research, Volume 5, Issue 4, April-2014 1119

ISSN 2229-5518

Minimizing CO2 Emissions on Cloud Data Centers

Soumya Ranjan Jena Teaching Associate Department of CSE

AKS University, Satna, M.P, India soumyajena1989@gmail.com

Sudarshan Padhy

Director

IMA, Bhubaneswar, Odisha, India director.ima@iomaorissa.ac.in

Abstract— Energy conservation has become a pressing concern in cloud computing, which is one of the most active research areas in computer science and engineering. Cloud computing provides services referred to as Software-as-a-Service (SaaS), Platform-as-a-Service (PaaS) and Infrastructure-as-a-Service (IaaS). As technology advances and network access becomes faster with lower latency, the model of delivering computing power remotely over the Internet will proliferate. Hence, cloud data centers are expected to grow and accumulate a larger fraction of the world’s computing resources. Energy-efficient management of data center resources is therefore a major issue with regard to both operating costs and CO2 emissions to the environment. Energy conservation aims to reduce the CO2 emissions that contribute to the greenhouse effect, so the reduction of power and energy consumption has become a first-order objective in the design of modern computing systems. In this paper we propose two algorithms for energy conservation on cloud-based infrastructure.

Index Terms— Cloud Computing, Energy Conservation, Modified Best Fit Decreasing Algorithm, Power Aware Best Fit Decreasing Algorithm.


Clouds have emerged as the next-generation IT platform for hosting applications in science, business, social networking, and media content delivery. Cloud computing is a distributed computing paradigm that gives users distributed access to scalable virtualized hardware or software [1]. Cloud data centers are the foundation supporting many internet applications, enterprise operations, and scientific computations. Data centers are driven by large-scale computing services such as web searching, online social networking, online office and IT infrastructure outsourcing, and scientific computations [2]. A cloud consists of several elements such as clients, data centers and distributed servers. Its benefits include fault tolerance, high availability, scalability, flexibility, reduced overhead for users, reduced cost of ownership, and on-demand services.

The National Institute of Standards and Technology (NIST) defines cloud computing as a model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction. Large organizations such as Rackspace, Amazon, Google, Microsoft, IBM, and VMware provide cloud computing services such as data storage, application development platforms, data access and computation. They provide the complete infrastructure to manage IT services on an on-demand, self-service basis. Customers can dynamically choose their computing services according to their changing needs at reduced cost, and providers benefit by reusing computing resources. Thus cloud computing is advantageous to both service providers and clients. Several key factors enable cloud computing to lower energy use and carbon emissions from IT [3]:

Dynamic Provisioning: The process of reducing wasted computing resources through better matching of server capacity with actual demand.

Multi-Tenancy: Multi-tenancy in a cloud service relies on policy-driven enforcement, segmentation, isolation, governance, service levels, and chargeback/billing models for different consumer constituencies [4].

Server Utilization: Operating servers at higher utilization rates.

Data Center Efficiency: Utilizing advanced data center infrastructure designs that reduce power loss through improved cooling, power conditioning, etc.

In this section we discuss the parameters that are essential for energy conservation.

Number of users: The number of users of a given application.

Number of servers: The number of production servers needed to operate a given application.

IJSER © 2014 http://www.ijser.org


Device utilization: The computational load that a device (server, network device or storage array) is handling relative to its specified peak load.

Power consumption per server: The average power consumed by a server.

Power consumption for networking and storage: The average power consumed by networking and storage equipment in addition to server power consumption.

Data center power usage effectiveness (PUE): A data center efficiency metric defined as the ratio of the total data center power consumption to the power consumption of the IT equipment. Power usage effectiveness accounts for the power overhead from cooling, power conditioning, lighting and other components of the data center infrastructure.

Data center carbon intensity: The amount of carbon emitted to generate the energy consumed by a data center, which depends on the mixture of primary energy sources and on transmission losses. The carbon emissions of these energy sources are a key factor in carbon intensity.
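As a rough illustration of how the parameters above might combine, the following Python sketch estimates the emissions attributable to an application. The formula (IT power scaled by the power usage effectiveness, converted to energy, then multiplied by carbon intensity) is an assumption made here for illustration and is not given in this paper. The function and parameter names are hypothetical, and networking and storage power is assumed to be expressed per server.

```python
def power_usage_effectiveness(total_facility_power_w, it_power_w):
    """PUE: total data center power divided by IT equipment power."""
    return total_facility_power_w / it_power_w


def estimate_emissions_kg(num_servers, server_power_w, net_storage_power_w,
                          pue, carbon_intensity_kg_per_kwh, hours=8760):
    """Estimate CO2 emissions in kg over `hours` hours of operation.

    Assumption: net_storage_power_w is the networking and storage power
    attributed to each server, added on top of the server's own power.
    """
    # IT load: servers plus their share of networking and storage equipment.
    it_power_w = num_servers * (server_power_w + net_storage_power_w)
    # PUE scales the IT load up to the total facility load (cooling, etc.).
    facility_power_w = it_power_w * pue
    # Convert watts over a number of hours into kilowatt-hours.
    energy_kwh = facility_power_w * hours / 1000.0
    return energy_kwh * carbon_intensity_kg_per_kwh


# Illustrative values only: 10 servers at 200 W each, 50 W of networking
# and storage per server, PUE of 1.5, 0.5 kg CO2 per kWh, 1000 hours.
emissions = estimate_emissions_kg(10, 200.0, 50.0, 1.5, 0.5, hours=1000)
```

Under this simple model, reducing the number of servers, the power usage effectiveness, or the carbon intensity each lowers the estimate proportionally.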

Kyong Hoon Kim et al. [3] have studied power management through Virtual Machine (VM) provisioning, which is a vital technique in cloud-based infrastructure. The authors provide a real-time cloud service framework for requesting a virtual platform and investigate various power-aware VM provisioning schemes based on Dynamic Voltage and Frequency Scaling (DVFS). Olivier Beaumont et al. [4] have studied approximation algorithms for minimizing both the number of resources used and the energy dissipated in the context of service allocation under reliability constraints on clouds. For both optimization problems, the authors give lower bounds and exhibit algorithms that achieve the claimed reliability. Bo Li et al. [5] have demonstrated an energy-efficient approach called “EnaCloud”, which enables dynamic live placement of applications with consideration of energy efficiency in a cloud platform. Here a Virtual Machine encapsulates the application, which supports application scheduling and live migration to minimize the number of running machines and thereby indirectly saves energy. P. Prakash et al. [6] have given a distributed power migration and management algorithm for cloud environments that uses resources effectively and efficiently while ensuring minimal use of power. The proposed algorithm performs computation more efficiently in a scalable cloud computing environment and reduces the power consumed to execute services by up to 30%. Qi Zhang et al. [7] have presented a control-theoretic solution to the dynamic capacity provisioning problem that minimizes the total energy cost while meeting the performance objective in terms of task scheduling delay.

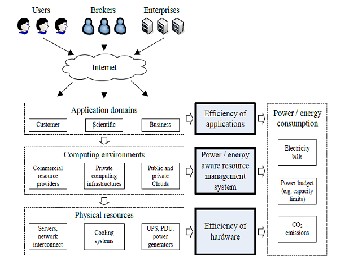

Energy consumption is determined not only by hardware efficiency, but also by the resource management system deployed on the infrastructure and the efficiency of the applications running in the system. The interdependence of different levels of computing systems with regard to energy consumption is shown in Fig. 1. Energy efficiency impacts end-users in terms of resource usage costs, which are typically determined by the Total Cost of Ownership (TCO) incurred by the resource provider. Higher power consumption results not only in higher electricity bills but also in additional requirements for the cooling system and power delivery infrastructure [8][9], i.e., Uninterruptible Power Supplies (UPS), Power Distribution Units (PDU), and so on.

Figure 1: Energy Consumption at Different Levels in Computing Systems

Power consumption and energy management techniques are closely related to each other. There are two types of power consumption in a cloud computing environment [11]: static power consumption and dynamic power consumption. Static power consumption is mainly determined by the type of transistors, logic gates and process technology used in cloud-based infrastructure. Reducing static power requires improvements to the low-level system design.

Dynamic power consumption is created by circuit activity (i.e., transistor switching, changes of values in registers, etc.) and depends mainly on the specific usage scenario, clock rates, and input-output activity. The sources of dynamic power consumption are short-circuit current and switched capacitance. Short-circuit current causes only 10-15% of the total power consumption, and so far no way has been found to reduce this value without compromising performance [12][13].
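The dependence of the switched-capacitance component on clock rate and supply voltage can be illustrated with the standard CMOS approximation P = a·C·V²·f, where a is the switching activity, C the capacitance, V the supply voltage and f the clock frequency. This formula is not stated in this paper and is included here only as a sketch; the function name and the numeric values below are illustrative assumptions.

```python
def dynamic_power(activity, capacitance_f, voltage_v, frequency_hz):
    """Switched-capacitance power in watts: P = a * C * V^2 * f."""
    return activity * capacitance_f * voltage_v ** 2 * frequency_hz


# Illustrative values: lowering voltage and frequency together, as DVFS
# schemes do, reduces dynamic power more than proportionally.
baseline = dynamic_power(0.5, 1e-9, 1.2, 2.0e9)   # full speed
scaled = dynamic_power(0.5, 1e-9, 0.9, 1.5e9)     # 75% voltage and frequency
savings_fraction = 1.0 - scaled / baseline
```

Because voltage enters quadratically, running at 75% of both voltage and frequency leaves roughly 42% of the baseline dynamic power in this model.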

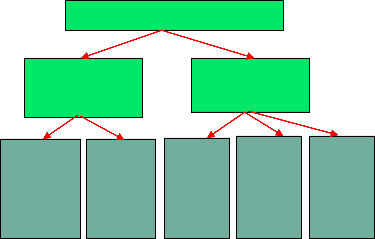

Similarly, power management techniques are of two types: Static Power Management (SPM) and Dynamic Power Management (DPM), as shown in Fig. 2. SPM contains all the optimization methods that are applied at design time at the circuit, logic, architectural, and system levels [13], whereas DPM contains all optimization at the virtualization level, operating system level, and circuit level.

Figure 2: Energy and Power Management (Power Management Techniques divided into Static Power Management (SPM) and Dynamic Power Management (DPM), with architectural-, logic-, circuit-, virtualization-, and operating-system-level optimization)

In this section we propose two algorithms, Modified Best Fit Decreasing (MBFD) and Power Aware Best Fit Decreasing (PABFD), for energy conservation in cloud-based infrastructure.

The fundamental mechanism of the first algorithm, MBFD, is to sort the available Virtual Machines in decreasing order of their current CPU utilizations and to allocate each Virtual Machine to the host that provides the least increase of power consumption. This leverages the heterogeneity of resources by choosing the most power-efficient nodes first. The pseudo-code for the algorithm is given as follows:

Input: Host list, Virtual Machine list

Output: Allocation of Virtual Machines

1. Sort the Virtual Machines in decreasing order of utilization

2. For each Virtual Machine in the Virtual Machine list

3. Set the minimum power to the maximum value and the allocated host to null

4. For each host in the Host list

5. if the host has enough resources for the Virtual Machine then

6. estimate the increase in power caused by placing the Virtual Machine on the host

7. if the estimated power is less than the minimum power then

8. record the host as the allocated host and update the minimum power

9. if an allocated host was found, add the Virtual Machine to the allocation on that host

10. return the allocation

In the Power Aware Best Fit Decreasing (PABFD) algorithm we sort all the Virtual Machines in decreasing order of their current CPU utilizations and allocate each Virtual Machine to the host that provides the least increase of the power consumption caused by the allocation.

Input: Host list, Virtual Machine list

Output: Allocation of Virtual Machines

1. Sort the Virtual Machines in decreasing order of utilization

2. For each Virtual Machine in the Virtual Machine list

3. Set the minimum power to the maximum value and the allocated host to null

4. For each host in the Host list

5. if the host has enough resources for the Virtual Machine then

6. estimate the increase in power caused by placing the Virtual Machine on the host

7. if the estimated power is less than the minimum power then

8. record the host as the allocated host and update the minimum power

9. if an allocated host was found, allocate the Virtual Machine to that host

10. return the allocation
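The placement steps above can be sketched in Python as follows. This is a minimal illustration rather than the authors' implementation: the `Host` class, the linear power model (idle power plus a utilization-proportional part), and the assumption that an empty host is switched off and draws no power are all hypothetical choices made for this example.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    capacity: float      # CPU capacity, e.g. in MIPS
    idle_power: float    # watts drawn when on but unloaded (assumed)
    max_power: float     # watts drawn at full utilization (assumed)
    used: float = 0.0    # CPU currently allocated to VMs


def host_power(host, used):
    """Assumed linear power model; an empty host is treated as switched off."""
    if used <= 0:
        return 0.0
    return host.idle_power + (host.max_power - host.idle_power) * used / host.capacity


def best_fit_decreasing(hosts, vms):
    """Place VMs (name -> CPU demand) on hosts; returns VM name -> host name."""
    allocation = {}
    # Step 1: consider VMs in decreasing order of CPU utilization.
    for vm_name, demand in sorted(vms.items(), key=lambda kv: kv[1], reverse=True):
        min_increase = float("inf")
        chosen = None
        for host in hosts:
            # Only hosts with enough spare capacity are candidates.
            if host.used + demand <= host.capacity:
                increase = host_power(host, host.used + demand) - host_power(host, host.used)
                if increase < min_increase:
                    min_increase = increase
                    chosen = host
        # A VM that fits nowhere is left unallocated.
        if chosen is not None:
            chosen.used += demand
            allocation[vm_name] = chosen.name
    return allocation


hosts = [Host("A", 1000, 100, 200), Host("B", 1000, 70, 120)]
result = best_fit_decreasing(hosts, {"vm1": 600, "vm2": 300, "vm3": 500})
# result == {'vm1': 'B', 'vm3': 'A', 'vm2': 'B'}
```

In this example the more power-efficient host B receives the largest Virtual Machine first, and host A is used only when B lacks the capacity for the next one.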

Data centers have become a cost-effective infrastructure for data storage and for hosting large-scale service applications. However, large data centers today consume significant amounts of energy. This not only raises the operational expenses of cloud providers but also raises environmental concerns about their carbon footprint. To develop a strong and competitive cloud computing environment, we need to reduce data center energy consumption and its associated costs.

We would like to thank Dr. G.K. Pradhan, Dean of Engineering and Technology, AKS University, Satna, and Er. Satyabadi Kumar Jena, Mining Engineer, SECL, for their motivation and inspiration to work on energy conservation. We are also indebted to Mr. Abhaya Kumar Jena, PGT English, GSREMS, Bhubaneswar, for his support of our work.


[1] Soumya Ranjan Jena and Zulfikhar Ahmad, “Response time minimization of different load balancing algorithms in cloud computing environment”, IJCA, Volume 69, No 17, May 2013 edition.

[2] Soumya Ranjan Jena, Sudarshan Padhy and Balendra Kumar Garg, “Performance evaluation of load balancing algorithms on cloud data centers”, IJSER, Volume 5, Issue 3, March-2014, ISSN 2229-5518.

[3] Kyong Hoon Kim, Anton Beloglazov and Rajkumar Buyya, “Power-aware provisioning of cloud resources for real-time services”, ACM, 2009.

[4] Olivier Beaumont, Philippe Duchon, and Paul Renaud Goud, “Approximation algorithms for energy minimization in cloud service allocation under reliability constraints”, Research Report, No-8241, February 2013, Project-Teams CEPAGE, ISSN-02496399.

[5] Bo Li, Jianxin Li, Jinpeng Huai, Tianyu Wo, Qin Li and Liang Zhong, “EnaCloud: An energy-saving application live placement approach for cloud computing environments”, IEEE International Conference on Cloud Computing, 2009.

[6] Prakash, P., G. Kousalya, Shriram K. Vasudevan and Kawshik K. Rangaraju, “Distributive power migration and management algorithm for cloud environment”, Journal of Computer Science, Science Publications, ISSN: 1549-3636.

[7] Qi Zhang, Mohamed Faten Zhani, Shuo Zhang, Quanyan Zhu, Raouf Boutaba, and Joseph L. Hellerstein, “Dynamic energy-aware capacity provisioning for cloud computing environments”, ICAC, San Jose, California, USA, September 18–20, 2012.

[8] Accenture, “Cloud computing and sustainability: The environmental benefits of moving to the cloud”, White paper.

[9] Soumya Ranjan Jena, “Bottle-necks of cloud security- A survey”, IJCA, Volume 85, No 12, January 2014.

[10] Anton Beloglazov and Rajkumar Buyya, “Energy efficient resource management in virtualized cloud data centers”, IEEE, 2010.

[11] Anton Beloglazov and Rajkumar Buyya, “Optimal online deterministic algorithms and adaptive heuristics for energy and performance efficient dynamic consolidation of virtual machines in cloud data centers”, John Wiley & Sons, Ltd, 2012.

[12] Anton Beloglazov, Jemal Abawajy and Rajkumar Buyya, “Energy-aware resource allocation heuristics for efficient management of data centers for cloud computing”, Elsevier Science, 2011.

[13] Anton Beloglazov, “Energy-efficient management of virtual machines in data centers for cloud computing”, Doctor of Philosophy, Department of Computing and Information Systems, The University of Melbourne, February 2013.

Soumya Ranjan Jena is currently working as a Teaching Associate in the Department of CSE at AKS University, Satna. He has completed B.Tech in CSE, M.Tech in IT, and CCNA. He is a member of Cisco Networking Academy. He is the author of “Design and Analysis of Algorithms”, published by Kalyani Publishers, New Delhi, and “Theory of Computation and Application”, published by BPB Publication, New Delhi. He has also published four international research papers in the field of Cloud Computing. His research interests include Cloud Computing and Analytics, Mobile Cloud Computing, Wireless Networks, Sensor Networks, Algorithms, Mobile Computing, etc.

40 years of teaching and research experience he has guided many Ph.D and M.Tech students in the field of Parallel Algorithm, Numerical Analysis, Computational Fluid Dynamics, Computational Finance, Bioinformatics, etc.
