International Journal of Scientific & Engineering Research Volume 2, Issue 6, June-2011 1

ISSN 2229-5518

Face Recognition System Based on Principal Component Analysis (PCA) with Back Propagation Neural Networks (BPNN)

Mohammod Abul Kashem, Md. Nasim Akhter, Shamim Ahmed, and Md. Mahbub Alam

Abstract— Face recognition has received substantial attention from researchers in the biometrics, pattern recognition, and computer vision communities. It can be applied to security at airports, passport verification, checking criminal lists in police departments, visa processing, verification of electoral identification, and card security at ATMs. In this paper, a face recognition system for personal identification and verification using Principal Component Analysis (PCA) with Back Propagation Neural Networks (BPNN) is proposed. The system consists of three basic steps: automatic detection of the human face in an image using BPNN, extraction of various facial features, and face recognition based on PCA with BPNN. PCA reduces the dimensionality of the face image, and the BPNN performs the recognition, for efficient and robust face recognition. This paper also focuses on a face database with different sources of variation, especially pose, expression, accessories, lighting, and backgrounds, which would be used to advance state-of-the-art face recognition technologies aimed at practical applications.

Index Terms— Face Detection, Facial Features Extraction, Face Database, Face Recognition, Increase Acceptance Ratio and Reduce Execution Time.

1 INTRODUCTION

—————————— • ——————————

Within computer vision, face recognition has become increasingly relevant in today's society. The recent interest in face recognition can be attributed to growing commercial interest and to the development of feasible supporting technologies. Major areas of commercial interest include biometrics, law enforcement and surveillance, smart cards, and access control. Unlike other forms of identification such as fingerprint analysis and iris scans, face recognition is user-friendly and non-intrusive. Possible scenarios of face recognition include identification at the front door for home security, recognition at an ATM or in conjunction with a smart card for authentication, and video surveillance for security. With the advent of electronic media, especially the computer, society is increasingly dependent on computers for the processing, storage, and transmission of information. Computers play an important role in every part of modern life and society. As technology advances, people become ever more involved with the computer as the leader of this technological age, and a technological revolution based on it has taken place all over the world. It has opened a new age for humankind, commonly known as the technological world. Computer vision is a part of everyday life. One of its most important goals is to achieve visual recognition ability comparable to that of humans [1],[2],[3].

Face recognition has received substantial attention from researchers in the biometrics, pattern recognition, and computer vision communities. In this paper we propose a computational model of face detection and recognition that is fast, reasonably simple, and accurate in constrained environments such as an office or a household. Face recognition using eigenfaces has been shown to be accurate and fast. When the BPNN technique is combined with PCA, non-linear face images can be recognized easily.

2 OUTLINE OF THE SYSTEM

The design and implementation of the Face Recognition System (FRS) in this paper can be subdivided into three main parts. The first part is face detection: automatic face detection is accomplished using a back propagation neural network. The second part performs extraction of various facial features from the face image using digital image processing and Principal Component Analysis (PCA). The third part consists of the artificial intelligence (face recognition), which is accomplished by a Back Propagation Neural Network (BPNN).

IJSER © 2011 http://www.ijser.org

The first part is the neural network-based face detection described in [4]. The basic goal is to study, implement, train, and test the neural network-based machine learning system. Given as input an arbitrary image, which could be a digitized video signal or a scanned photograph, the system determines whether or not there are any human faces in the image and, if there are, returns an encoding of the location and spatial extent of each human face in the image. The first stage in face detection is skin detection. Skin detection can be performed in a number of color models, such as RGB, YCbCr, HSV, YIQ, YUV, and CIE XYZ. An efficient skin detection algorithm should cover all skin colors (black, brown, white, etc.) and should account for varying lighting conditions. Experiments were performed in the YIQ and YCbCr color models to find the more robust skin color model. This part consists of the YIQ and YCbCr color models, skin detection, blob detection, smoothing the face, and image scaling.

The second part performs extraction of various facial features from the face image using digital image processing, Principal Component Analysis (PCA), and the Back Propagation Neural Network (BPNN). We separately used iris recognition for facial feature extraction. Facial feature extraction consists of localizing the most characteristic face components (eyes, nose, mouth, etc.) within images that depict human faces. This step is essential for the initialization of many face processing techniques such as face tracking, facial expression recognition, and face recognition. Among these, face recognition is a lively research area in which great effort has been made in recent years to design and compare different techniques. The second part consists of face landmarks, iris recognition, and fiducial points.

The third part consists of the artificial intelligence (face recognition), which is accomplished by a Back Propagation Neural Network (BPNN). This paper gives a neural and PCA-based algorithm for efficient and robust face recognition. It is based on the principal component analysis (PCA) technique, which reduces a dataset to a lower dimension while retaining the characteristics of the dataset. Pre-processing, principal component analysis, and the back propagation neural algorithm are the major implementations of this paper.

This paper also focuses on a face database with different sources of variation, especially pose, expression, accessories, and lighting, which would be used to advance state-of-the-art face recognition technologies aimed at practical applications, especially for Asian faces.

3 FACE DETECTION

Face detection takes as input an arbitrary image, which could be a digitized video signal or a scanned photograph, determines whether or not there are any human faces in the image and, if there are, returns an encoding of the location and spatial extent of each human face in the image [5].

3.1 The YIQ & YCbCr color model for skin detection

The first stage in face detection is skin detection. Skin detection can be performed in a number of color models, such as RGB, YCbCr, HSV, YIQ, YUV, and CIE XYZ. An efficient skin detection algorithm should cover all skin colors (black, brown, white, etc.) and should account for varying lighting conditions. Experiments were performed in the YIQ and YCbCr color models to find the more robust skin color model.
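As an illustration of skin detection in these two color models, the following is a minimal sketch rather than the implementation used in the experiments. The conversion weights are the standard NTSC (YIQ) and full-range ITU-R BT.601 (YCbCr) coefficients; the Cb and Cr skin ranges are commonly quoted values assumed here for illustration and are not given in this paper, and the function names are hypothetical.

```python
def rgb_to_yiq(r, g, b):
    """Convert 8-bit RGB to YIQ with the standard NTSC weights."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    i = 0.596 * r - 0.274 * g - 0.322 * b
    q = 0.211 * r - 0.523 * g + 0.312 * b
    return y, i, q


def rgb_to_ycbcr(r, g, b):
    """Convert 8-bit RGB to full-range YCbCr (BT.601 weights)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


# Assumed skin ranges (not from this paper); tune them for the data at hand.
CB_RANGE = (77, 127)
CR_RANGE = (133, 173)


def is_skin_ycbcr(r, g, b):
    """Return True if the pixel's chrominance falls inside the assumed skin box."""
    _, cb, cr = rgb_to_ycbcr(r, g, b)
    return CB_RANGE[0] <= cb <= CB_RANGE[1] and CR_RANGE[0] <= cr <= CR_RANGE[1]


def skin_mask(image):
    """Map an image (rows of (r, g, b) tuples) to a binary skin mask."""
    return [[1 if is_skin_ycbcr(*px) else 0 for px in row] for row in image]
```

Because the test ignores the luminance channel Y, it is less sensitive to brightness changes than a threshold applied directly to RGB values.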

Fig. 1. (a) RGB, (b) RGB to YIQ, and (c) Skin threshold in YIQ.

Fig. 2. (a) RGB to YCbCr, and (b) Skin threshold in

Fig. 3. (a) Skin detection, and (b) face detection.

3.2 Blob detection and Smooth the face

We used an OpenGL blob detection library. This library is designed for finding 'blobs' in an image, i.e. areas whose luminosity is above or below a particular value. It computes their edges and their bounding boxes. The library does not perform blob tracking; it only tries to find all blobs in each frame it is fed. Blobs in the image that are elliptical in shape are detected as faces. The blob detection algorithm draws a rectangle around those blobs by calculating information such as position and center. After the previous steps, the above face would be a possible outcome. When the face is zoomed in, it turns out that the outline of the face is not smooth, so the next step is to smooth the outline of the face.
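The bounding-box part of blob detection can be sketched as a connected-component search over a binary mask. This is a generic illustration, not the library used in this work: the 4-connectivity, the function names, and the mask representation (lists of 0/1 values) are assumptions, and the check for elliptical shape is not included.

```python
from collections import deque


def find_blobs(mask):
    """Return bounding boxes (top, left, bottom, right) of 4-connected regions of 1s."""
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first search over one blob, growing its bounding box.
            top, left, bottom, right = r, c, r, c
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                top, bottom = min(top, y), max(bottom, y)
                left, right = min(left, x), max(right, x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((top, left, bottom, right))
    return boxes


def box_center(box):
    """Return the (row, column) center of a bounding box."""
    top, left, bottom, right = box
    return ((top + bottom) / 2, (left + right) / 2)
```

Each box gives the position and center from which a rectangle around the candidate face can be drawn.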


Fig. 4. (a) Blob detection, and (b) Smooth the face image.

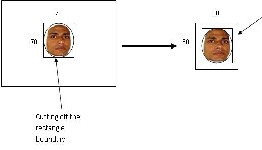

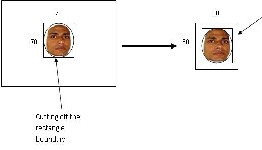

3.3 Image Scaling.

According to the client's requirement, the image is to be scaled to 80*80 pixels with the face centred. The face should contain 3350 pixels, and all the remaining pixels are white. Some edge detection algorithms cannot be applied to color images, so it is also necessary to convert the image to grey scale.

There are four steps in this stage:

1. Scaling the face to the number of pixels which is closest to and greater than 3350.
2. Making the number of pixels of the face exactly equal to 3350.
3. Making the size of the image 80*80 pixels by adding extra white pixels.
4. Converting the image to grey scale.

Fig. 5. Making the size of the image 80*80 pixels.
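Steps 3 and 4 can be sketched as follows. This is an illustrative sketch under stated assumptions: the image is a list of rows of (r, g, b) tuples, the grey value uses the common 0.299/0.587/0.114 luminance weights (the paper does not say which conversion was used), and the function names are hypothetical. Steps 1 and 2, which fix the face at 3350 pixels, are not shown.

```python
WHITE = (255, 255, 255)


def pad_to_square(image, size=80, fill=WHITE):
    """Center the image on a size x size canvas filled with extra white pixels."""
    height, width = len(image), len(image[0])
    if height > size or width > size:
        raise ValueError("image is larger than the target canvas")
    top = (size - height) // 2
    left = (size - width) // 2
    canvas = [[fill] * size for _ in range(size)]
    for y, row in enumerate(image):
        for x, pixel in enumerate(row):
            canvas[top + y][left + x] = pixel
    return canvas


def to_grey(image):
    """Convert rows of (r, g, b) tuples to rows of 8-bit grey levels."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row]
            for row in image]
```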

3.4 Face Detector Algorithms

Training Data Preparation:

- For each face and non-face image:
  o Subtract out an approximation of the shading plane to correct for single light source effects.
  o Rescale the histogram so that every image has the same gray level range.
- Aggregate the data into data sets.

Backpropagation Neural Network:

- Set all weights to random values in the range -1.0 to 1.0.
- Set an input pattern (binary values) to the neurons of the net's input layer.
- Activate each neuron of the following layer:
  o Multiply the weight values of the connections leading to this neuron by the output values of the preceding neurons.
  o Add up these values.
  o Pass the result to an activation function, which computes the output value of this neuron.
- Repeat this until the output layer is reached.
- Compare the calculated output pattern to the desired target pattern and compute a square error value.
- Change the value of each weight using the formula:
  Weight (new) = Weight (old) + Learning Rate * Output Error * Output (Neuron i) * Output (Neuron i + 1) * (1 - Output (Neuron i + 1))
- Go to the first step.
- The algorithm ends if all output patterns match their target patterns.
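The weight update above can be illustrated on the smallest possible case: a single sigmoid output neuron fed directly by the inputs. This is a sketch, not the face detection network itself. The sigmoid activation, the learning rate, the 0.5 decision threshold, the epoch limit, and the logical-OR training set are all assumptions made for the example, and the loop returns to the pattern-presentation step rather than re-randomizing the weights.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(weights, inputs):
    """Weighted sum of the preceding outputs passed through the activation."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)))


def predict(weights, inputs):
    """Threshold the neuron output at 0.5 to obtain a binary pattern."""
    return 1 if forward(weights, inputs) >= 0.5 else 0


def train(samples, learning_rate=0.5, max_epochs=10000, seed=0):
    """Train one sigmoid neuron with the update rule from the list above."""
    rng = random.Random(seed)
    weights = [rng.uniform(-1.0, 1.0) for _ in samples[0][0]]
    for _ in range(max_epochs):
        for inputs, target in samples:
            output = forward(weights, inputs)
            error = target - output
            for i, x in enumerate(inputs):
                # Weight(old) + rate * error * Output(i) * Output(i+1) * (1 - Output(i+1))
                weights[i] += learning_rate * error * x * output * (1.0 - output)
        # Stop once every output pattern matches its target pattern.
        if all(predict(weights, inputs) == target for inputs, target in samples):
            break
    return weights


# Logical OR; the first input is a constant bias term.
OR_SAMPLES = [([1, 0, 0], 0), ([1, 0, 1], 1), ([1, 1, 0], 1), ([1, 1, 1], 1)]
```

A multi-layer network applies the same rule layer by layer, with the output error of a hidden neuron obtained by propagating the errors of the following layer backwards through the weights.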

Apply Face Detector to Image:

- Apply the 20 x 20 pixel view window at every pixel position in the input image.
- For each window region:
  o Apply the linear fit function and the histogram equalization function to the region.
  o Pass the region to the trained neural network to decide whether or not it is a face.
  o Return a face rectangle box scaled by the scale factor, if the region is detected as a face.
- Scale the image down by a factor of 1.2.
- Go to the first step if the image is larger than the 20 x 20 pixel window.
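The scanning loop above can be sketched as a sliding window over an image pyramid. The sketch assumes a grey-level image stored as a list of rows, a nearest-neighbour downscale, and a caller-supplied `is_face` function standing in for the trained network; all of these names are hypothetical. The linear fit and histogram equalization steps are omitted, and the scan continues while the image is at least as large as the window.

```python
def downscale(image, factor):
    """Nearest-neighbour resize of a 2-D list to 1/factor of its size."""
    height, width = len(image), len(image[0])
    new_h, new_w = int(height / factor), int(width / factor)
    return [[image[min(height - 1, int(y * factor))][min(width - 1, int(x * factor))]
             for x in range(new_w)]
            for y in range(new_h)]


def detect_faces(image, is_face, window=20, scale=1.2):
    """Return (x, y, width, height) boxes in original-image coordinates."""
    detections = []
    factor = 1.0
    current = image
    while len(current) >= window and len(current[0]) >= window:
        for y in range(len(current) - window + 1):
            for x in range(len(current[0]) - window + 1):
                region = [row[x:x + window] for row in current[y:y + window]]
                if is_face(region):
                    # Scale the rectangle back by the accumulated factor.
                    side = int(window * factor)
                    detections.append((int(x * factor), int(y * factor), side, side))
        factor *= scale
        current = downscale(image, factor)
    return detections
```

For a 26 x 26 image the loop visits two pyramid levels (26 x 26 and 21 x 21), that is 49 + 4 = 53 windows.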

4 FACIAL FEATURE EXTRACTION

This part performs extraction of various facial features from the face image using digital image processing and Principal Component Analysis (PCA). We separately used iris recognition for facial feature extraction. Facial feature extraction consists of localizing the most characteristic face components (eyes, nose, mouth, etc.) within images that depict human faces. This step is essential for the initialization of many face processing techniques such as face tracking, facial expression recognition, and face recognition. Among these, face recognition is a lively research area in which great effort has been made in recent years to design and compare different techniques.

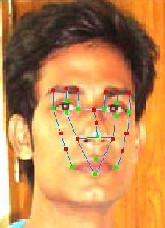

4.1 Face Landmarks (Nodal points)

Facial features can be extracted according to various face landmarks on human face. Every face has numerous, dis- tinguishable landmarks, the different peaks and valleys that make up facial features. It defines these landmarks as nodal points. Each human face has approximately 80 nodal points. Some of these measured by the software are:

IJSER © 2011 http://www.ijser.org

International Journal of Scientific & Engineering Research Volume 2, Issue 6, June-2011 4

ISSN 2229-5518

1. Distance between the eyes.

2. Width of the nose.

3. Depth of the eye sockets.

4. The shape of the cheekbones.

5. The length of the jaw line.

6. Height & Width of forehead and total face.

7. Lip height.

Fig. 7. Steps involved in detection of inner pupil boundary and outer iris localization.

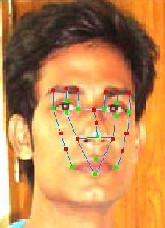

Fig. 6. Various Face Landmarks (nodal points).

8. Lip width.

9. Distance between nose & mouth.

10. Face skin marks, etc.
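Several of the measurements listed above are plain distances between landmark coordinates. The sketch below shows how such a feature set could be computed once the landmarks are known. The landmark names, the (x, y) pixel convention, and the choice of five measurements are assumptions made for illustration, not the software's actual interface.

```python
import math


def distance(p, q):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def midpoint(p, q):
    return ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2)


def nodal_features(landmarks):
    """Compute a few nodal-point measurements from a dict of named landmarks."""
    mouth_center = midpoint(landmarks["lip_top"], landmarks["lip_bottom"])
    return {
        "eye_distance": distance(landmarks["left_eye"], landmarks["right_eye"]),
        "nose_width": distance(landmarks["nose_left"], landmarks["nose_right"]),
        "lip_height": distance(landmarks["lip_top"], landmarks["lip_bottom"]),
        "lip_width": distance(landmarks["mouth_left"], landmarks["mouth_right"]),
        "nose_to_mouth": distance(landmarks["nose_base"], mouth_center),
    }
```

Dividing each value by the eye distance would make the features independent of image scale, if that is desired.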

4.2 Iris Recognition for facial feature extraction.

The iris is an externally visible, yet protected organ whose unique epigenetic pattern remains stable throughout adult life. These characteristics make it very attractive for use as a biometric for identifying individuals.

4.3 Image Acquisition

The iris image should be rich in iris texture, as the feature extraction stage depends upon the image quality. Thus, the image is acquired by a 3CCD camera placed at a distance of approximately 9 cm from the user's eye. The approximate distance between the user and the source of light is about 12 cm.

4.4 Iris Localization

The acquired iris image has to be preprocessed to detect the iris, which is an annular portion between the pupil (inner boundary) and the sclera (outer boundary). The first step in iris localization is to detect the pupil, which is the black circular part surrounded by iris tissues. The center of the pupil can be used to detect the outer radius of iris patterns. The important steps involved are:

1. Pupil detection.
2. Outer iris localization.

Fig. 8. Iris normalization

4.5 Iris Normalization

Localizing the iris in an image delineates the annular portion from the rest of the image. The rubber sheet model suggested by Daugman takes into consideration the possibility of pupil dilation and of the iris appearing at different sizes in different images.

4.6 Feature Extraction

Corners in the normalized iris image can be used to extract features for distinguishing two iris images. The steps involved in the corner detection algorithm are as follows:

S1: The normalized iris image is used to detect corners using the covariance matrix.

S2: The detected corners of the database and query images are used to find the cross correlation coefficient.

S3: If the number of correlation coefficients between the detected corners of the two images is greater than a threshold value, the candidate is accepted by the system.

Fig. 9. Detection of corners.

4.7 Corner detection

Corner points can be detected from the normalized iris image using the covariance matrix of the change in intensity at each point. A 3x3 window centered on point p is considered to find the covariance matrix $M_{cv}$:

$$M_{cv} = \begin{bmatrix} \sum_{I} D_x^2 & \sum_{I} D_x D_y \\ \sum_{I} D_x D_y & \sum_{I} D_y^2 \end{bmatrix}$$

where $D_x$ and $D_y$ are the changes in intensity $I$ in the x and y directions, and the sums run over the 3x3 window.
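A minimal sketch of corner detection with the covariance matrix over a 3x3 window follows. The paper does not state how the intensity changes are computed or how the matrix is turned into a corner decision, so two assumptions are made here: central differences for the changes in x and y, and a threshold on the smaller eigenvalue of the matrix (a point is a corner only if intensity changes strongly in both directions). Function names are hypothetical.

```python
import math


def covariance_matrix(image, y, x):
    """Sum products of intensity changes over the 3x3 window centered on (y, x)."""
    sxx = sxy = syy = 0.0
    for j in range(y - 1, y + 2):
        for i in range(x - 1, x + 2):
            dx = image[j][i + 1] - image[j][i - 1]  # change along x (assumed central difference)
            dy = image[j + 1][i] - image[j - 1][i]  # change along y
            sxx += dx * dx
            sxy += dx * dy
            syy += dy * dy
    return sxx, sxy, syy


def min_eigenvalue(sxx, sxy, syy):
    """Smaller eigenvalue of the symmetric matrix [[sxx, sxy], [sxy, syy]]."""
    root = math.sqrt((sxx - syy) ** 2 + 4.0 * sxy * sxy)
    return (sxx + syy - root) / 2.0


def detect_corners(image, threshold):
    """Return (row, column) points whose smaller eigenvalue exceeds the threshold."""
    height, width = len(image), len(image[0])
    corners = []
    # Stay two pixels from the border so every difference is defined.
    for y in range(2, height - 2):
        for x in range(2, width - 2):
            if min_eigenvalue(*covariance_matrix(image, y, x)) > threshold:
                corners.append((y, x))
    return corners
```

On a straight edge one eigenvalue is zero, so only points where two edges meet pass the test.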

Fig. 10. A face is described by 27 fiducial points: 13 are directly extracted from the image (in green), 14 are inferred from the former ones (in red).

4.8 From eye centers to fiducial points

In this section we show how, given the eye centers, we derive a set of 27 characteristic points (fiducial points): three points on each eyebrow; the tip, the lateral extremes, and the vertical mid-point of the nose; the eye and lip corners and their upper and lower mid-points; the midpoint between the two eyes; and four points on the cheeks (see Fig. 10).

4.9 Nose

The nose is characterized by very simple and generic properties: the nose has a "base" whose gray levels contrast significantly with the neighboring regions; moreover, the nose profile can be characterized as the set of points with the highest symmetry and high luminance values. We can therefore identify the nose tip as the point that lies on the nose profile, above the nose baseline, and that corresponds to the brightest gray level. These considerations allow the nose tip to be localized robustly (see Fig. 11).

Fig. 11. Examples of nose processing. The black horizontal line indicates the nose base; the black dots along the nose are the points of maximal symmetry along each row; the red line is the vertical axis approximating those points; the green marker indicates the nose tip.

4.10 Mouth

Regarding the mouth, our goal is to locate its corners and its upper and lower mid-points. To this aim, we use a snake [Hamarneh, 2000] to determine the entire contour, since we verified that snakes can robustly describe the very different shapes that mouths can assume. To make the snake converge, its initialization is fundamental; therefore the algorithm estimates the mouth corners and anchors the snake to them. First, we represent the mouth subimage in the YCbCr color space and apply the following transformation:

$$MM = (255 - (C_r - C_b)) \cdot C_r^2$$

MM is a mouth map that highlights the region corresponding to the lips. MM is then binarized by setting the highest 20% of its values to 1, and the mouth corners are determined by taking the most lateral extremes (see Fig. 12).

Fig. 12. Mouth corners estimation: a) mouth subimage b) mouth map c) binarized mouth map d) mouth corners.

Fig. 13. Snake evolution: a) snake initialization b) final snake position c) mouth fiducial points.
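The mouth map and its binarization can be sketched as follows, reading the transformation as MM = (255 - (Cr - Cb)) * Cr^2. The inputs are assumed to be the Cb and Cr planes of the mouth subimage as lists of rows; the function names and the handling of ties at the cutoff (all values equal to the cutoff are kept) are assumptions of this sketch.

```python
def mouth_map(cb, cr):
    """Compute MM = (255 - (Cr - Cb)) * Cr^2 for every pixel."""
    return [[(255 - (r - b)) * r * r for b, r in zip(row_b, row_r)]
            for row_b, row_r in zip(cb, cr)]


def binarize_top_fraction(values, fraction=0.2):
    """Set to 1 the pixels holding the highest `fraction` of the values."""
    flat = sorted((v for row in values for v in row), reverse=True)
    keep = max(1, int(len(flat) * fraction))
    cutoff = flat[keep - 1]
    return [[1 if v >= cutoff else 0 for v in row] for row in values]


def mouth_corners(binary):
    """Return the leftmost and rightmost (x, y) points of the binarized map."""
    points = [(x, y) for y, row in enumerate(binary)
              for x, v in enumerate(row) if v]
    return min(points), max(points)
```

The two returned points are the lateral extremes to which a snake could be anchored.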

5 FACE DATABASE

Our face database contains large-scale face images with different sources of variation, especially pose, expression, accessories, and lighting, which would be used to advance state-of-the-art face recognition technologies aimed at practical applications, especially for Asian faces. The database contains 99,594 images of 1040 individuals (595 males and 445 females) with varying pose, expression, accessory, and lighting.

Fig. 14. Different kinds of poses.

5.1 Poses

In our face database we consider various kinds of poses: front, left, right, left corner, right corner, left up, right up, front up, front down, left down, and right down.

Fig. 15. Different kinds of expressions (panels: Normal, Happiness, Anger, Disgust, Sadness, Fear, Surprise, Eyes closed).

5.2 Facial expression

A database of facial expression images was collected. Ten expressors posed 3 or 4 examples of each of the six basic facial expressions (happiness, sadness, surprise, anger, disgust, fear) and a neutral face, for a total of 219 images of facial expressions.

5.3 Varying lighting conditions

Lighting conditions affect facial images. Images with varying lighting conditions are recommended for image processing and face recognition under natural illumination, so facial images should be stored in the face database under varying lighting conditions.

Fig. 16. Face images with varying lighting conditions.

Fig. 17. Face images with different kinds of accessories.

5.4 Accessories: Glasses and Caps

Several kinds of glasses and hats were prepared in the room and used as accessories to further increase the diversity of the database. The glasses consisted of dark-frame glasses, thin, white-frame glasses, and frameless glasses. The hats have brims of different sizes and shapes.

5.5 Backgrounds

Unless otherwise stated, face images are captured with a blue cloth as the default background. However, in practical applications many cameras work in auto-white-balance mode, which may change the face appearance considerably. Therefore, it is necessary to mimic this situation in the database. We consider only the cases in which the background color is changed. Concretely, five sheets of cloth in five different solid colors (blue, white, black, red, and yellow) are used.


6 FACE RECOGNITION

This part consists of the artificial intelligence (face recognition), which is accomplished by Principal Component Analysis (PCA) with a Back Propagation Neural Network (BPNN). This paper gives a neural and PCA-based algorithm for efficient and robust face recognition. A face recognition system [11] is a computer vision system that automatically identifies a human face from database images. The face recognition problem is challenging because it needs to account for all possible appearance variation caused by changes in illumination, facial features, occlusions, etc. The approach is based on the principal component analysis (PCA) technique, which reduces a dataset to a lower dimension while retaining the characteristics of the dataset. Pre-processing, principal component analysis, and the back propagation neural algorithm are the major implementations of this paper. Pre-processing is done for two purposes:

1. To reduce noise and possible convolution effects of the interfering system.
2. To transform the image into a different space where classification may prove easier by exploitation of certain features.

PCA is a common statistical technique for finding patterns in high-dimensional data [6]. Feature extraction, also called dimensionality reduction, is done by PCA for three main purposes:

1. To reduce the dimension of the data to more tractable limits.
2. To capture salient class-specific features of the data.
3. To eliminate redundancy.

Fig. 19. Outline of Face Recognition System by using PCA & Backpropagation Neural Network.
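The dimensionality reduction performed by PCA can be sketched on small vectors as follows. This is an illustrative sketch, not the system's implementation: it finds the leading eigenvectors of the covariance matrix by power iteration with deflation, which is only practical for low-dimensional data (for full face images the covariance matrix is very large and other numerical methods are normally used). Function names and the iteration count are assumptions.

```python
import math


def mean_vector(data):
    return [sum(column) / len(data) for column in zip(*data)]


def covariance(data, mean):
    """Sample covariance matrix of the mean-centered data."""
    centered = [[v - m for v, m in zip(row, mean)] for row in data]
    dim = len(mean)
    return [[sum(r[i] * r[j] for r in centered) / (len(data) - 1)
             for j in range(dim)] for i in range(dim)]


def top_component(matrix, iterations=200):
    """Leading eigenvalue and eigenvector by power iteration."""
    dim = len(matrix)
    vec = [1.0 / math.sqrt(dim)] * dim
    for _ in range(iterations):
        nxt = [sum(matrix[i][j] * vec[j] for j in range(dim)) for i in range(dim)]
        norm = math.sqrt(sum(v * v for v in nxt))
        if norm == 0.0:
            break
        vec = [v / norm for v in nxt]
    value = sum(vec[i] * sum(matrix[i][j] * vec[j] for j in range(dim))
                for i in range(dim))
    return value, vec


def pca(data, n_components):
    """Return the mean and the first n_components principal directions."""
    mean = mean_vector(data)
    cov = covariance(data, mean)
    components = []
    for _ in range(n_components):
        value, vec = top_component(cov)
        components.append(vec)
        # Deflate: remove the variance explained by the component just found.
        cov = [[cov[i][j] - value * vec[i] * vec[j] for j in range(len(vec))]
               for i in range(len(vec))]
    return mean, components


def project(sample, mean, components):
    """Coordinates of a sample in the reduced space."""
    centered = [s - m for s, m in zip(sample, mean)]
    return [sum(c * x for c, x in zip(comp, centered)) for comp in components]
```

The projected coordinates are the low-dimensional features that a classifier such as the BPNN would receive in place of the raw pixel values.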

6.1 Experimentation and Results

When the BPNN technique is combined with PCA, non-linear face images can be recognized easily. One of the images, shown in Fig. 20 (a), is taken as the input image. The image recognized by BPNN and the output image reconstructed by PCA are shown in Fig. 20 (b) and Fig. 20 (c).

Fig. 20. (a) Input image, (b) Recognized image by BPNN, (c) Recognized image by PCA method.

Fig. 18. Face images with different kinds of backgrounds.


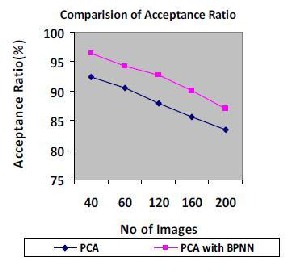

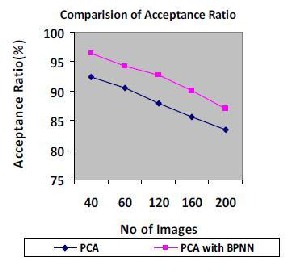

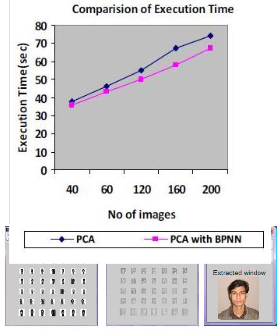

Table 1 shows the comparison of acceptance ratio and execution time values for 40, 80, 120, 160, and 200 images of the Yale database. A graphical analysis of the same is shown in Fig. 22.

TABLE 1

COMPARISON OF ACCEPTANCE RATIO AND EXECUTION TIME FOR DATABASE IMAGES.

| No. of Images | Acceptance ratio (%), PCA | Acceptance ratio (%), PCA with BPNN | Execution time (seconds), PCA | Execution time (seconds), PCA with BPNN |
| --- | --- | --- | --- | --- |
| 40 | 92.4 | 96.5 | 38 | 36 |
| 80 | 90.6 | 94.3 | 46 | 43 |
| 120 | 87.9 | 92.8 | 55 | 50 |
| 160 | 85.7 | 90.2 | 67 | 58 |
| 200 | 83.5 | 87.1 | 74 | 67 |

TABLE 2

USING BPNN THE ACCEPTANCE RATIO AND EXECUTION TIME FOR DATABASE IMAGES

| No. of Images | Acceptance ratio (%), BPNN | Execution time (seconds), BPNN |
| --- | --- | --- |
| 40 | 94.2 | 37 |
| 80 | 92.3 | 45 |
| 120 | 90.6 | 52 |
| 160 | 87.9 | 65 |
| 200 | 85.5 | 71 |

Fig. 22. comparison of Acceptance ratio and execution time.

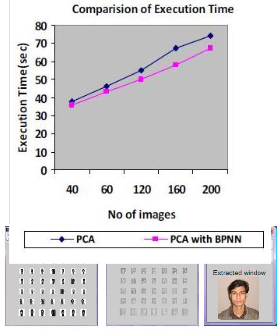

Fig. 21. (a) Training set, (b) Eigenfaces, (c) Recognized image by PCA with BPNN method.


7 CONCLUSION

In this paper, face recognition using eigenfaces has been shown to be accurate and fast. When the BPNN technique is combined with PCA, non-linear face images can be recognized easily. Hence it is concluded that this method achieves an acceptance ratio of more than 90% for up to 160 database images, falling to 87.1% for 200 images, with an execution time of 36 to 67 seconds (Table 1). Face recognition can be applied to security at airports, passport verification, checking criminal lists in police departments, visa processing, verification of electoral identification, and card security at ATMs. Face recognition has received substantial attention from researchers in the biometrics, pattern recognition, and computer vision communities. In this paper we proposed a computational model of face detection and recognition that is fast, reasonably simple, and accurate in constrained environments such as an office or a household.

REFERENCES

[1] Jain, Fundamentals of Digital Image Processing, Prentice-Hall Inc., 1982.

[2] E. Trucco and A. Verri, Introductory Techniques for 3-D Computer Vision, Prentice-Hall Inc., 1998.

[3] L. G. Shapiro and G. C. Stockman, Computer Vision, Prentice-Hall Inc., 2001.

[4] Rowley, H., Baluja, S. and Kanade, T., Neural Network-Based Face Detection. IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 20, No. 1, January, 1998, pp. 23-38. http://www.ri.cmu.edu/pubs/pub_926_text.html

[5] Duda, R.O., Hart, P.E. and Stork, D.G. Pattern Classification. Wiley, New York, 2001.

[6] B. K. Gunturk, A. U. Batur, and Y. Altunbasak, (2003) “Eigenface-domain super-resolution for face recognition,” IEEE Transactions on Image Processing, vol. 12, no. 5, pp. 597-606.

[7] M. A. Turk and A. P. Pentland, (1991) “Eigenfaces for Recognition,” Journal of Cognitive Neuroscience, vol. 3, pp. 71-86.

[8] T. Yahagi and H. Takano, (1994) “Face Recognition using neural networks with multiple combinations of categories,” International Journal of Electronics Information and Communication Engineering, vol. J77-D-II, no. 11, pp. 2151-2159.

[9] S. Lawrence, C. L. Giles, A. C. Tsoi, and A. D. Back, (1993) IEEE Transactions on Neural Networks, vol. 8, no. 1, pp. 98-113.

[10] C. M. Bishop, (1995) “Neural Networks for Pattern Recognition,” London, U.K.: Oxford University Press.

[11] Kailash J. Karande and Sanjay N. Talbar, “Independent Component Analysis of Edge Information for Face Recognition,” International Journal of Image Processing, Volume (3) : Issue (3), pp. 120-131.

[12] Fernando De La Torre, Michael J. Black, 2003 International Conference on Computer Vision (ICCV’2001), Vancouver, Canada, July 2001. IEEE 2001.

[13] Turk and Pentland, “Face Recognition Using Eigenfaces, Method Eigenfaces”, IEEE CH2983-5/91, pp. 586-591.

[14] Douglas Lyon. Image Processing in Java, Prentice Hall, Upper Saddle River, NJ. 1998.

[15] Matthew T. Rubino, Edge Detection Algorithms, <HTTP://WWW.CCS.NEU.EDU/HOME/MTRUBS/HTML/EDGEDETECTION.HTML>

[16] H. Schneiderman, “Learning Statistical Structure for Object Detection”, Computer Analysis of Images and Patterns (CAIP), 2003, Springer-Verlag, August, 2003.

[17] Yang and Huang 1994. “Human face detection in a complex background.” Pattern Recognition, Vol. 27, pp. 53-63.

[18] Paul Viola and Michael Jones. Rapid object detection using a boosted cascade of simple features. In CVPR, 2001, <http://citeseer.nj.nec.com/viola01rapid.html>

[19] Angela Jarvis, <http://www.forensic-evidence.com/site/ID/facialrecog.html>

[20] Konrad Rzeszutek, <http://darnok.com/projects/face-recognition>

Mohammad Abul Kashem has been serving as an Associate Professor and Head of the Department, Department of Computer Science and Engineering (CSE), Dhaka University of Engineering & Technology (DUET), Gazipur, Bangladesh. Field of interest: Speech Signal Processing. E-mail: drkashem11@duet.ac.bd.

Md. Nasim Akhter has been serving as an Assistant Professor, Department of Computer Science and Engineering (CSE), Dhaka University of Engineering & Technology (DUET), Gazipur, Bangladesh. Field of interest: Operating System, Data Communication. E-mail: nasimntu@yahoo.com.

Shamim Ahmed is an M.Sc. in Engineering student, Department of Computer Science and Engineering (CSE), Dhaka University of Engineering & Technology (DUET), Gazipur, Bangladesh. He received his B.Sc. in Engineering degree in CSE in 2010 from DUET, Gazipur, Bangladesh. He joined Dhaka International University (DIU) as a lecturer (part time) in the Department of Computer Science and Engineering (CSE) in January 2011, after completing his B.Sc. in Engg. (CSE). He currently teaches courses on Data Communication and Computer Networking, Object Oriented Programming using C++ and Java, Artificial Intelligence, and Formal Language and Automata Theory. Field of interest: Digital Image Processing, Artificial Neural Network, Artificial Intelligence & Visual Effects. E-mail: shamim.6feb@gmail.com.

Md. Mahbub Alam has been serving as a Lecturer, Department of Computer Science and Engineering (CSE), Dhaka University of Engineering & Technology (DUET), Gazipur, Bangladesh. He received his B.Sc. in Engineering degree in CSE in 2009 from DUET, Gazipur, Bangladesh. Field of interest: Digital Image Processing, Neural Network, Artificial Intelligence. E-mail: emahbub.cse@gmail.com.
