Inte rnatio nal Jo urnal o f Sc ie ntific & Eng inee ring Re se arc h, Vo lume 3, Issue 3, Marc h-2012 1

ISS N 2229-5518

Face Recognition Using Combined Global Local Preserving Projections and Compared With Various Methods

Nisar Hundewale

Abs tract— In appearance-based methods, w e usually represent an image of size n x m pixels by a vector in an n x m - d imensional space. How ever, these n x m-dimensional spaces are too large to allow robust and f ast f ace recognition. A common w ay to attempt to resolve this problem is to use dimensionality reduction techniques. The most prominent existing techniques f or this purpose are Principal Co mponent Analysis (PCA) and Locality Preserving Projections (LPP). We propose a new combined approach f or f ace recognition w hich aims to integrate the advantages of the global f eature extraction technique like PCA and the local f eature extraction techn ique LPP . It has been introduced here (CGLPP- Co mbined Global Local Preserving Projections. Finally, Co mparison is done w ith various recognition methods. Experimental evaluations are perf ormed on the ORL and UMIST data sets w ith 400 images and 40 subjects.

Inde x Terms— CGLPP, Dimensionality reduction, Face recognition, Locality Preserving Projection, ORL, Principal Co mponent Analysis, UMIST.

—————————— ——————————

1 INTRODUCTION

ACE recognition research started in the late70s and has become one of the active and exciting research are as in computer science and information technology areas since

1990. Basically, there are two major approaches in automatic

recognition of faces by computer, namely, constituent- base recognition (we called as local feature approach) and face- based recognition (we called as global feature approach).

Face recognition can significantly impact authentication, monitoring and indexing applications. Much research on face recognition using global or local information has been done earlier. By using global feature preservation techniques like principal component analysis (PCA) [1] the authors can effectively preserve only the Euclidean structure of face space that suffers lack of local features, but which may play a major role in some applications. On the other hand, the local feature preservation technique namely locality preserving projections (LPP) preserves local information and obtains a face subspace that best detects the essential face manifold structure [2]. However, it also suffers loss in global features which may also be important in some of the applications.

Here in the proposed method, we are using both global and

local features for recognition. A new combined approach for

recognition faces that integrates the advantages of the global feature extraction technique like PCA and the local feature

proposed method CGLPP – Combined Global Local Preserving Projections. The experimental results are shown in Section 4. Finally, we give concluding remarks and future work in Section 5.

2 B RIEF R EVIEW OF PC A AND LPP

2.1 Principal Component Analysi s

Principal Component Analysis is a well-known method for dimension reduction. PCA has been used widely in computer vision applications. PCA is a mathematical procedure that transforms a larger number of (possibly) correlated variables into a smaller number of uncorrelated variables called prin- cipal components. The objective of principal component ana l- ysis is to reduce the dimensionality (number of variables) of the dataset but retain most of the original variability in the data. It is a classical statistical method widely used in data analysis and compression. Principal component analysis is based on the statistical representation of a random variable. A

2-D facial image can be represented as 1 -D vector by concate- nating each row (or column) into a long thin vector. Let’s su p- pose we have M vectors of size N (= rows of image £ columns of image) representing a set of sampled images. Pi’s represent the pixel values.

extraction technique LPP has been introduced here (CGLPP -

x P ...P i , i 1...M

(1)

i 1 N

Combined Global Local Preserving Projections).In Prop osed

method, the authors had to extract discriminating features

that yields improved facial image recognition results. This has

The images are mean centered by subtracting the mean image

from each image vector. Let m represent the mean image.

been verified by making a fair comparison with the existing methods like PCA, LPP.

The rest of this paper is organized as follows: In Section 2,

we give a brief review of PCA and LPP. Section 3, The

m 1

M

M

xi

i 1

(2)

———— ——— ——— ——— ———

And let Wi be defined as mean centered image

Wi x m

(3)

Nisar Hundewale, Ph.D., Sr. IEEE Member

College of Computers and IT, Taif University, Taif, Saudi Arabia

nisar@computer.org

Our goal is to find a set of ei ’s which have the largest poss ible projection onto each of the Wi ’s. We wish to find a set of M orthonormal vectors ei for which the quantity

IJSER © 2012

http :// www.ijser.org

Inte rnatio nal Jo urnal o f Sc ie ntific & Eng inee ring Re se arc h, Vo lume 3, Issue 3, Marc h-2012 2

ISSN 2229-5518

1 M

eT w 2

(4)

basis set for facial images. The simplest method for determi n- ing which face class provides the best description of an input facial image is to find the face class k that minimizes the Eu c-

i M n1 i n

lidean distance

is maximized with the orthonormality constraint

T

l k lk

(5)

K

K

(10)

Where, K

is a vector describing the kth face class. If K is

It has been shown that the ei ’s and

i ’s are given by the

less than some predefined threshold µ², a face is classified as

egenvectors and eigen values of the covariance matrix

C WW T

Where, W is a matrix composed of the column vectors

(6)

Wi

belonging to the class k.

2.2 Locality Preserving Projection s

Linear dimensionality reduction algorithm, called Lo- cality Preserving Projections (LPP) [4]. It builds a graph incor-

placed side by side. The size of C is N * N which could be enormous. It is not practical to solve for the eigenvectors of C directly. A common theorem in linear algebra states that the

porating neighborhood information of the dataset [5], [6]. Us-

ing the notion of the Laplacian of th e graph, we then compute a transformation matrix, which maps the data points to a su b-

vectors

ei and scalars

i can be obtained by solving for the

space. This linear transformation optimally preserves local

eigenvectors and eigen values of the M * M matrix W TW . Let

neighborhood information in a certain sense. The representa-

di and

i be the eigenvectors and eigen values of WTW, re-

tion map generated by the algorithm may be viewed as a li-

spectively.

W TWd

i di

(7)

near discrete approximation to a continuous map that natura l-

ly arises from the geometr y of the manifold [4 ], [7]. The locali- ty preserving character of the LPP algorithm makes it relative- ly insensitive to outliers and noise.

By multiplying left to both sides by W

T

Actually, the local features preserving technique seeks to preserve the intrinsic geometry of the data and local

WW (Wdi ) I (Wdi )

(8)

structure. The following are the steps to be carried out to ob- tain the Laplacian [8] transformation matrix WLPP, which we

which means that the first M-1 eigenvectors ei and eigen

values¸ i of WWT are given by Wdi and µi, respectively. Wdi

needs to be normalized in order to be equal to ei. Since we

only sum up a finite number of image vectors, M, the rank of

the covariance matrix cannot exceed M - 1 (The -1 come from

the subtraction of the mean vector m).

The eigenvectors corresponding to nonzero eigen values of the

covariance matrix produce an orthonormal basis for the sub-

space within which most image data can be represented with a small amount of error. The eigenvectors are sorted from high to low according to their corresponding eigen values. The ei- genvector associated with the largest eigenvalue is one that reflects the greatest variance in the image. That is, the smallest eigenvalue is associated with the eigenvector that finds the least variance. They decrease in exponential fashion, meaning

use to preserve the local features.

Constructing the nearest-neighbor graph: Let G denote a graph with k nodes. The ith node corresponds to the face image xi. We put an edge between nodes i and j if xi and xj are

―close,‖ i.e., xj is among k nearest neighbors of xi, or xi is among k nearest neighbors of xj. The constructed nearest

neighbor graph is an approximation of the local manifold structure, which will be used by the distance preserving spec- tral method to add the local manifold structure information to the feature set.

Choosing the weights: The weight matrix S of graph G mod- els the face manifold structure by preserving local structure. If node i and j are connected, put

2

that the roughly 90% of the total variance is contained in the

first 5% to 10% of the dimensions.

A facial image can be projected onto M’(<<M) dimensions by

Sij e

xi x j

t

(11)

computing

v v .........v T

(9)

Eigen map: The transformation matrix WLPP that minimizes

the objective function is given by the minimum Eigen value solution to the generalized Eigen value problem. The detailed

1 2 MI

Where, vi eI wI , vi is the ith coordinate of the facial image in the new space, which came to be the principal component. The

study about LPP and Laplace Beltrami operator is found in [9 ],

[10]. The Eigen vectors and Eigen values for the generalized eigenvector problem are computed.

vectors ei are also images, so called, eigen images, or eigenfa c- es in our case, which was first named by [3]. They can be

XLX TW

XDX TW

(12)

viewed as images and indeed look like faces.

So, it describes the contribution of each eigenface in

representing the facial image by treating the eigenfaces as a

Where, D is a diagonal matrix whose entries are column or

row sums of S, Dii = ΣjSji, L = D - S is the Laplacian matrix. The

ith row of matrix X is xj. Let WLPP = w0,w1,...,wk-1 be the solutions

IJSER © 2012

http :// www.ijser.org

Inte rnatio nal Jo urnal o f Sc ie ntific & Eng inee ring Re se arc h, Vo lume 3, Issue 3, Marc h-2012 3

ISSN 2229-5518

of the above equation, ordered according to their Eigen values,

0 ≤ λ0 ≤ λ1 ≤ … ≤ λk-1. These Eigen values are equal to or greater than zero because the matrices XLXT and XDXT are both sym- metric and positive semi-definite. Note that the two matrices XLXT and XDXT are both symmetric and positive semi-

definite since the Laplacian matrix L and the diagonal matrix

D are both symmetric and positive semi-definite.

By considering the transformation space WPCA and WLPP, the embedding is done as follows:

Each of the above two dataset contains 400 images including

40 different subjects of 10 poses or variations. The third one is

the combination of ORL + UMIST database, which contain 800 images including 80 different subjects of 10 poses. The size of each used in the proposed method is 50 x 50 pixels, with 256 gray levels per pixel. Finally, each image is represented by a

2500 dimensional vector in image space.

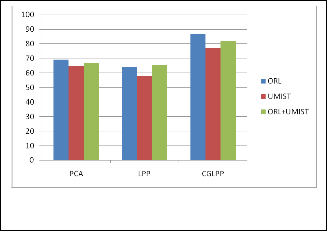

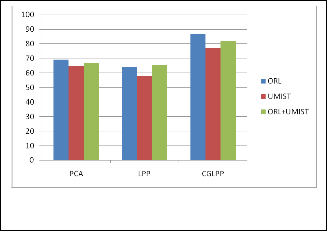

TABLE 1

OVERALL COMPARISON BET WEEN EXISTING AND PRO-

x y

W T x,

POSED MET HODS

W WLDA WLPP ,

WLPP [w0 , w1 ,...,wk 1 ]

(13)

where y is a k-dimensional vector, WPCA, WLPP and W are the transformation matrices of PCA, LPP and CGLPP algorithms respectively.

3 FORM ATION OF COM BINED GLOBAL AND LOCAL

PRES ERV ING PROJ ECTIONS (CGLPP)

Earlier works based on PCA suffer from not preserving the local manifold of the face structure whereas the research works on LPP [8], [9], [11] lacks to preserve global features of face images. Where as our proposed work uses the combina- tion of PCA and LPP and the distance preserving spectral me- thod LPP, that captures the most discriminative features which plays a major role in face recognition. Also, those works that uses alone PCA captures the variation in the samples without considering the variance among the subjects. Hence, in our proposed work, for the first time up to our knowledge, we employ the combination of global feature extraction tec h- nique PCA and local feature extraction technique LPP to achieve a high quality feature set called Combined Global and Local Preserving Projections (CGLPP) that captures the dis- criminate features among the samples considering the differ- ent classes in the subjects which produces the considerable improved results in facial image representation and recogn i- tion. The proposed combined a pproach that combines global feature preservation technique PCA and local feature preser- vation technique LPP to form the high quality feature set CGLPP is described in this section. Actually, the CGLPP me- thod is to project face data to a PCA space for preserving the global information and then projecting to Locality Preserving Projection (LPP) space by using the distance preserving spec- tral methods, to add the local neighborhood manifold infor- mation which may not be interested by PCA alone.

4 EXPERIM ENTAL R ESULT

We use, ORL and UMIST face databases with different pose, illumination, and expression for Training and Testing. The second one is the UMIST database (mainly for different orien- tations, brightness and contrast, hairstyle, glasses, cosmetics).

Fig. 1. Sa mple Images f rom ORL f ace database, used in our experiments.

Fig. 2. Sa mple Images from UMIST f ace database, used in our experiments.

IJSER © 2012

http :// www.ijser.org

Inte rnatio nal Jo urnal o f Sc ie ntific & Eng inee ring Re se arc h, Vo lume 3, Issue 3, Marc h-2012 4

ISSN 2229-5518

And Machine Intelligence, vol. 27, no. 3, (2005), pp. 328–340.

[10] A. Jose, Diaz-Garcia, ―Derivation of the Laplace-Beltrami operator for the zonal polynomials of positive definite hermitian matrix argument― in Applied Mathematics Sciences, Vol.1, no.4, (2007), pp. 191-200.

[11] B. Moghaddam and A. Pentland, ―Probabilistic Visual Learning for Object

Representation,‖ IEEE Trans. Pattern Analysis and Machine Intelligence, vol.

19, pp. 696-710, 1997.

[12] P.J. Phillips, ―Support Vector Machines Applied to Face Recognition,‖ Proc.

Conf. Advances in Neural Information Processing Systems 11, pp. 803-809,

1998.

Fig. 3. Over all Comparison b etween Existing and Proposed

Methods.

5 CONCLUSIONS

The CGLPP algorithm that combines the global and local information preserving features has been analyzed under us- ing ORL and UMIST facial image database. In this work the main focus is on linear face recognition technique. Currently, we experimented, the face recognition by varying various di- mension reduction methods like PCA, LPP. Various existing face manifold structure like eigen faces, Laplacian faces, and proposed method CGLPP has been implemented and success- fully tested and compared. In future, the same can be experi- mented by various classifiers like Support Vector Machine [12], Bayesian Methods .We can also use different database like Yale, Ferret containing large number of dataset. In this work, it is mainly concentrate on still images and we can pro- ceed with video images and can also try out in online recogn i- tion in unsupervised method.

REFERENCES

[1] A.U. Batur and M.H. Hayes, ―Linear Subspace for Illumination Robust Face Recognition,‖ Proc. IEEE Int’l Conf. Computer Vision and Pattern Recogni- tion, Dec. 2001.

[2] M. Belkin and P. Niyogi, ―Laplacian Eigenmaps and Spectral Techniques for

Embedding and Clustering,‖ Proc. Conf. Advances in Neural Information

Processing System 15, 2001.

[3] M.A. Turk and A.P. Pentland, ―Face Recognition Using Eigenfaces‖, IEEE

Conf. onComputerVisionand Pattern Recognition, pp. 586-591, 1991.

[4] M. Belkin and P. Niyogi, ―Using Manifold Structure for Partially Labeled Classification,‖ Proc. Conf. Advances in Neural Information Processing Sys- tem 15, 2002.

[5] M. Brand, ―Charting a Manifold,‖ Proc. Conf. Advances in Neural Informa-

tion Processing Systems, 2002.

[6] F.R.K. Chung, ―Spectral Graph Theory,‖ Proc. Regional Conf. Series in Math.,

no. 92, 1997.

[7] Y. Chang, C. Hu, and M. Turk, ―Manifold of Facial Expression,‖ Proc. IEEE Int’l Workshop Analysis and Modeling of Faces and Gestures, Oct. 2003.

[8] Jiangfeng Chen Bo Li , Baozong Yuan, ―Face recognition using direct LPP

algorithm ― , 26-29 Oct. 2008.

[9] Xiaofei He., Shuicheng Yan, Yuxiao Hu, Partha Niyogi, Hong-Jiang Zhang,

―Face recognition using Laplacian faces― in IEEE Trans. on Pattern Analysis

Dr. Nisar Hundewale received his Ph.D. in Computer Science from Georgia State University, USA. He has worked at National Institutes of Health (NIH), USA, as post-doctoral Fellow. Currently, he is an Assis- tant Professor at Taif University. His research interests are Algorithms, Machine Learning, Bioin- formatics, Distributed Computing, and Net- works.

IJSER © 2012

http :// www.ijser.org