
The research paper published by IJSER journal is about Effective Testing: A customized hand book for testing professionals and students

ISSN 2229-5518

Abdul Rauf E M , Sajna P.V

Index Terms: Show stopper, Exception handling, Resource hogging, Sandwich, Big bang, Risk based testing, Clean room software engineering, Combinational test design


Even though the software development industry spends more than half of its budget on software testing and maintenance related activities, software testing has received little attention in our curricula. This suggests that most software testers are either self taught or acquire the needed skills on the job, perhaps through the informal and formal mechanisms commonly used in the industry. This lack of attention to acquiring testing skills results in poor utilization of test resources and thus in lower test efficiency for the organisation. A review of the extant literature on the software testing lifecycle (STLC) identifies various software testing activities and the ways in which these activities can be carried out in conjunction with the software development process. This handbook tries to give basic knowledge of the various skills that software testers need in order to perform activities effectively in a given phase of the STLC.

Abdul Rauf EM is a research scholar at Christ University, Bangalore, and also works as a test lead in IBM India Software Labs, Bangalore. He received his bachelor's degree in Computer Engineering from the University of Cochin, Kerala, India (2000) and his master's degree in Telecommunication and Software Engineering from BITS Pilani (2004). He has 11+ years of software industry experience, with proven expertise in different skills and involvement in various life cycles of project development and testing.

Sajna P.V has 3+ years of testing experience in the avionics field. She received her bachelor's and master's degrees in computer science from Calicut University, Kerala, India.

IJSER -2012

Software has been tested for as long as software has been written (Marciniak 1994). Software testing has therefore become a natural part of the software development cycle, although its purpose and execution have not been the same all the time. Early thinking held that software could be tested exhaustively, i.e. that it should be possible to test all execution paths (Marciniak 1994). However, as software systems grew increasingly complex, people realized that this would not be possible for larger domains. Therefore, the ideal goal of executing tests that could succeed only when the program contained no defects became more or less unreachable (Marciniak 1994). In the 1980s, Boris Beizer extended the former proactive definition of testing to also include preventive actions. He claimed that test design is one of the most effective ways to prevent bugs from occurring (Boris Beizer 2002). These thoughts were carried further into the 1990s in the form of more emphasis on early test design (Marciniak 1994). Nevertheless, Marciniak (1994) states that the most significant development in testing during this period was increased tool support, and test tools have now become an important part of most software testing efforts. As the systems to be developed become more complex, the way testing is performed also needs to develop in order to meet new demands. In particular, automated tools that can minimize project schedule and effort without losing quality are expected to become a more central part of testing.

Marciniak (1994) defines testing as 'a means of measuring or assessing the software to determine its quality'. Here, quality is considered the key aspect of testing, and Marciniak expounds the definition by stating that testing assesses the behaviour of the system, and how well it performs, in its final environment. Without testing, there is no way of knowing whether the system will work before live use. Although most testing efforts involve executing the code, Marciniak claims that testing also includes static analysis such as code checking tools and reviews. According to Marciniak, the purpose of testing is two-fold: to give confidence that the system is working, but at the same time to try to break it. This leads to a testing paradox, since you cannot have confidence that something is working when it has just been proved otherwise. If the only purpose of testing were to give confidence that the system is working, the result, according to Marciniak, would be that testers under time pressure would choose only the test cases that they already know work. Therefore, it is better if the main purpose of testing is to try to break the software, so that fewer defects are left in the delivered system. According to Marciniak, a mixture of defect-revealing and correct-operation tests is used in practice, e.g. defect-revealing tests are run first and, when all the defects are corrected, the tests are executed again until no defects are found.

Software testing is the process of verifying, validating and finding defects in a software application or program. In verification we ensure that the construction steps are done correctly (are we building the product right), whereas in validation we check that the deliverable (code) is correct (are we building the right product). In software testing, a defect is the variance between the expected and the actual result. When a defect is found, its ultimate source may be traced to a fault introduced in the specification, design or development phase. Following are the different levels of testing performed in the STLC:

- Unit test
- Integration test
- System test
- Acceptance test
- Regression testing

Defects can be categorized into different groups based on their severity and priority. The list below shows the common defect categories used in the software industry:

- Show stopper - Not possible to continue testing because of the severity of the defect
- Critical - Testing can proceed, but the application cannot be released until the defect is fixed
- Major - Testing can continue, but the defect may have a serious impact on business requirements if released to production
- Medium - Testing can continue, and the defect will cause only a minimal departure from the business requirements when in production
- Minor - Testing can continue, and the defect won't affect the release
- Cosmetic - Minor cosmetic issues like colors, fonts and pitch size that do not affect testing or the production release

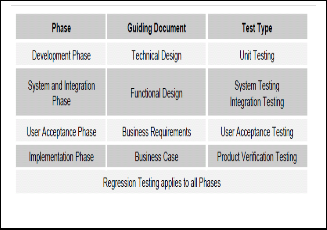

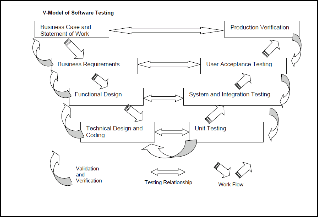

Figure 1 shows the V-model of software testing. The V-model incorporates testing into the entire SDLC, highlights the existence of different levels of testing and depicts the way each relates to a different development phase. Figure 2 shows five different testing phases, each with a certain type of test associated with it. Each phase has entry criteria that must be met before testing starts and specific exit criteria that must be met before the test is certified. Entry and exit criteria are defined by the test owners listed in the test plan.

Fig. 2

A. Unit testing

Unit testing tests the functionality of basic software units. A unit is the smallest piece of software that does something meaningful; it may be a small function, a statement or a library. Unit testing is also called module testing, in which the developer tests the code he/she has produced. The unit tester mainly checks whether the code is implemented as per the low level design document (LLD or functional requirements) and examines the code structure. Following are some of the faults uncovered during unit testing:

- Unit implementation issues - Checking that the unit has implemented the algorithm correctly
- Input data validation errors - Checking that unit inputs are validated properly before they are used
- Exception handling - Checking whether the unit handles all the environment related errors/exceptions
- Dynamic resource related errors - Verifying whether the dynamic resources (memory, handles etc.) are allocated and deallocated
- UI formatting errors - Verifying that the user interface is consistent and correct (tabs, spelling, colors ...)
- Basic performance issues - Each unit is critical to overall system performance, so checking whether the unit as implemented is fast enough


Unit test cases are designed using the functional specification or LLD of the units. Any technique, such as white box, black box or grey box, can be applied to design unit test cases. The structure of the code can also be used as another input for improving the quality of the unit test cases. During test case design, some test cases may turn out to be common to many units; such test cases can be treated as a standard checklist and used as reusable test cases. If the unit is not a user interface (UI), it is necessary to write a test driver (which drives the unit under test with inputs and stores the outcome of the test) and test stubs (dummy modules that replace units that are not available) in order to automate the unit testing.
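The driver and stub roles described above can be sketched in Python as follows. This is only an illustration: the unit `compute_invoice_total`, the `TaxServiceStub` class and the 10% rate are hypothetical names and values chosen for the example, not taken from the paper.

```python
import unittest


def compute_invoice_total(amount, tax_service):
    """Hypothetical unit under test: adds tax to a non-negative amount."""
    if amount < 0:
        raise ValueError("amount must be non-negative")
    return round(amount * (1 + tax_service.rate_for("default")), 2)


class TaxServiceStub:
    """Test stub: stands in for a dependency that is not yet available."""

    def rate_for(self, category):
        return 0.10  # fixed, predictable answer (assumed value)


class InvoiceTotalTest(unittest.TestCase):
    """Test driver: feeds inputs to the unit and checks the outcomes."""

    def test_algorithm(self):
        self.assertEqual(compute_invoice_total(100.0, TaxServiceStub()), 110.0)

    def test_input_validation(self):
        with self.assertRaises(ValueError):
            compute_invoice_total(-1.0, TaxServiceStub())
```

Saved as a module, the tests can be run with `python -m unittest`. The two test methods correspond to the "unit implementation" and "input data validation" fault types listed for unit testing.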

B. Integration testing

Integration testing starts as soon as a few modules are ready and the developers integrate their code to test the interfaces implemented by their code. The high level design document (HLD) is the main input for designing the test cases for integration testing. Following are some of the faults uncovered during integration testing:

- Interface integrity issues - Testing whether the unit complies with the agreed upon interface specification
- Data sharing issues - Verifying that common data is handled properly (synchronization issues etc.)
- Exception handling - Checking that all the environment related errors/exceptions are handled
- Resource hogging issues - Checking whether any unit consumes excessive resources, causing the integrated units to fail
- Build issues - Cases such as multiple units depending on a common unit, each built against a particular version of it
- Error handling and bubbling of errors - Checking that the error returned by a unit is handled appropriately by the higher unit
- Functionality errors - Checking that the functionality formed by the integration of the unit(s) works

Integration testing proceeds according to the integration strategy (the order of integration of modules) that the project follows. Since testing is an act of finding issues that pose severe risk as early as possible, it is preferable to test first those interfaces that pose the highest risk. Four main types of integration strategy are employed in the software industry:

- Top-down - Integration starts from the highest chain of control (top-most module); this kind of integration is used where upper level interfaces are important
- Bottom-up - Integration starts from the lowest chain of control (bottom-most module); this kind of integration is used where lower level interfaces are important
- Sandwich - This approach is used when neither all of the top-level nor all of the bottom-level interfaces are important; it is a mixture of the top-down and bottom-up approaches
- Big bang - All modules are integrated at once; this is a crude strategy, but it does find issues. The main problem with this approach is the difficulty of debugging

The approach is decided based on the criticality of the interfaces: the most critical interfaces should be tested first and the others later. The criticality of an interface can be decided once the architecture of the project is ready. Normally most projects follow the sandwich approach.
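As a rough illustration of how bottom-up and top-down integration orders relate to the module structure, the sketch below derives both from a dependency graph using Python's standard `graphlib` module (Python 3.9 or later). The module names and dependencies are hypothetical, and a real project would also weigh interface criticality, which this sketch ignores.

```python
from graphlib import TopologicalSorter

# Hypothetical module dependencies: each key calls the modules in its set.
DEPENDS_ON = {
    "ui": {"orders", "reports"},
    "orders": {"database"},
    "reports": {"database"},
    "database": set(),
}


def bottom_up_order(depends_on):
    """Lowest-level modules first; every module follows its dependencies."""
    return list(TopologicalSorter(depends_on).static_order())


def top_down_order(depends_on):
    """Top-most module first; lower modules are stubbed until integrated."""
    return list(reversed(bottom_up_order(depends_on)))


print(bottom_up_order(DEPENDS_ON))
print(top_down_order(DEPENDS_ON))
```

A sandwich strategy would combine the two, working from both ends of these lists toward the middle.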

C. System testing

A system is not just the code that we developed; it is a collection of the developed code, supporting libraries, databases (if any), Web/App servers (if any), the operating system and hardware. In the system testing phase we test the system as a whole. To get the maximum benefit from the system test, it is preferable to perform system testing in an environment that is similar to the target environment. Following are the types of faults discovered in system testing:

- Functional errors - Verifying that the system has implemented the functionality correctly
- Performance issues - Making sure that the system is fast enough
- Load-handling capability - Ensuring that the system handles real life situations with the stated resources
- Usability issues - Verifying that the system is friendly and easy to use
- Volume handling - Verifying that the system is capable of handling large volumes of data
- Installation errors - Making sure that the system can be installed correctly using the installation docs
- Documentation errors - Checking that the documentation written for the system is correct
- Language handling issues - Verifying that the system has implemented multiple locales correctly. Localization and internationalization testing is performed at this stage

D. Acceptance testing

Acceptance testing is the final testing done by the test team and the customer together before the system is put into operation. Acceptance testing starts after the system test is completed. The purpose of the acceptance test is to give confidence that the system is working, rather than to try to find defects. Acceptance testing is mostly performed in contractual development to verify that the system satisfies the agreed requirements. Acceptance testing is sometimes integrated into the system testing phase.

E. Regression testing

Regression testing is done to build confidence in a system that has undergone changes such as code modifications, defect fixes or the addition of new modules. In this test the user reruns the existing test suites/test cases and makes sure that the recent changes have not impacted the functionality of the system. Regression test selection is an important task in this phase and needs to be done carefully to avoid unnecessary execution. Regression testing is a repeated task and one of the most expensive activities in the STLC, so it is always good to look for automation in order to save manual effort. According to Harrold (2000), some studies indicate that regression testing can account for as much as one-third of the total cost of a software system.
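One simple way to approach regression test selection is to rerun only the tests that exercise a changed module. The sketch below assumes that a mapping from tests to the modules they cover is available; the mapping, test names and module names are hypothetical, and practical selection techniques are usually more sophisticated.

```python
# Hypothetical mapping from each test case to the modules it exercises.
TEST_COVERAGE = {
    "test_login": {"auth", "session"},
    "test_checkout": {"cart", "payment"},
    "test_report": {"reports", "database"},
    "test_profile": {"auth", "database"},
}


def select_regression_tests(changed_modules, coverage=TEST_COVERAGE):
    """Return the tests that touch at least one changed module."""
    changed = set(changed_modules)
    return sorted(name for name, modules in coverage.items() if modules & changed)


print(select_regression_tests(["auth"]))  # ['test_login', 'test_profile']
```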

F. Sanity test

Sanity testing will be performed whenever cursory testing is sufficient to prove that the system is functioning according to specifications. A sanity test is a narrow regression test that focuses on one or a few areas of functionality. Sanity testing is usually narrow and deep. It will normally include a set of core tests of basic GUI functionality to demonstrate connectivity to the database, application servers, printers, etc.

G. Alpha testing

Testing of an application when development is nearing completion; minor design changes may still be made as a result of such testing. Typically done by end-users or others, not by programmers or testers.

H. Beta testing

Testing when development and testing are essentially completed and final bugs and problems need to be found before final release. Typically done by end-users or others, not by programmers or testers.

Effective test cases are the heart of software testing. To design test cases, testers use various test techniques and also draw on resources such as domain knowledge and the history of past issues. Following are some of the test techniques used in industry.

A. Positive and Negative testing

Positive testing - Check that the software performs its intended function correctly, executing programs to check that they meet the requirements.

Negative testing - Execute programs with the intent of finding defects in the system. Negative testing involves testing special circumstances that are outside the strict scope of the requirements specification, and will therefore give higher coverage.
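The contrast between the two can be shown with a small Python sketch. The function `parse_age` and its valid range of 0 to 150 are hypothetical, chosen only to give the tests something to exercise.

```python
def parse_age(text):
    """Hypothetical function under test: parses an age between 0 and 150."""
    value = int(text)  # raises ValueError for non-numeric text
    if not 0 <= value <= 150:
        raise ValueError("age out of range")
    return value


def raises_value_error(text):
    """Helper: True if parse_age rejects the input with ValueError."""
    try:
        parse_age(text)
    except ValueError:
        return True
    return False


# Positive test: valid input produces the intended result.
assert parse_age("42") == 42

# Negative tests: invalid inputs must be rejected, not silently accepted.
for bad in ["-1", "151", "abc", ""]:
    assert raises_value_error(bad), bad
```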

B. Risk based testing

Risk is the possibility of a negative or undesirable outcome; a quality risk is a possible way in which something about your organization's products or services could negatively affect stakeholder satisfaction. Through risk based testing we can reduce the level of quality risk. This type of testing has a number of advantages:

- Finding defects earlier in the cycle, and thus avoiding the risk of schedule delay
- Finding high-severity and high-priority bugs rather than unimportant ones
- Providing the option of reducing the test execution period in the event of a schedule crunch without accepting unduly high risks
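A common way to put risk based testing into practice is to score each quality risk and test the highest-scoring areas first. The sketch below assumes a simple exposure score of likelihood multiplied by impact on a 1 to 5 scale; both the scoring scheme and the sample risks are assumptions made for illustration, not something the paper prescribes.

```python
# Hypothetical quality risks, each scored 1 (low) to 5 (high).
RISKS = [
    {"area": "payment", "likelihood": 4, "impact": 5},
    {"area": "search", "likelihood": 3, "impact": 2},
    {"area": "help pages", "likelihood": 1, "impact": 1},
    {"area": "login", "likelihood": 2, "impact": 5},
]


def prioritize(risks):
    """Order test areas by risk exposure (likelihood x impact), highest first."""
    return sorted(risks, key=lambda r: r["likelihood"] * r["impact"], reverse=True)


def areas_within_budget(risks, max_areas):
    """Under a schedule crunch, keep only the highest-risk areas."""
    return [r["area"] for r in prioritize(risks)[:max_areas]]


print(areas_within_budget(RISKS, 2))  # ['payment', 'login']
```

Cutting the list from the bottom is what allows the execution period to shrink without dropping the tests that guard against the most serious risks.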

C. Defect testing

Defect testing, or fault based testing, is done to ensure that certain types of defects are not present in the code. It is a negative testing approach for discovering defects in the system. Normally the testing team identifies and classifies the defects that occurred in the previous release of the product. Based on this classification, the test team decides where to add more testing effort and how deeply testing needs to be conducted in those areas. The test team uses a defect tracking tool or defect database as an input for this activity. The root cause analysis, whether already available in the defect record or prepared for this purpose, plays a major role in defect classification.

D. White box testing

White box testing, also called glass box testing or structural testing, uses the code structure to come up with test cases. To do effective white box testing, the tester needs a good understanding of the code. There is a common misunderstanding that white box testing can be applied only at the unit level. It can definitely be applied at the unit level, but it can also be applied at higher levels such as the integration level and the system level. However, it becomes difficult at higher levels because the size of the code increases rapidly.

E. Black box testing

In black box testing, or functional testing, the tester should have a clear understanding of the specification of the product/project under test. The specification covers both data (input and output specification) and business logic (the processing logic involved). The requirement specification is one of the major input documents for black box testing. Black box testing can be applied at any level of testing. Some black box techniques detect functionality issues, while others help in detecting non-functional issues.

F. Grey box testing

Grey box testing is a combination of white box and black box testing. This testing identifies defects related to bad design or bad implementation of the product. A test engineer who executes grey box testing has some knowledge of the system and designs test cases based on that knowledge. The tester applies a limited number of test cases to the internal workings of the software under test. The remaining part of the execution is based on the data specification and business logic. The idea behind grey box testing is that someone who knows something about how the product works on the inside can test it better.

G. Statistical testing

The purpose of statistical testing is to test the software according to its operational behaviour, i.e. by running test cases with the same distribution as the users' intended use of the software. By developing operational profiles that describe the probability of different kinds of user input over time, it is possible to select a suitable distribution of test cases. Developing operational profiles is a time consuming task, but a properly developed profile will help to produce a system with high reliability. In short, a statistical test helps to make a quantitative decision about a process.
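Selecting test cases according to an operational profile can be sketched with weighted random sampling. The profile below, with its three operations and their probabilities, is hypothetical; a real profile would be derived from usage data.

```python
import random
from collections import Counter

# Hypothetical operational profile: probability of each user operation.
PROFILE = {"search": 0.6, "view_item": 0.3, "checkout": 0.1}


def draw_test_operations(profile, count, seed=0):
    """Sample operations with the same distribution as expected usage."""
    rng = random.Random(seed)  # seeded so the selection is repeatable
    operations = list(profile)
    weights = [profile[op] for op in operations]
    return rng.choices(operations, weights=weights, k=count)


sample = draw_test_operations(PROFILE, 1000)
print(Counter(sample))
```

With enough samples, the share of each operation in the generated test sequence approaches its probability in the profile, so the most frequently used operations receive the most testing.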

H. Clean room software engineering

Clean room software engineering is more of a development process than a testing technique. The idea of clean room is to avoid high cost defects by writing source code accurately during the early stages of the development process and by employing formal methods to verify the correctness of the code before the testing phase. Even though the clean room process is time consuming, it helps to reduce the time to market, because the precision of the development eliminates rework and reduces testing time. Clean room is considered a radical approach to quality assurance, but it has become accepted as a useful alternative for some systems that have high quality requirements.

I. Static testing

Testing is normally considered a dynamic process, in which the tester gives various inputs to the software under test and verifies the results. Static testing is a different kind of testing, used for evaluating the quality of the software without executing the code. Static testing falls within the verification process, which ensures that the construction steps are done correctly, without executing the code. One commonly used technique for static testing is the static analysis functionality that the compilers for most modern programming languages provide. Reviews and inspections are the most commonly used static testing methods in almost all software development organizations. Static testing is applicable to all stages but is particularly appropriate in unit testing, since it does not require interaction with other units.
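To make the idea of evaluating code without executing it concrete, the sketch below uses Python's standard `ast` module to parse a piece of source text and flag bare `except:` clauses. The checked rule and the sample source are chosen only for illustration; production static analysers apply many such rules.

```python
import ast

SOURCE = '''
def load(path):
    try:
        return open(path).read()
    except:
        return None
'''


def find_bare_excepts(source):
    """Return line numbers of `except:` clauses that catch everything."""
    tree = ast.parse(source)  # parses only; the code is never executed
    return [
        node.lineno
        for node in ast.walk(tree)
        if isinstance(node, ast.ExceptHandler) and node.type is None
    ]


print(find_bare_excepts(SOURCE))  # [5]
```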

J. Review and inspection

Each author has their own definition of the terms review and inspection. IEEE Std. 610.12-1990 defines the terms as follows.

Review: 'A process or meeting during which a work product, or set of work products, is presented to project personnel, managers, users, customers, or other interested parties for comment or approval. Types include code review, design review, formal qualification review, requirements review, and test readiness review' (IEEE 1990). The IEEE standard says that the purpose of a technical review is to evaluate a software product by a team of qualified personnel to determine its suitability for its intended use and to identify discrepancies from specifications and standards. Following are some of the inputs to the technical review:

- A statement of objectives for the technical review (mandatory)
- The software product being examined (mandatory)
- Software project management plan (mandatory)
- Current anomalies or issues list for the software product (mandatory)
- Documented review procedures (mandatory)
- Relevant review reports (should)
- Any regulations, standards, guidelines, plans, and procedures against which the software product is to be examined (should)
- Anomaly categories (See IEEE Std 1044-1993) (should)

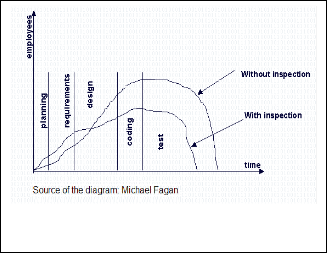

Inspection: 'A static analysis technique that relies on visual examination of development products to detect errors, violations of development standards, and other problems. Types include code inspection; design inspection' (IEEE 1990). Inspection goes by many names: some call it software inspection, which can cover design and documentation, while others call it code inspection, which relates more to the source code written by the developer. Fagan inspection is another name, taken from the person who invented this QA and testing method. Code inspection is a time consuming task, but statistics indicate that it may uncover up to 90% of the contained errors if applied in a systematic way. The graph below shows the time-employee relationship in a software development process.

Fig. 4

The IEEE Standard for Software Reviews (IEEE 1028-1997) covers manual static testing methods such as inspections, reviews and walkthroughs.


K. Walk-throughs

Walk-throughs are techniques used in the software development cycle for improving the quality of the product. They help to detect anomalies, evaluate conformance to standards and specifications, and so on. A walk-through is considered a technique for collecting ideas and inputs from team members during the design stage of the software product, and also for exchanging techniques and training the participants, thus bringing team mates to the same level of programming style and knowledge of the product. Walk-through leader, recorder, author of the product under development and team member are some of the roles defined in the walk-through method.

A test case is a set of data and test programs (scripts) and their expected results. A test case validates one or more system requirements and generates a pass or fail. The Institute of Electrical and Electronics Engineers defines a test case as "A set of test inputs, execution conditions, and expected results developed for a particular objective, such as to exercise a particular program path or to verify compliance with a specific requirement." Selecting adequate test cases is an important task for testers; otherwise the result may be too much testing, too little testing or testing the wrong things. Following are the characteristics of a good test:

- It has a reasonable probability of catching an error
- It is not redundant
- It's the best of its breed
- It is neither too simple nor too complex

During test case design, the designer should have the intention of finding errors, so that he can start searching for test case ideas and try working backwards from an idea of how the program might fail. Following are some of the techniques used in industry for designing effective test cases.

1) Equivalence classes

It is essential to understand equivalence classes and their boundaries. Classical boundary tests are critical for checking the program's response to input and output data. You can consider two test cases equivalent if you expect the same result from both. A group of tests forms an equivalence class if you believe that:

- They all test the same thing
- If one test catches a bug, the others probably will too
- If one test doesn't catch a bug, the others probably won't either

Tests are often lumped into the same equivalence class when:

- They involve the same input variables
- They result in similar operations in the program
- They affect the same output variables
- None force the program to do error handling, or all of them do

Different people will analyse programs in different ways and come up with different lists of equivalence classes. Identifying the classes helps you to select test cases and avoid wasting time repeating what is virtually the same test. You should run one or a few of the test cases that belong to an equivalence class and leave the rest aside. Below are some recommendations for finding equivalence classes:

- Don't forget equivalence classes for invalid inputs
- Organize your classification into a table or an outline
- Look for ranges of numbers
- Look for membership in a group
- Analyse responses to lists and menus
- Look for variables that must be equal
- Create time-determined equivalence classes
- Look for variable groups that must calculate to a certain value or range
- Look for equivalent output events
- Look for equivalent operating environments
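As a small illustration of picking one representative per equivalence class, including the invalid classes, consider a hypothetical input that accepts whole numbers from 1 to 100. The function, the range and the chosen representatives are all assumptions made for the example.

```python
def accepts_quantity(text):
    """Hypothetical unit under test: accepts whole numbers from 1 to 100."""
    try:
        value = int(text)
    except ValueError:
        return False
    return 1 <= value <= 100


# One representative per equivalence class, valid and invalid alike.
EQUIVALENCE_CLASSES = {
    "valid: 1..100": ("50", True),
    "invalid: below range": ("-7", False),
    "invalid: above range": ("500", False),
    "invalid: not a number": ("ten", False),
}

for name, (representative, expected) in EQUIVALENCE_CLASSES.items():
    assert accepts_quantity(representative) == expected, name
```

Four tests stand in for the whole input space here; adding "51" or "600" would repeat what "50" and "500" already check.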

2) Boundaries of equivalence classes

Normally we select one or two test cases from each equivalence class. The best ones are at the class boundaries: the boundary values are the biggest, smallest, soonest, shortest, loudest, fastest, ugliest members of the class, i.e. the most extreme values. Programs that fail with non-boundary values usually fail at the boundaries too. While analysing program boundaries, it is important to consider all outputs. It is good to remember that input boundary values might not generate output boundary values.
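For an integer range, the boundary values can be generated mechanically. The sketch below assumes a hypothetical unit that accepts integers from 1 to 100 and produces the values on and immediately around each boundary.

```python
def boundary_values(low, high):
    """Values on and immediately around the boundaries of an integer range."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def accepts_quantity(value):
    """Hypothetical unit under test: accepts integers from 1 to 100."""
    return 1 <= value <= 100


for value in boundary_values(1, 100):
    print(value, accepts_quantity(value))
```

An off-by-one mistake in the unit, such as writing `1 < value`, would be caught by the value 1 but not by a mid-range value like 50.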

3) Black box test techniques

These techniques can be categorized into three broad types:

- Those useful for designing test scenarios (high level test design techniques)
- Those useful for generating test values for each input (low level test design techniques)
- Those useful for combining test values to generate test cases

High level test design techniques

Some of the commonly used high level test design techniques are

Flowchart – Represents flow based behaviour (each scenario is a unique flow in the flow chart)

Decision table – Represents rule based behaviour (each scenario is a unique rule in the decision table)

State machine – Represents state based behaviour (each scenario is a unique path in the state transition diagram)
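The decision table idea can be sketched as follows. The login rule, the condition names and the actions are hypothetical and serve only to show how each rule in the table becomes one test scenario.

```python
from itertools import product

# Hypothetical conditions of a login feature (assumed for illustration).
CONDITIONS = ("valid_user", "valid_password", "account_locked")

def expected_action(valid_user, valid_password, account_locked):
    """Hypothetical business rule used only to fill in the action column."""
    if not valid_user:
        return "reject"
    if account_locked:
        return "show_locked_message"
    return "grant_access" if valid_password else "reject"

def decision_table():
    """One row (rule) per combination of condition values; each row is one scenario."""
    rows = []
    for values in product((True, False), repeat=len(CONDITIONS)):
        rule = dict(zip(CONDITIONS, values))
        rule["action"] = expected_action(*values)
        rows.append(rule)
    return rows

if __name__ == "__main__":
    for rule in decision_table():
        print(rule)
```

With three binary conditions the table has eight rules. In practice, rules that lead to the same action regardless of some condition are often merged to reduce the number of scenarios.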

Low level test design techniques

Following are some of the low level test design techniques

Boundary value analysis – Generate test values on and around the boundary

Equivalence partitioning – Ensures that all representative values have been considered

Special value – Generate interesting test values based on experience/guess

Error based values – Generate test values based on past history of issues

Combinational test design techniques

These techniques combine test values to generate test cases. Some of the combinational test design techniques are mentioned below

Exhaustive testing – Combine all values exhaustively (all combinations of all test inputs are considered)

All-pairs / Orthogonal – Combine to form minimal yet complete combinations. This ensures that all distinct pairs of inputs have been considered

Single-fault – Combine such that only a single input in a test case is faulty (generate negative test cases where only one input is incorrect)
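The three combinational techniques can be illustrated with a small sketch. The input names and values below are hypothetical, and the four-case pairwise set is picked by hand for this tiny example rather than produced by an all-pairs tool.

```python
from itertools import product

# Hypothetical inputs: valid values per input, plus one faulty value per input.
VALID = {
    "browser": ["firefox", "chrome"],
    "os": ["linux", "windows"],
    "language": ["en", "de"],
}
INVALID = {"browser": "unknown", "os": "", "language": "xx"}

def exhaustive(values):
    """All combinations of all values of all inputs."""
    names = list(values)
    return [dict(zip(names, combo)) for combo in product(*(values[n] for n in names))]

def single_fault(valid, invalid):
    """Negative cases: start from an all-valid baseline and corrupt exactly one input."""
    baseline = {name: options[0] for name, options in valid.items()}
    cases = []
    for name, bad in invalid.items():
        case = dict(baseline)
        case[name] = bad
        cases.append(case)
    return cases

def covers_all_pairs(cases, values):
    """True if every pair of values from two different inputs appears in some case."""
    names = list(values)
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            for va, vb in product(values[a], values[b]):
                if not any(c[a] == va and c[b] == vb for c in cases):
                    return False
    return True

# Hand-picked pairwise set: 4 cases instead of the 8 exhaustive ones.
PAIRWISE = [
    {"browser": "firefox", "os": "linux", "language": "en"},
    {"browser": "firefox", "os": "windows", "language": "de"},
    {"browser": "chrome", "os": "linux", "language": "de"},
    {"browser": "chrome", "os": "windows", "language": "en"},
]
```

Here `exhaustive(VALID)` yields eight cases, `PAIRWISE` covers every pair of values with four, and `single_fault(VALID, INVALID)` yields three negative cases. The saving from pairwise selection grows quickly as the number of inputs and values increases.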

4) White box test techniques

This technique uses the structure of the code for designing test cases. Following are some of the aspects that constitute the code structure

Flow of control – Is the code sequential / recursive / concurrent

Flow of data – Where the data is initialized and where it is used

Resource usage – What dynamic resources are allocated, used and released

5) Coverage based testing

Statement coverage is one of the oldest structural test techniques. It aims to execute every statement during a set of tests and gives an idea of the percentage of total statements executed. Since programs that contain, for example, loops can have an almost infinite number of different paths, complete path coverage is impractical. Normally, a more realistic goal is to execute every statement and branch at least once. This technique can be varied in several ways and is usually tightly knit to coverage testing.

Branch coverage – Measures the number of conditions / branches executed as a percentage of total branches

Multiple condition coverage – Measures the number of multiple conditions executed as a percentage of total multiple conditions

Statement coverage – Measures the number of statements executed as a percentage of total statements
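The difference between statement coverage and branch coverage can be seen in a small hand-instrumented sketch. The function under test and the `record` helper are hypothetical; a real project would normally rely on a coverage tool instead of manual instrumentation.

```python
ALL_BRANCHES = {"is_member:true", "is_member:false"}
taken = set()  # branch outcomes observed so far

def record(name, outcome):
    """Note which way a decision went, then pass its value through unchanged."""
    taken.add(f"{name}:{'true' if outcome else 'false'}")
    return outcome

def apply_discount(price, is_member):
    """Hypothetical function under test: members pay 10 percent less (integer prices)."""
    if record("is_member", is_member):
        price = price - price // 10
    return price

def branch_coverage():
    """Branch outcomes executed as a percentage of all branch outcomes."""
    return 100.0 * len(taken & ALL_BRANCHES) / len(ALL_BRANCHES)

if __name__ == "__main__":
    apply_discount(200, True)    # executes every statement of apply_discount
    print(branch_coverage())     # yet only the "true" outcome has been taken
    apply_discount(200, False)
    print(branch_coverage())
```

A single test with `is_member=True` executes every statement of `apply_discount`, but only half of the branch outcomes. The second call with `is_member=False` is needed to reach full branch coverage.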

6) Random input testing

Rather than explicitly subdividing the input into a series of equal sub-ranges, it can be better to use a series of randomly selected input values. This ensures that any input value is as likely as any other, so any two equal sub-ranges should be about equally represented in your tests. Whenever you cannot decide what values to use in test cases, choose them randomly. Random inputs do not mean "whatever inputs come to your mind" but values taken from a table of random numbers or a random number generating function. Random testing can be very effective in identifying rarely occurring defects, but it is not commonly used since it easily becomes a labour-intensive process.
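A minimal sketch of random input testing is shown below. The function under test (`clamp`), the value ranges and the seed are assumptions chosen for illustration. The inputs come from a random number generating function, and each result is checked against properties that must always hold.

```python
import random

def clamp(value, low, high):
    """Hypothetical function under test: limit value to the closed range [low, high]."""
    return max(low, min(value, high))

def random_test(trials=1000, seed=2012):
    """Feed clamp() random inputs and collect every case that violates a property."""
    rng = random.Random(seed)  # fixed seed so that a failing run can be repeated
    failures = []
    for _ in range(trials):
        low = rng.randint(-1000, 1000)
        high = rng.randint(low, 1000)
        value = rng.randint(-2000, 2000)
        result = clamp(value, low, high)
        in_range = low <= result <= high
        # A value already inside the range must come back unchanged.
        unchanged_if_valid = result == value if low <= value <= high else True
        if not (in_range and unchanged_if_valid):
            failures.append((value, low, high, result))
    return failures

if __name__ == "__main__":
    print(len(random_test()), "failing cases")
```

Checking general properties instead of exact expected values is one way to reduce the manual effort of judging each random result.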

7) Syntax testing

This is a data-driven test technique where well-defined syntax rules validate the input data. Syntax testing can also be called grammar-based testing since grammars can define the syntax rules. An example of a grammar model is the Backus-Naur Form, which can represent every combination of valid inputs.
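As an illustration, the sketch below generates valid inputs from a small grammar written in a Backus-Naur-like form and feeds them to a validator. The grammar (signed integers) and the regular-expression validator are assumptions made for this example; the validator stands in for whatever input check is actually under test.

```python
import random
import re

# Hypothetical grammar in a BNF-like form:
#   <signed> ::= <number> | "-" <number>
#   <number> ::= <digit> | <digit> <number>
#   <digit>  ::= "0" | "1" | ... | "9"
GRAMMAR = {
    "<signed>": [["<number>"], ["-", "<number>"]],
    "<number>": [["<digit>"], ["<digit>", "<number>"]],
    "<digit>": [[d] for d in "0123456789"],
}

def generate(symbol, rng, depth=0, max_depth=6):
    """Expand a grammar symbol into a string by picking productions at random."""
    if symbol not in GRAMMAR:
        return symbol  # terminal symbol
    options = GRAMMAR[symbol]
    if depth >= max_depth:
        options = options[:1]  # the first production is the shortest one in this grammar
    production = rng.choice(options)
    return "".join(generate(s, rng, depth + 1, max_depth) for s in production)

def is_valid(text):
    """Stand-in for the input validator under test (assumed for this sketch)."""
    return re.fullmatch(r"-?[0-9]+", text) is not None

if __name__ == "__main__":
    rng = random.Random(0)
    samples = [generate("<signed>", rng) for _ in range(5)]
    print(samples, all(is_valid(s) for s in samples))
```

Invalid inputs for negative tests can be derived in the same spirit by deliberately breaking one rule of the grammar, for example a doubled sign or a non-digit character.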

1) Load test

Load testing is used to verify that the software product is able to handle real-life operations with the stated resources. It can be done in controlled lab conditions or in the field. A load test in a lab helps to compare the capabilities of different systems or to measure the actual capability of a single system. The main aim of load testing is to determine the maximum amount of work the system can handle without significant performance degradation.

2) Stress test

This test checks that the worst load the system can handle is well above the real-life extreme load. The stress test process can involve quantitative tests done in a lab, such as measuring the frequency of errors or system crashes. It can also be used for evaluating factors like the availability of the system and its resistance to denial-of-service attacks.

3) Performance test

Check that the key system operations perform within the stated time. Performance testing is very difficult to conduct because the performance requirements are often poorly specified and the test requires a realistic operational environment to get reliable results. Automated tool support is required for a proper performance evaluation of the software.
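The basic check behind a performance test can be sketched as below. The operation (`sort_records`), the number of repeats and the time limit are hypothetical; a real performance test would use a dedicated tool and a realistic operational environment, as noted above.

```python
import time

def measure(operation, repeats=5):
    """Run the operation several times and return each duration in seconds."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        operation()
        durations.append(time.perf_counter() - start)
    return durations

def within_stated_time(operation, limit_seconds, repeats=5):
    """True if even the slowest run finishes within the stated limit."""
    return max(measure(operation, repeats)) <= limit_seconds

def sort_records():
    """Hypothetical key operation: sort 10,000 integers."""
    sorted(range(10_000, 0, -1))

if __name__ == "__main__":
    print(within_stated_time(sort_records, limit_seconds=1.0))
```

Taking the slowest of several runs is a deliberately strict choice for this sketch; requirements are often stated as an average or a percentile instead.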

4) Scalability test

Check that the system is able to handle more load when given more hardware resources. We can consider scalability testing an extension of performance testing. Scalability is a factor that needs to be considered at the beginning of project planning and design. The architect of the product should have a proper picture of the product before planning the scalability of the product under development. To make sure that the product is truly scalable, and to identify major workloads and mitigate bottlenecks, it is very important to test it rigorously and regularly for scalability issues. The results from the performance test can be considered the baseline, and we can compare the scalability test results against them to know whether the application has scaled up or not.

5) Reliability test

This test checks that the system, when used in an extended manner, is free from failures. In systems with strict reliability requirements, the reliability of the system under typical usage should be tested. Several models for testing and predicting reliability exist, but in reality the exact reliability is more or less impossible to predict.

6) Volume test

Check that the system can handle large amounts of data. A volume test concentrates mainly on throughput, rather than on response time as other tests do. Capacity drivers are the key to effective volume testing of applications such as messaging systems and batch systems. A capacity driver is something that directly impacts the total processing capacity. For a messaging system, a capacity driver may well be the size of the messages being processed.

7) Usability test

Check whether the system is easy to operate by its end users. When the system contains a user interface, user-friendliness might be important. However, usability is hard to measure since it is difficult to define and most likely requires end-user interaction when being tested. Nevertheless, it is possible to measure attributes such as learnability and handling ability by monitoring potential users and recording their speed in conducting various operations in the system.

8) Security test

This test ensures that the integrity of the system is not compromised. Security testing is also called penetration testing and is used to test how well the system protects against unauthorized internal or external access, wilful damage, etc.; it may require sophisticated testing techniques. Testers must use a risk-based approach, grounded in both the system's architectural reality and the attacker's mindset, to gauge software security adequately. By identifying risks in the system and creating tests driven by those risks, a software security tester can properly focus on areas of code in which an attack is likely to succeed. This approach provides a higher level of software security assurance than is possible with classical black-box testing.

9) Recovery test

A recovery test verifies that the system is able to recover from erroneous conditions gracefully. It also tests how well a system recovers from crashes, hardware failures, or other catastrophic problems.

10) Storage test

Check that the system complies with the stated storage requirements, such as disk and memory.

11) Internationalization test (I18N)

This test verifies the ability of the system to support multiple languages. Internationalization testing is also called I18N testing. I18N testing of a product is targeted at uncovering international functionality issues before the system's global release. Mainly, it checks whether the system is correctly adapted to work under different languages and regional settings, for example the ability to display the correct numbering system (thousands and decimal separators), accented characters, etc. I18N testing is not the same as L10N testing. In I18N testing, product functionality and usability are the focus, whereas L10N testing focuses on linguistic relevance and on verifying that functionality has not changed as a result of localization.
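A small sketch of one such I18N check, the display of thousands and decimal separators, is given below. The regional settings table, the formatting function and the expected strings are assumptions made for illustration; a real product would take its separators from its own locale data, which may differ (for example in the kind of space used as a thousands separator).

```python
# Hypothetical regional settings, assumed for this sketch only.
SEPARATORS = {
    "en_US": {"thousands": ",", "decimal": "."},
    "de_DE": {"thousands": ".", "decimal": ","},
    "fr_FR": {"thousands": " ", "decimal": ","},
}

def format_number(value, region):
    """Format a number with two decimals using the separators of the given region."""
    settings = SEPARATORS[region]
    text = f"{value:,.2f}"  # English-style grouping, e.g. 1,234,567.89
    # Swap both separators in one pass so that they cannot overwrite each other.
    table = str.maketrans({",": settings["thousands"], ".": settings["decimal"]})
    return text.translate(table)

# Expected display per region for the same value (the test oracle of this sketch).
EXPECTED = {
    "en_US": "1,234,567.89",
    "de_DE": "1.234.567,89",
    "fr_FR": "1 234 567,89",
}

def i18n_failures(value=1234567.891):
    """Regions whose formatted output differs from the expected display."""
    return [region for region, want in EXPECTED.items()
            if format_number(value, region) != want]

if __name__ == "__main__":
    print(i18n_failures())
```

The same table-driven pattern can be extended to date, time and currency formats, with one expected value per regional setting.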

12) Localization test (L10N)

Check that the strings and the currency, date and time formats for this language version have been translated correctly. Localization testing is also called L10N testing. Localization is the process of changing the product user interface and modifying some initial settings to make it suitable for another region. Localization testing checks the quality of a product's localization for a particular target culture/locale. It is based on the results of I18N testing, which verifies the functional support for that particular culture/locale. L10N testing can be executed only on the localized version of a product.

13) Configuration test

Check that the system can execute on different hardware and software configurations.

14) Compatibility test

Check that the system is backward compatible with its prior versions.

15) Installation test

Check that the system can be installed correctly by following the installation instructions. The installation test for a release is conducted with the objective of demonstrating production readiness.

16) Documentation test

A documentation test makes sure that the user documentation and online help are in line with the software functionality. Testing of user documentation and help-system documentation is often overlooked because of a lack of time and resources (Watkins 2001). However, Watkins claims that accurate documentation might be vital for successful operation of the system, and reviews are in that case probably the best way to check the accuracy of the documents.

17) Compliance test

Check that the software has implemented the applicable standard correctly

18) Accessibility test

An accessibility test checks whether the product under test is accessibility compliant. With this test we target four types of users, namely people with visual impairments, hearing impairments, motor impairments (inability to use a keyboard or mouse) and cognitive impairments (reading difficulties, memory loss). Normally we plan a separate testing cycle for accessibility testing. Inspectors and web checkers are examples of tools available in the market for accessibility testing.

A test strategy document is a high-level document that describes the overall approach to testing and is normally developed by the project manager. It is normally derived from the Business Requirement Specification document. This static document contains standards for the testing process and won't undergo changes frequently. It acts as an input document for the test plan. A good test strategy will answer the questions below

Where should I focus? On what features? On what type of potential issues?

What test technique should I use for effective testing? How much of black box, white box?

What type of issues should I look for? Which is best discovered by testing? Which is best discovered via inspection?

How do I execute the tests? Manual/Automated? What do I automate? What tool should I consider?

How do I know that I am doing a good job? What metrics should I collect and analyse?

K. Contents of test strategy

Features to focus on:

-- List the major features of the product

-- Rate the importance of each feature (Importance = Usage frequency * Failure criticality)
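The importance rating can be worked through with a short sketch. The feature names and the 1 to 5 scales for usage frequency and failure criticality are assumptions made for illustration; only the product formula comes from the text above.

```python
# Hypothetical features: (usage frequency, failure criticality), each on a 1-5 scale.
FEATURES = {
    "login": (5, 5),
    "report_export": (2, 3),
    "search": (4, 2),
}

def rank_by_importance(features):
    """Importance = usage frequency * failure criticality, highest first."""
    scored = {name: usage * criticality
              for name, (usage, criticality) in features.items()}
    return sorted(scored.items(), key=lambda item: item[1], reverse=True)

if __name__ == "__main__":
    for name, importance in rank_by_importance(FEATURES):
        print(name, importance)
```

With these assumed ratings, login (25) ranks ahead of search (8) and report_export (6), so the strategy would direct the most test effort to login.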

L. Potential issues to uncover

Identify potential faults

Identify potential incorrect inputs that can result in failure

State the type of issues that you will aim to uncover

Identify what types of issues will be detected at each level of testing

M. Types of test to be done

State the various tests that need to be done to uncover the above potential issues

Identify the test techniques that may be used for designing effective test cases

N. Execution approach

Outline which tests will be done manually and which will be automated

Outline tools that may be used for automated testing

O. Test metrics to collect and analyse

Identify measurements that help analyse whether the strategy is working effectively

The test plan details the operational aspects of executing the test strategy. It is derived from the product description, software requirement document, use case documents, etc. It may be prepared by a test lead or test manager. A test plan outlines the following

Effort / time needed

Resources needed

Schedules

Team composition

Anticipated risks and contingency plan

Process to be followed for efficient execution

Roles of various team members and their work

As per the IEEE 829 format, the contents of the test plan are as follows

1. Test Plan Identifier: Unique company-generated number to identify this test plan

2. References: List all documents that support this test plan

3. Introduction: A short introduction to the software under test

4. Test Items: Things you intend to test within the scope of this test plan

5. Software Risk Issues: Identify what software is to be tested and what the critical areas are

6. Features to be Tested: A listing of what is to be tested from the user's viewpoint of what the system does

7. Features not to be Tested: A listing of what is not to be tested, from both the user's viewpoint of what the system does and a configuration management/version control view

8. Approach: Your overall test strategy for this test plan

9. Item Pass/Fail Criteria: What are the completion criteria for this plan? The goal is to identify whether or not a test item has passed the test process

10. Suspension Criteria and Resumption Requirements: Know when to pause in a series of tests or possibly terminate a set of tests. Once testing is suspended, how is it resumed and what are the potential impacts?

11. Test Deliverables

12. Remaining Test Tasks: There should be tasks identified for each test deliverable. Include all inter-task dependencies, skill levels, etc. These tasks should also have corresponding tasks and milestones in the overall project tracking process

13. Environmental Needs: Are there any special requirements for this test plan?

14. Staffing and Training Needs: State the staffing and learning/training needs to be met to execute the test plan

15. Responsibilities: Who is in charge? There should be a responsible person for each aspect of the testing and the test process. Each test task identified should also have a responsible person assigned

16. Schedule: Detail the work schedule as a Gantt chart

17. Planning Risks and Contingencies: State the top five (or more) anticipated risks and the mitigation plan

18. Approvals: Who can approve the process as complete and allow the project to proceed to the next level

19. Glossary

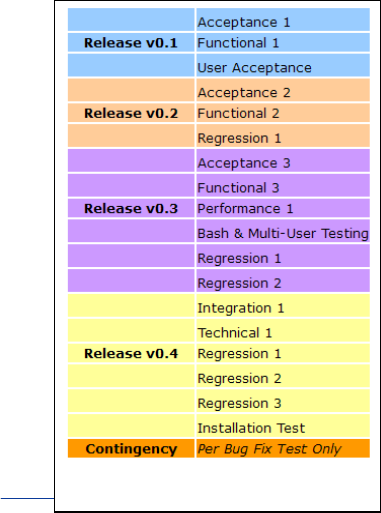

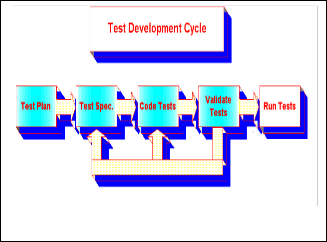

cle 4 be the final proving of the system as a single application. There should be no Sev1 or Sev2 class errors outstanding prior to the start of cycle 4 testing. Figure 4 shows the 4 different cycles (release) of testing that normally follows in software development.

Fig. 3

Test cycle is the point of time wherein the build is validated and it takes multiple test cycles to validate a product. Each test cycle should have a clear scope like what features will be tested and what test will be done. Figure -3 below shows the test development life cycle. Normally we used to run four rounds of the test cycle. In this period will be catching around

80% of the errors. With the majority of these errors fixed, stan-

dard and/or frequently used actions will be tested to prove

individual elements and total system processing in cycle 3. Regression testing of outstanding errors will be performed on an ongoing basis. When all major errors are fixed, an addi- tional set of test cases are processed in cycle 4 to ensure the system works in an integrated manner. It is intended that cy-

IJSER -20

http://www.ijser

Fig. 4

The research paper published by IJSER journal is about Effective Testing: A customized hand book for testing professionals and students 11

ISSN 2229-5518

II. TEST ESTIMATION

Test estimation is the estimation of the testing size, testing effort, testing cost and testing schedule for a specified software testing project in a specified environment using defined methods, tools and techniques. Effort estimation can be considered a science of guessing. Some of the terms commonly used in test estimation are

Testing Size – the amount (quantity) of testing that needs to be carried out. Sometimes this may not be estimated, especially in embedded testing (that is, testing that is embedded in the software development activity itself) and in cases where it is not necessary

Testing Effort – the amount of effort, in either person days or person hours, necessary for conducting the tests

Testing Cost – the expenses necessary for testing, including the expense towards human effort

Testing Schedule – the duration in calendar days or months that is necessary for conducting the tests

To do a proper estimation we need to consider the following areas

Features to focus on

Types of test to do

Development of automated scripts

Number of test cycles

Effort to design and document the test plan and scenarios/cases

Effort needed to document defects

Take expert opinion

Use previous similar projects as inputs

Break down the big work of testing into smaller pieces of work and then estimate (work breakdown structure)

Use empirical estimation models
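The relationship between effort, cost and schedule can be sketched from a work breakdown structure as follows. All task names, hours, rates and team sizes are hypothetical figures chosen for illustration, and the schedule calculation assumes the work can be divided evenly among the testers, which is rarely true in practice.

```python
import math

# Hypothetical work breakdown structure: task -> estimated effort in person hours.
WBS = {
    "design test plan and cases": 40,
    "develop automated scripts": 60,
    "execute one test cycle": 30,
    "document defects per cycle": 10,
}

def estimate(wbs, cycles, testers, hourly_rate, hours_per_day=8):
    """Derive testing effort, cost and schedule from a work breakdown structure."""
    one_off = wbs["design test plan and cases"] + wbs["develop automated scripts"]
    per_cycle = wbs["execute one test cycle"] + wbs["document defects per cycle"]
    effort_hours = one_off + cycles * per_cycle
    return {
        "effort_person_days": effort_hours / hours_per_day,
        "cost": effort_hours * hourly_rate,
        # Working days, assuming the effort is shared evenly by all testers.
        "schedule_days": math.ceil(effort_hours / (testers * hours_per_day)),
    }

if __name__ == "__main__":
    print(estimate(WBS, cycles=4, testers=2, hourly_rate=50))
```

For four cycles and two testers these assumed figures give 260 person hours, that is 32.5 person days, a cost of 13000 and a schedule of 17 working days. Expert opinion and data from previous similar projects would be used to adjust the individual task estimates.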

A number of test reports are used in various kinds of testing. Some of the test reports commonly used in industry are described below.

Weekly status report: A weekly status report gives an idea of the work actually completed in a specific week against the plan. Companies have their own standard templates for reporting this status

Test cycle report: As mentioned in section 7, product testing has multiple cycles. Management expects the correct status of each cycle for tracking the project. The test team is responsible for reporting the accomplishments in the cycle and potential testing-related risks in a standard template approved by the company.

Quality report: A quality report gives an idea of the objective and subjective assessment of the quality of the product on a specific date. Product quality depends on factors like scope, cost and time. The quality lead will consider all three factors before reporting the status in a standard template

Defect report: A defect report gives a detailed description of defects. This is one of the important deliverables in the STLC. An effective report will reduce the number of returned defects. A good report reflects the credibility of the tester and also helps to speed up defect fixes.

Final test report: This report summarizes the testing that happened at various levels and cycles. Based on this report the stakeholders can assess the release quality of the product.

Based on the work done by the team in the test execution and management area, below are some recommendations which the author would like to highlight.

Know your efficiency to know what to improve

Institute risk-based testing for catching defects in early test cycles

Set up a regression framework as early as possible and move repeatedly executed system test scenarios to regression

Introduce lightweight test automation

Use IBM Rational Build Forge, which you can easily integrate into your current environment and which supports major development languages, scripts, tools, and platforms, allowing you to continue to use your existing investments while adding valuable capabilities around process automation, acceleration, notification, and scheduling

Automate your install verification test (IVT) so that you can run the IVT for each and every build without manual intervention

Introduce a proper test tracking system using an easily understandable graphical approach

I would like to offer my deepest gratitude to the following people for their help throughout the preparation of this handbook: V Balaji, Manmohan Singh and Sundari Sadasivam, for their straightforward and constructive feedback on the handbook.

[1] Cem Kaner, Jack Falk, Hung Quoc Nguyen, 'Testing Computer Software', 2nd Edition, 2001, ISBN: 81-7722-015-2

[2] Boris Beizer, 'Software Testing Techniques', 1st Reprint Edition, 2002, ISBN: 81-7722-260-0

[3] Marciniak, J., 'Encyclopedia of Software Engineering', John Wiley & Sons Inc, 1994, ISBN 0-471-54004-8

[4] Booz Allen Hamilton, Gary McGraw, 'Software Security Testing', IEEE Security & Privacy, 2004, PP 1540-7993

[5] Toshiaki Kurokawa, Masato Shinagawa, 'Technical Trends and Challenges of Software Testing', Science & Technology Trends, 2008, Quarterly Review No. 29

[6] IEEE 829-1998 Format, Test Plan Template, 'Software Quality Engineering, Version 7.0', 2001

[7] IEEE Std. 610.12-1990, 'Standard Glossary of Software Engineering Terminology', 1990
