International Journal of Scientific & Engineering Research Volume 2, Issue 10, October-2011 1

ISSN 2229-5518

Different Pattern Recognition in Re-Ranking

Process Using Image Based Retrieval

Ravi Kumar Kallakunta, Dr. Balaji Savadam, Mahidhar Chowdary Mullapudi

Abstract— We propose a location geometric similarity scoring method that is invariant to rotation, scale, and translation, and can be easily incorporated in mobile visual search and augmented reality systems. We present a fast and efficient geometric re-ranking method that can be incorporated in a feature based image-based retrieval system that utilizes a Vocabulary Tree (VT). We form feature pairs by comparing descriptor classification paths in the VT and calculate geometric similarity score of these pairs. We compare the performance of the location geometric scoring scheme to orientation and scale geometric scoring schemes. We show in our experiments that re-ranking schemes can substantially improve recognition accuracy. We can also reduce the worst case server latency up to 1 sec and still improve the recognition performance.

Index Terms— mobile visual Search, image-based retrieval, geometric verification, robust features

T

—————————— ——————————

gated how to perform simple geometric checks by matching

HE Mobile image matching applications have gained popu- lar interest as phones become equipped with powerful compu- ting resources and high resolution cameras. Users can hold up their camera-phone and take pictures of objects they would like to inquire, and connect to a mobile image matching sys- tem that is either on the phone or a remotely located server, and identify the object and find information of the object any- where[1, 2, 3]. Most current image-based retrieval systems adopt the feature based image matching approach [4, 1, 5, 6, 3,

2]. By representing images or objects using sets of local fea- tures [7, 8, 9], recognition can be achieved by matching fea- tures between the query image and candidate database image. Fast large-scale image matching is enabled using a Vocabulary Tree (VT) [10]. Features are extracted from the database of images and a hierarchical k-means clustering algorithm is ap- plied to all of these features to generate the VT. Descriptors of the query image are also classified through the VT and a his- togram of the node visits on the tree nodes is generated. Can- didate images are then sorted according to the similarity of the candidate database image histogram to the query image histo- gram.

A number of groups have investigated different ways to speed up the GV process. In [12, 13], the authors investigate how to optimize steps to speed up RANSAC. In [5], they use geometry detected from each local features to estimate the geometric transformation. The authors in [14] have investi-

Ravi Kumar Kallakunta, Asst.Prof in Dept. of ECM, K L University, Vad- deswaram,Andhra Pradesh. E-mail: ravi.engg38@gmail.com

Dr. Balaji Savadam,Head in Dept. of ECM, K L University, Vaddeswa- ram,Andhra Pradesh. E-mail: balaji@klce.ac.in

Mahidhar Chowdary Mullapudi .(Y8EM281) , B.Tech student in the Dept. of ECM, K L University, Vaddeswaram,Andhra Pradesh. E-mail: mahi.mullapudi@gmail.com

visual words.

Small feature groups have also been proposed in to incor-

porate geometry comparison into VT matching yet at the cost

of greater complexity [15, 16]. We aim to design a fast and effi-

cient mobile visual search system, and to develop a geometric

scoring scheme for largescale image matching. We find match-

ing feature pairs between a query and candidate image using

the descriptor classification paths in the VT. Then, we trans- form location information of the feature pairs into pairwise distances and generate a geometric similarity score of two im- ages. This approach enables us to incorporate the scoring me-

thod described in [14], which uses orientation and scale. How- ever, the two types of information may not be available for certain feature descriptors, such as Rotational Invariant Fast Features (RIFF) [17]. Furthermore, we only re-rank a subset of images using the geometric similarity score. We show that by forming feature pairs using the classification paths in VT and the location geometric scoring method, we can reduce the total time needed and improve the recognition performance.

Geometric Verification (GV) is applied after feature match- ing [3, 2] to eliminate false feature matches. In this process, features of the query object are matched with features of the database objects using nearest descriptor or the ratio test [7]. Then, a geometric transformation of the location of the fea- tures in the query object and the locations of the features in the database object is estimated using RANSAC [11]. A single GV comparison typically takes 30 milliseconds to compute, which prohibits the list of candidate images for verification to a small number.

This paper is organized as the following. In Section 2, we give a brief overview the geometric re-ranking image match- ing pipeline. In Section 2.1, we present how to generate the matching feature pair list and describe the location geometric scoring scheme that we propose in Section 2.2. We present the experimental results in Section 3.

IJSER © 2011 http://www.ijser.org

International Journal of Scientific & Engineering Research Volume 2, Issue 10, October-2011 2

ISSN 2229-5518

Fig. 1. A typical mobile visual search system is presented in the blue diagram. We propose to add a geometric re-ranking stage which speeds up the system and im- proves the overall recognition result.

We consider a mobile visual search system with geometric re-ranking as illustrated in Fig. 1. The mobile client takes a picture of a query object and sends the compressed features to a server where the image recognition takes place. On the serv- er, query features are first quantized using a greedy search through the VT [6]. Then, the histogram of the quantized visu- al words is used to perform a similarity measure between a query image and a database image. We apply geometric re- ranking to a subset of the top matching candidates from the VT search. This improves the final list which is passed on to the GV stage, which typically considers a few images only. In the next section, we describe how we generate a matching fea- ture pair list M from the VT search and use the list to generate geometric similarity scores between a query feature set and a database feature set. We denote the query feature set as Fq =

{lq;i; oq;i; sq;i; dq;i}, where the variables correspond to loca- tion, orientation, scale, and descriptor respectively, and i de- notes the index within the feature set. The candidate database feature set is denoted as Fd = {ld;i; od;i; sd;i; dd;i}.

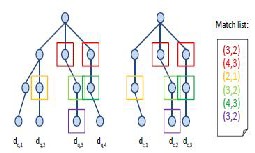

When a descriptor is classified using a VT, the descriptor is compared with the children of a node and the most similar child is selected. The process starts from the root and is re- peated until reaching the leaf node, thereby generating a path from the root to the leaf within the tree. In Fig. 2, we show the paths of two feature sets for a VT of depth 3 and branch factor

3.

Descriptors that are similar tend to be quantized along the

same path. Thus, we generate a matching feature pair list M of

a query feature set Fq and a candidate feature set Fd as fol-

lows. For each node within the VT, we examine if there is one

and only one query descriptor dq;m that has been classified to that node. Similarly, we check if there is one and only one candidate descriptor dd;n that has been classified to the node as well. If both of these criteria is satisfied for dq;m and dd;n,

we add (m; n) to the matching feature pair list, M. Since we also include interior nodes of the VT, there may be duplicate feature pairs in M. Two descriptors that have more than one single-occupancy node in common, tend to be more discri- minative. Hence, by allowing duplicate feature pairs in M, we emphasize the effect of more reliable feature matches.

Fig. 2. We show the intersecting feature paths for two sets of features, using a tree of depth 3 and branch factor 3. We start from the root node and go through the nodes breadth first in the tree to match descriptors with one another. The square blocks indicate a node that has only one descriptor are in the feature path for both the query image and candidate image. For each square block, the pair of matching descriptors is added to the list, where the square block’s color corresponds to the color of the added pair in the list.

We wish to confirm the matching pairs in M using geome- try information. In the GV stage, a rigorous validation requires estimating a geometric transformation between the query im- age and the database image. The estimation of multiple para- meters of the geometric transformation renders the process complex and time consuming; thus, we aim to estimate a sin- gle parameter instead. The simplest approach uses only orien- tation and scale information. If we assume a global rotation between the query image and the candidate matching image, then, matching feature pairs should have a consistent orienta- tion difference. Similarly, matching feature pairs should have a consistent scale difference, corresponding to the global scale change. We describe how to use these two types of informa- tion to perform scoring in Sec. 2.2.3. Using location informa- tion of features for geometric reranking can be advantageous for several reasons. First, for the client server model shown in Fig. 1, we would only need to send the location information of features, which can be compressed efficiently [18]. Second, as GV typically uses only the location information of features for finding a geometric transformation, the location information is already available for geometric similarity scoring. Further- more, it is compatible with systems that use features that are rotation invariant, such as Rotation Invariant Fast Fea- tures[17], which do not yield orientation information.

However, using location information is not intuitive when

IJSER © 2011 http://www.ijser.org

International Journal of Scientific & Engineering Research Volume 2, Issue 10, October-2011 3

ISSN 2229-5518

the geometric transformation involves translation, scaling, and rotation. We show by using distance between feature loca- tions, we are able to perform single parameter estimation us- ing location information.

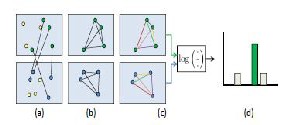

Fig. 3. The process of generating the location geometric score can be shown as the following steps: (a) features of two images are matched according to the descriptor paths, (b) distance of features within image are calculated, (c) log distance ratios of the corresponding pairs (denoted by color) are calculated, and (d) histogram of log distance ratios is formed. The maximum value of the histogram is the geometric similarity score.

2.2.1. Location geometric similarity scoring

We propose transforming the location information into dis- tance ratios to measure the geometric similarity, Fig. 3. We generate a set of log of distance ratios from the list M:

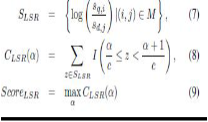

where dist(_ ; _ ) corresponds to the Euclidean distance of two points in the image (Fig. 3 (a)-(c)). For two true matching pairs, the value corresponds to the scale ratio between the query and database image. We then estimate the number of features that have similar scale ratio as follows:![]()

where I(_ ) is the indicator function, and _=c corresponds to the scale ratio difference. c is a tolerance factor that is experi- mentally determined. In practice, for speed and simplicity, we implement (2) as a histogram with soft bin assignment with _ as the histogram bin index. The geometric similarity score of the two feature sets is then given by:![]()

Using log distance ratio enables us to perform single para- meter estimation, estimating the scale ratio between the query and database image. Distances are invariant to rotation, scale, and translation. Distance histograms have been used to match point sets [19]. We extend this idea and use distance ratios, while still preserving robustness against similarity transforms.

2.2.2. Orientation geometric similarity scoring

Similar to what was described in the previous section, the orientation geometric scoring is formed as follow:

Intuitively, this orientation difference corresponds to the global rotation angle between the query image and the data- base image.

2.2.3. Scale geometric similarity scoring

Scale can also be compared by simply using the feature pairs in M. The scale geometric scoring is formed as follow:

In this case, the log scale difference indicates the scale differ- ence between the query image and the database image.

To demonstrate the performance of our proposed algo- rithm, we implement and integrate it in the mobile visual search system [3, 2]. We collect 1M images of CD, DVD and book covers and remove similar product images based on ap- pearance. SURF [8] features are extracted from the database images to train the VT. We use a tree configuration of depth 6 and branch factor 10. The feature paths of each database image is generated by classifying the descriptors down the VT and loaded during the geometric re-ranking stage. We pick the the highest scoring 250 images from the VT search and rerank them based on the computed geometric similarity score. We test the recognition performance using a thousand query im- ages.

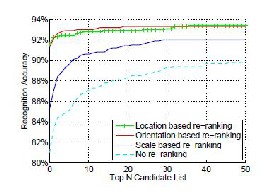

Fig. 4. Performance comparison of different geometric re-ranking schemes. Correct match is declared if the true match is within the top N candidates.

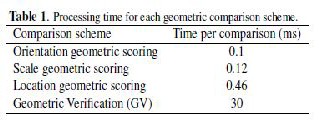

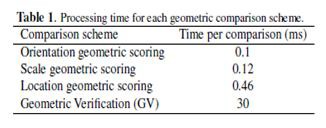

We show the performance of the different geometric scor- ing methods and the results without re-ranking in Fig. 4. We

IJSER © 2011 http://www.ijser.org

International Journal of Scientific & Engineering Research Volume 2, Issue 10, October-2011 4

ISSN 2229-5518

declare a match to be correct if the corresponding image is within the top N candidate in the list. By incorporating a geo- metric re-ranking method, the recognition result can be boosted significantly. The location geometric scoring and the orientation geometric scoring method perform similarly and has a clear advantage over the scale based method. For both methods, the accuracy of the top matching result is improved from 81% to 91% compared to that without re-ranking. We show the average time for the three different geometric scor- ing methods along with the time required for GV in Tab. 1. We see that the location geometric similarity scoring takes longer than the orientation and scale geometric scoring methods. This is because the total number of calculations for the distance is O(n2). All three are only a small fraction of the time of one single GV comparison, which is 30 ms.

A typical system performs GV for the top 50 images with preemptive stop, yielding a worst case scenario of 30.50 = 1500 ms latency with a recognition rate of ~90%. For a geometric re- ranking system using location geometric scoring, we need on- ly retain the top 5 images for GV while providing a system that has a recognition performance of ~92%. In this case the worst case time is only (30.5 + 115) = 265 ms, which is only

<18% of the typical system and 1 second faster.

We develop a new method of incorporating geometric simi- larity re-ranking for mobile image matching systems. Based on the classification feature paths in the VT, a list of matching database and query feature pairs is computed. We use geome- tric similarity scoring to re-rank candidate matching images given by the tree search. We develop a location geometric scoring that is invariant to similarity transform, compatible with rotational invariant features, and can be conveniently integrated in a mobile visual search system. We improve the recognition accuracy from 81% to 91% for the top matching result, and can reduce the overall latency by 1 sec.

[1] G. Takacs, V. Chandrasekhar, N. Gelfand, Y. Xiong, W. Chen, T. Bismpigian- nis, R. Grzeszczuk, K. Pulli, and B. Girod, “Outdoors augmented reality on mo- bile phone using loxel-based visual feature organization,” in ACM International Conference on Multimedia Information Retrieval, Vancouver, Canada, October

2008.

[2] S. S. Tsai, D. Chen, J. Singh, and B. Girod, “Rate-efficient, real-time CD cover

recognition on a camera-phone,” in ACM International Conference on Multime- dia, Vancouver, Canada, October 2008.

[3] D. Chen, S. S. Tsai, R. Vedantham, R. Grzeszczuk, and B. Girod, “Streaming mobile augmented reality on mobile phones,” in International Symposium on Mixed and Augmented Reality, Orlando, FL, USA, October 2009.

[4] J. Sivic and A. Zisserman, “Video google: a text retrieval approach to object matching in videos,” in International Conference on Computer Vision, 2003, vol.

2, pp. 1470–1477.

[5] J. Philbin, O. Chum, M Isard, J. Sivic, and A. Zisserman, “Object retrieval with

large vocabularies and fast spatial matching,” in Conference on Computer Vision

and Pattern Recognition, 2007, pp. 1–8.

[6] G. Schindler, M. Brown, and R. Szeliski, “City-scale location recognition,” in

Conference on Computer Vision and Pattern Recognition, New York, NY, USA, June 2007, pp. 1–7.

[7] D. Lowe, “Distinctive image features from scale-invariant keypoints,” Interna- tional Journal of Computer Vision, vol. 60, no. 2, pp. 91–110, November 2004.

[8] H. Bay, T. Tuytelaars, and L. V. Gool, “SURF: speeded up robust features,” in

European Conference on Computer Vision, Graz, Austria, May 2006, pp. 404–

417.

[9] V. Chandrasekhar, G. Takacs, D. Chen, S. S. Tsai, R. Grzeszczuk, and B. Girod, “CHoG: Compressed Histogram of Gradients,” in In Proceedings of Conference on Computer Vision and Pattern Recognition, 2009.

[10] D. Nister and H. Stewenius, “Scalable recognition with a vocabulary tree,” in

Conference on Computer Vision and Pattern Recognition, New York, NY, USA,

June 2006, pp. 2161–2168.

[11] M. Fischler and R. Bolles, “Random sample consensus: a paradigm for mod-

el fitting with applications to image analysis and automated cryptography,” Communications of ACM, vol. 24, no. 1, pp. 381–395, 1981.

[12] O. Chum, J. Matas, and J. V. Kittler, “Locally optimized RANSAC,” in Pro-

ceedings of DAGM, 2003, pp. 236–243.

[13] O. Chum, T. Werner, and J. Matas, “Epipolar geometry estimation via ransac

benefits from the oriented epipolar constraint,” in International Conference on Pattern Recognition, Washington, DC, USA, 2004, pp. 112–115, IEEE Compute- riety.

[14] H. Jegou, M. Douze, and C. Schmid, “Hamming embedding and weak geo-

metric consistency for large scale image search,” in European Conference on

Computer Vision, 2008, pp. I: 304–317.

[15] Z. Wu, Q. Ke, M. Isard, and J. Sun, “Bundling features for large scale partial-

duplicate web image search,” in Conference on Computer Vision and Pattern

Recognition, 2009, pp. 25–32.

[16] O. Chum, M. Perdoch, and J. Matas, “Geometric min-hashing: Finding a (thick) needle in a haystack,” in Conference on Computer Vision and Pattern Recognition. 2009, pp. 17–24, IEEE.

[17] G. Takacs, V. Chandrasekhar, S. S. Tsai, D. M. Chen, R. Vedantham, R. Grzeszczuk, and B. Girod, “Unified real-time tracking and recognition with rotation-invariant fast features,” in Conference on Computer Vision and Pattern Recognition, 2010, p. submitted.

[18] S. S. Tsai, D. M. Chen, G. Takacs, V. Chandrasekhar, R. Vedantham, R. Grzeszczuk, and B. Girod, “Location coding for mobile image retrieval,” in Proc.

5th International Mobile Multimedia Communications Conference, 2009.

ussian

: 369–

IJSER © 2011 http://www.ijser.org