International Journal of Scientific & Engineering Research, Volume 4, Issue 12, December-2013 703

ISSN 2229-5518

An Efficient Representation of Shape for Object Recognition and Classification using Circular Shift Method

G.Karuna, Research Scholar1

GRIET, Hyderabad

Dr.B.Sujatha

Professor2

GIET, Rajahmundry birudusujatha@gmail.com

Dr.P.Chandrasekhar Reddy

Professor3

Dept. of ECE JNTUH, Hyderabad

Abstract- Object recognition and classification is a heavily researched domain in computer vision and image processing. The most valuable feature for object recognition is shape, which is defined in 2D space. In this work, a circular shift algorithm is used to find the exact shape of the object. Finding an appropriate set of features is an essential problem in the design of an object recognition system. Before an object is recognized, its shape is first extracted; a K-nearest neighbor classifier is then used for classification. Experimental results show that the method achieves a classification accuracy above 96% on the Flavia dataset, which contains 32 kinds of plant leaves, a better performance than the original work.

Index Terms- Computer vision, object recognition and classification, shape, color, texture, K-NN classifier.


Object recognition plays a crucial role in computer vision applications, specifically in the semantic description of visual content, and yet it is a simple task for a human observer [1], [2]. Object recognition is the task of identifying and labeling the parts of a two-dimensional (2D) image of a scene that correspond to objects in the scene [3]. Recognizing an object from visual information is challenging. Objects are very often distinguishable on the basis of their visible features; among these features, an object's shape is frequently an important key to its recognition. The representation of shape is thoroughly discussed in Refs. [4, 5], both of which propose sets of criteria for the evaluation of shape representations. Effectively representing shape, however, remains one of the biggest hurdles in the field of automated recognition.

Object recognition is difficult because a combination of factors must be considered to identify objects. These factors may include limitations on allowable shapes, the semantics of the scene context, and the information present in the image itself [6]. Objects are likely to appear at different locations in the image, and they can be deformed, rotated, rescaled, differently illuminated or occluded with respect to a reference view [7]. For effective visual object recognition, a large number of views of each object are required because of viewpoint changes, and it is also necessary to recognize a large number of objects, even for relatively simple tasks [8]. Constructing appropriate object models is vital to object recognition and is a fundamental difficulty in computer vision. Desirable characteristics of a model include good representation of objects and fast, efficient learning algorithms that need minimal supervised information [9]. The most common object recognition approaches can be classified into appearance-based [10, 11, 12, 13], model-based [14, 15] and approaches based on local features [16, 17]. Many practical object recognition systems are appearance-based or model-based. To be successful, they must address two major interrelated problems: object representation and object matching. The representation should be good enough to allow for reliable and efficient matching [18].

In this paper, we investigate two techniques from the literature for recognizing objects in digital images, drawn from image processing and pattern recognition: the circular shift algorithm and the k-Nearest Neighbors (k-NN) classifier. Both techniques are programmed in MATLAB, and the investigation is performed with the aid of the Flavia dataset, which contains gray scale images of 20 objects; for each object, 72 views are gathered, with a separation of 5°. Initially, four distinct datasets are formed from the original dataset. The formed datasets are of size 6, 12, 24 and 36 respectively, each with different views of the objects for training. The programmed techniques are then trained with the formed datasets. The results of the investigation are presented in the experimental results section.

The remainder of the paper is organized as follows: Section 1 discusses the related studies. Section 2 describes the notations of the proposed system. Section 3 describes the proposed system. Section 4 explains the geometric shape features for the object recognition system. Section 5 presents the experimental results, and Section 6 concludes the paper.

IJSER © 2013 http://www.ijser.org


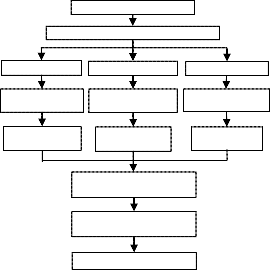

This paper describes a method built on three integrally linked algorithms: the first computes the right shift image, the second the circular shift image, and the third the diagonal shift image. The method extracts the exact shape of the object from its edges using circular shifting operations in the left, right, top, bottom and diagonal directions. The following notations are used for the detection of shape using edges based on circular shifting.


3. CRS Circular Right Shift

4. CS Column Shift

5. CTS Circular Top Shift

6. CBS Circular Bottom Shift

7. DS Diagonal Shift

Using the above notations, the following algorithms are used for shifting different images.

Algorithm 1: Right shift (RS) image

Step 1: Read the original image (I1) of size m×n.

Step 2: Apply the CLS operation on image I1 to produce I2.

Step 3: Image differencing between I1 and I2 obtains the left slope edge pixels I3.

Step 4: Apply the CRS operation on image I1 to produce I4.

Step 5: Image differencing between I1 and I4 obtains the right slope edge pixels I5.

Step 6: Obtain the right shift (RS) image I6, i.e. I6 = I3 + I5.

Fig. 1. Flowchart for the proposed circular shift method for extraction of edge shape image: input original color image (I1); extract the color components (CR, CG, CB); apply the shift operation to each component (CSR, CSG, CSB); edge map for representation of shape; post processing of the edge shape image; final shape of the image.
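The steps of the right shift algorithm can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' MATLAB implementation: the shift distance of one pixel, the use of the absolute difference, and the saturating addition at 255 are all assumptions, and the function names are hypothetical.

```python
def circular_shift_columns(image, k):
    """Rotate every row k pixels to the right, wrapping around (k < 0 rotates left)."""
    shifted = []
    for row in image:
        n = len(row)
        s = k % n
        shifted.append(row[n - s:] + row[:n - s])
    return shifted


def abs_difference(a, b):
    """Pixel-wise absolute difference of two equally sized images (assumed differencing)."""
    return [[abs(x - y) for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def add_images(a, b, max_value=255):
    """Pixel-wise sum, clipped to max_value (the clipping is an assumption)."""
    return [[min(x + y, max_value) for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def right_shift_image(i1):
    """Steps 2 to 6: combine left slope and right slope edge pixels."""
    i2 = circular_shift_columns(i1, -1)   # CLS: circular left shift by one pixel
    i3 = abs_difference(i1, i2)           # left slope edge pixels
    i4 = circular_shift_columns(i1, 1)    # CRS: circular right shift by one pixel
    i5 = abs_difference(i1, i4)           # right slope edge pixels
    return add_images(i3, i5)             # I6 = I3 + I5


# A bright vertical bar on a dark background: only its two borders respond.
image = [[0, 0, 9, 9, 9, 0, 0],
         [0, 0, 9, 9, 9, 0, 0]]
print(right_shift_image(image))  # [[0, 9, 9, 0, 9, 9, 0], [0, 9, 9, 0, 9, 9, 0]]
```

Because the shift is circular, pixels on the image border are compared with the opposite border; for objects that do not touch the border this has no effect on the result.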

Algorithm 2: Circular shift (CS) image

Step 1: Read the original image (I1) of size m×n.

Step 2: Apply the CTS operation on image I1 to produce I2.

Step 3: Image differencing between I1 and I2 obtains the top slope edge pixels I3.

Step 4: Apply the CBS operation on image I1 to produce I4.

Step 5: Image differencing between I1 and I4 obtains the bottom slope edge pixels I5.

Step 6: Obtain the circular shift (CS) image I6, i.e. I6 = I3 + I5.
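The top and bottom shift steps can be sketched in the same way, this time rotating whole rows. As before, this is only an illustration: it assumes that CTS moves the image content up by one row, that CBS moves it down by one row, and that differencing means the absolute difference. The function names are hypothetical.

```python
def circular_shift_rows(image, k):
    """Rotate the rows of the image down by k positions, wrapping around (k < 0 rotates up)."""
    m = len(image)
    s = k % m
    return [list(row) for row in image[m - s:] + image[:m - s]]


def abs_difference(a, b):
    """Pixel-wise absolute difference of two equally sized images (assumed differencing)."""
    return [[abs(x - y) for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def add_images(a, b, max_value=255):
    """Pixel-wise sum, clipped to max_value (the clipping is an assumption)."""
    return [[min(x + y, max_value) for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def circular_shift_image(i1):
    """Steps 2 to 6: combine top slope and bottom slope edge pixels."""
    i2 = circular_shift_rows(i1, -1)   # CTS: circular top shift by one row (assumed direction)
    i3 = abs_difference(i1, i2)        # top slope edge pixels
    i4 = circular_shift_rows(i1, 1)    # CBS: circular bottom shift by one row (assumed direction)
    i5 = abs_difference(i1, i4)        # bottom slope edge pixels
    return add_images(i3, i5)          # I6 = I3 + I5


# A bright horizontal bar, three rows tall, in a one-column image.
image = [[0], [0], [5], [5], [5], [0], [0]]
print(circular_shift_image(image))  # [[0], [5], [5], [0], [5], [5], [0]]
```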

Algorithm 3: Diagonal shift (DS) image

Step 1: Read the original image (I1) of size m×n.

Step 2: Apply the CS operation on the diagonal image I1 to produce I2.

Step 3: Image differencing between I1 and I2 obtains the diagonal edge (DS) pixels I3.
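The text does not spell out how the diagonal shift is formed. One possible reading, sketched below purely as an assumption, is to shift the image circularly by one row and one column at the same time and then difference it against the original. The function names and the default offsets are hypothetical.

```python
def diagonal_shift(image, dr=1, dc=1):
    """Move the image content dr rows down and dc columns right, wrapping around."""
    m, n = len(image), len(image[0])
    return [[image[(r - dr) % m][(c - dc) % n] for c in range(n)] for r in range(m)]


def diagonal_shift_image(i1):
    """Steps 2 and 3 under the assumed reading: shift diagonally, then difference."""
    i2 = diagonal_shift(i1)  # assumed one-pixel shift along the main diagonal
    return [[abs(x - y) for x, y in zip(ra, rb)] for ra, rb in zip(i1, i2)]


# A single bright pixel responds at its own position and at its diagonal neighbour.
image = [[0, 0, 0, 0],
         [0, 9, 0, 0],
         [0, 0, 0, 0],
         [0, 0, 0, 0]]
for row in diagonal_shift_image(image):
    print(row)
# [0, 0, 0, 0]
# [0, 9, 0, 0]
# [0, 0, 9, 0]
# [0, 0, 0, 0]
```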

3 Proposed Method for Object Shape Extraction

The most valuable feature for object recognition is its shape, which is defined for 2D space. The second most important feature is color, which is a perception of the wavelength of light reflected from the surface of an object. Color is frequently the first characteristic used when looking for an object in the surroundings. This section presents in detail the proposed method of representing shapes in natural images based on color and shape features. The proposed method for extraction of the object shape, based on Algorithms 1, 2 and 3, is shown in the flowchart of Fig. 1 for the extraction of the edge shape image.

The proposed method consists of the following steps.

Step 1: Read the original image (I1) of size m×n×p.

Step 2: Extract the individual color components (i.e. R, G, and B) of the image I1.

Step 3: Obtain the real complement of each component separately.

Step 4: Find the RS image for each real complement.

Step 5: Find the CS image for each real complement.

Step 6: Find the DS image for each real complement.

Step 7: To obtain strong, connected edges for the representation of shape, add the results of Step 4, Step 5 and Step 6.

Step 8: Post-process the resultant edge image to get the final output of the shape image.
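The eight steps can be tied together in a compact sketch. Several details are assumptions made only for illustration: the complement is taken as 255 minus the pixel value, all shifts are by one pixel, the diagonal shift moves one row and one column at once, sums are clipped at 255, and the post-processing of Step 8 is replaced by a simple threshold. The function names and the `threshold` parameter are hypothetical.

```python
def shift(channel, dr, dc):
    """Circularly move the content dr rows down and dc columns right."""
    m, n = len(channel), len(channel[0])
    return [[channel[(r - dr) % m][(c - dc) % n] for c in range(n)] for r in range(m)]


def diff(a, b):
    """Pixel-wise absolute difference."""
    return [[abs(x - y) for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def add(*images):
    """Pixel-wise sum of any number of images, clipped at 255."""
    return [[min(sum(px), 255) for px in zip(*rows)] for rows in zip(*images)]


def edge_map(channel):
    """Steps 3 to 7 for a single color component."""
    comp = [[255 - v for v in row] for row in channel]                        # Step 3 (assumed complement)
    rs = add(diff(comp, shift(comp, 0, -1)), diff(comp, shift(comp, 0, 1)))   # Step 4: RS image
    cs = add(diff(comp, shift(comp, -1, 0)), diff(comp, shift(comp, 1, 0)))   # Step 5: CS image
    ds = diff(comp, shift(comp, 1, 1))                                        # Step 6: DS image (assumed)
    return add(rs, cs, ds)                                                    # Step 7


def extract_shape(rgb_image, threshold=50):
    """Steps 1 to 8 for an image given as rows of (R, G, B) tuples."""
    channels = [[[px[k] for px in row] for row in rgb_image] for k in range(3)]  # Step 2
    combined = add(*(edge_map(ch) for ch in channels))
    # Step 8: a plain threshold stands in for the unspecified post-processing.
    return [[1 if v >= threshold else 0 for v in row] for row in combined]


# One dark pixel on a white 5x5 background.
white, black = (255, 255, 255), (0, 0, 0)
image = [[white] * 5 for _ in range(5)]
image[2][2] = black
for row in extract_shape(image):
    print(row)
# [0, 0, 0, 0, 0]
# [0, 0, 1, 0, 0]
# [0, 1, 1, 1, 0]
# [0, 0, 1, 1, 0]
# [0, 0, 0, 0, 0]
```

A uniform image produces an all-zero output, since every shifted copy is identical to the original.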

The geometric features consist of aspect ratio, convexity, sphericity, solidity and circularity. Figure 2 and Equations (1)-(5) present the geometric shape descriptors used for the object recognition system.


Fig. 2. The leaf ROI: (a) convex hull, (b) ellipse, (c) MBR, (d) incircle and excircle.

During calculation of the shape descriptors, the area of the object is defined as the net area, not the filled area, because the net area represents the shape better than the filled area.

1. Aspect ratio is the ratio between the maximum length $L_{max}$ and the minimum length $L_{min}$ of the 'Minimum Bounding Rectangle' (MBR) around the leaf: $f_1 = L_{max}/L_{min}$

2. Convexity is the relative amount that an image object differs from a convex object. Convexity is defined as the ratio between $A_{roi}$ and the convex hull area ($A_c$): $f_2 = A_c/A_{roi}$

3. Sphericity is the ratio of the radius of the incircle of the ROI ($r_i$) and the radius of the excircle of the ROI ($r_c$): $f_3 = r_i/r_c$

4. Solidity measures the density of an object. A measure of solidity can be obtained as the ratio of the image object's area to the area of the object's convex hull. A value of 1 signifies a solid object, and a value less than 1 signifies an object having an irregular boundary or containing holes (Wirth 2005): $f_4 = A_{roi}/A_c$

5. Circularity is defined based on the mean and variance of the ROI: $f_5 = \mu_{roi}/\sigma_{roi}$
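As a worked illustration, the five descriptors can be assembled into a feature vector once the underlying measurements are available. The sketch below assumes the measurements (bounding rectangle sides, areas, radii, mean and deviation) have already been computed elsewhere. The class and field names are hypothetical, `r_c` is an assumed name for the excircle radius, `sigma_roi` is assumed to be the deviation paired with the mean in the circularity ratio, and the solidity formula is taken from its verbal definition.

```python
from dataclasses import dataclass


@dataclass
class LeafMeasurements:
    l_max: float      # longer side of the minimum bounding rectangle
    l_min: float      # shorter side of the minimum bounding rectangle
    a_roi: float      # net area of the leaf region
    a_c: float        # area of the convex hull
    r_i: float        # radius of the incircle
    r_c: float        # radius of the excircle (assumed symbol)
    mu_roi: float     # mean used in the circularity ratio
    sigma_roi: float  # deviation used in the circularity ratio (assumed)


def shape_features(m):
    """Return [f1, f2, f3, f4, f5] for one leaf."""
    return [
        m.l_max / m.l_min,       # f1: aspect ratio
        m.a_c / m.a_roi,         # f2: convexity, as written in the text
        m.r_i / m.r_c,           # f3: sphericity
        m.a_roi / m.a_c,         # f4: solidity, object area over convex hull area
        m.mu_roi / m.sigma_roi,  # f5: circularity
    ]


leaf = LeafMeasurements(l_max=4.0, l_min=2.0, a_roi=8.0, a_c=10.0,
                        r_i=1.0, r_c=2.0, mu_roi=6.0, sigma_roi=3.0)
print(shape_features(leaf))  # [2.0, 1.25, 0.5, 0.8, 2.0]
```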

The most valuable feature for object recognition is shape, and the second most important is color, which is a perception of the wavelength of light reflected from the surface of an object. By considering the features of color and shape, a novel method for an object recognition system is developed. Based on the outline of the object's shape, different shape features are used for object classification. The method achieves an accuracy of 96.5%, which is better than the result of the original work.

TABLE 1: Mean percentage correct classification rate of Flavia Dataset

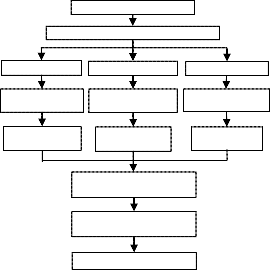

To show the significance of the proposed method, the present study evaluates shape features on the extracted shape image for object recognition and classification of images from the Flavia dataset. The proposed method is tested on both standard and real-world images to show its efficiency. The standard image dataset is the Flavia dataset, which was introduced in [19] and contains 1907 images of green leaves from 32 species (50 to 77 images for each species). Each image contains exactly one leaflet; there are no compound or occluded leaves. All images have a resolution of 1600×1200 px. The dataset is publicly available at the following URL: http://flavia.sourceforge.net/. Fig. 2 shows the Flavia dataset of 32 leaves with their original names.

For classification, the images are randomly divided into non-overlapping windows of different sizes, and the resulting windows are divided into two disjoint sets, one for training and one for testing. Each set contains leaves from the 32 classes. Shape features are evaluated on each leaf of the Flavia dataset and the results are stored in the feature database. The similarity between the query image and the images in the database is measured with the Euclidean distance metric, which is defined in Equation (1).

$$d(Q, R) = \sqrt{\sum_{i=1}^{N} (Q_i - R_i)^2} \qquad (1)$$

where d(Q,R) is the distance between the features of the query image Q and the features of the reference image R, and N is the number of shape features. From Table 1, it is observed that the mean success rate for the Flavia dataset is 96.5%. The success rate is improved considerably by combining statistical and structural approaches.
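The matching stage can be illustrated with a small k-nearest neighbor classifier over shape feature vectors, using the Euclidean distance between a query Q and each reference R. This is a sketch under assumptions: the value of k, the majority vote, the two-feature vectors and the class labels in the example are hypothetical and are not taken from the experiments.

```python
import math
from collections import Counter


def euclidean(q, r):
    """Euclidean distance between two feature vectors of equal length N."""
    return math.sqrt(sum((qi - ri) ** 2 for qi, ri in zip(q, r)))


def knn_classify(query, database, k=3):
    """Label the query by majority vote among its k nearest reference vectors.

    database is a list of (feature_vector, label) pairs.
    """
    neighbours = sorted(database, key=lambda item: euclidean(query, item[0]))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]


# Hypothetical two-feature database with two leaf classes.
database = [
    ([1.0, 1.0], "ginkgo"),
    ([1.2, 0.9], "ginkgo"),
    ([0.9, 1.1], "ginkgo"),
    ([5.0, 5.0], "oleander"),
    ([5.1, 4.8], "oleander"),
]
print(euclidean([0.0, 0.0], [3.0, 4.0]))    # 5.0
print(knn_classify([1.1, 1.0], database))   # ginkgo
```

In practice the features are usually rescaled to comparable ranges before distances are computed, so that no single descriptor dominates the sum.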


Fig.2. Flavia Dataset: Pubescent bamboo, Chinese horse chestnut, Anhui Barberry, Chinese redbud, True indigo, Japanese maple, Nanmu, Castor aralia, Chinese cinnamon, Deodar, Ginkgo, Crape myrtle, Oleander, Yew plum pine, Japanese Flowering Cherry, Glossy Privet, Chinese Toon, Peach, Goldenrain tree, Big-fruited Holly, Japanese cheesewood, Wintersweet, Camphor tree, Japan Arrowwood, Sweet osmanthus, Ford Woodlotus, Trident maple, Beale's barberry, Southern magnolia, Canadian poplar, Chinese tulip tree, Tangerine.

The authors would like to express their gratitude to Chairman K.V.V. Satya Narayana Raju, MLC, and K. Sasikiran Varma, Secretary, GIET, for providing advanced research lab facilities through the Srinivasa Ramanujan Research Forum, GIET.

[1] P. Duygulu, K. Barnard, N. de Freitas, and D. Forsyth, "Object Recognition as Machine Translation: Learning a lexicon for a fixed image vocabulary," In European Conference on Computer Vision (ECCV), Copenhagen, 2002.

[2] D. A. Forsyth and J. Ponce, "Computer Vision: a modern approach," Prentice Hall, 2002.

[3] P. Suetens, P. Fua, and A. J. Hanson, "Computational Strategies for Object Recognition," ACM Computing Surveys, Vol. 24, No. 1, pp. 5–62, March 1992.

[4] D. Marr, H. Nishihara, "Representation and recognition of the spatial organization of three-dimensional shapes," Proc. R. Soc. London B 200 (1978) 269–294.

[5] M. Brady, "Criteria for Representations of Shape," Human and Machine Vision, Academic Press, New York, 1983, pp. 39–84.

[6] P. Suetens, P. Fua, and A. J. Hanson, "Computational Strategies for Object Recognition," ACM Computing Surveys, Vol. 24, No. 1, pp. 5–62, March 1992.

[7] Michela Lecca, "A Self Configuring System for Object Recognition in Color Images," Proceedings of World Academy of Science, Engineering and Technology, Vol. 12, pp. 35-40, March 2006.

[8] Luke Cole, David Austin, Lance Cole, "Visual Object Recognition using Template Matching," Proceedings of the 2004 Australasian Conference on Robotics and Automation, Canberra, Australia, Nick Barnes & David Austin, December 6-8, 2004. ISBN: 0-9587583-6-0.

[9] Nicolas Loeff, Himanshu Arora, Alexander Sorokin, and David Forsyth, "Efficient Unsupervised Learning for Localization and Detection in Object Categories," In NIPS 18, pp. 811–818, 2006.

[10] Philip Blackwell and David Austin, "Appearance Based Object Recognition with a Large Dataset using Decision Trees," Proceedings of the Australasian Conference on Robotics and Automation, 2004.

[11] Murphy-Chutorian, E. and Triesch, J., "Shared Features for Scalable Appearance-Based Object Recognition," Seventh IEEE Workshops on Application of Computer Vision, Vol. 1, pp. 16-21, 5-7 Jan. 2005.

[12] Azad, P., Asfour, T. and Dillmann, R., "Combining Appearance-based and Model-based Methods for Real-Time Object Recognition and 6D Localization," In Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 5339-5344, 9-15 Oct. 2006.

[13] Martin Winter, "Spatial Relations of Features and Descriptors for Appearance Based Object Recognition," PhD thesis, Graz University of Technology, Faculty of Computer Science, 2007.

[14] Ajmal S. Mian, Mohammed Bennamoun and Robyn Owens, "Three-Dimensional Model-Based Object Recognition and Segmentation in Cluttered Scenes," IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 28, No. 10, pp. 1584-1601, 2006.

[15] P.A. Larsen, J.B. Rawlings and N.J. Ferrier, "Model-based object recognition to measure crystal size and shape distributions from in situ video images," Chemical Engineering Science, Vol. 62, No. 5, pp. 1430-1441, March 2007.

[16] Thomas Serre, Lior Wolf, Tomaso Poggio, "Object Recognition with Features Inspired by Visual Cortex," Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR'05), Volume 2, pp. 994-1000, June 20-26, 2005.

[17] Sungho Kim, Kuk-Jin Yoon and In So Kweon, "Object recognition using a generalized robust invariant feature and Gestalt's law of proximity and similarity," Pattern Recognition, Vol. 41, No. 2, pp. 726-741, 2008.

[18] A. Diplaros, T. Gevers and I. Patras, "Color-Shape Context for Object Recognition," IEEE Workshop on Color and Photometric Methods in Computer Vision (in conjunction with ICCV), October 2003.

[19] Wu G.S., Bao F.S., Xu E.Y., Wang Y.X., Chang Y.F., Xiang Q.L., "A Leaf Recognition Algorithm for Plant Classification Using Probabilistic Neural Network," Signal Processing and Information Technology, 2007 IEEE International Symposium on.
